Chaining Language and Knowledge Resources with LLM(s)

a tutorial at ROCLING 2023

Shu-Kai Hsieh 謝舒凱

Graduate Institute of Linguistics/Brain and Mind, NTU

Piner Chen and Dachen Lian 陳品而 連大成

Graduate Institute of Linguistics, NTU

Today's topics

Language Resources and Large Language Models: possible linkages

Hands-on code session

Background

Language Models

Probability distributions over sequences of words, tokens or characters (Shannon, 1948; 1951)

A core task in NLP, often framed as next-token prediction.
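To make "probability distribution over sequences" and "next-token prediction" concrete, here is a minimal sketch using a toy bigram model estimated by counting. The corpus and function names are hypothetical, chosen for illustration. Real LLMs replace the counts with a neural network conditioned on the whole prefix, but the chain-rule factorization of the sequence probability is the same idea.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count bigrams over tokenized sentences padded with <s> and </s>."""
    counts = defaultdict(Counter)
    for sent in corpus:
        tokens = ["<s>"] + sent + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_dist(counts, prev):
    """Maximum-likelihood estimate of P(next | prev)."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def sequence_prob(counts, sent):
    """Chain rule under a bigram (Markov) assumption:
    P(w_1..w_n) = prod_i P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sent + ["</s>"]
    p = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        p *= next_token_dist(counts, prev).get(nxt, 0.0)
    return p


# Toy, hand-made corpus (hypothetical data).
corpus = [["我", "喝", "茶"], ["我", "喝", "水"], ["你", "喝", "茶"]]
model = train_bigram(corpus)
print(next_token_dist(model, "喝"))               # P(茶|喝)=2/3, P(水|喝)=1/3
print(sequence_prob(model, ["我", "喝", "茶"]))   # (2/3)*1*(2/3)*1 = 4/9
```

An unseen transition gets probability 0 here; real language models avoid this through smoothing or, in neural LMs, through a softmax over the whole vocabulary.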

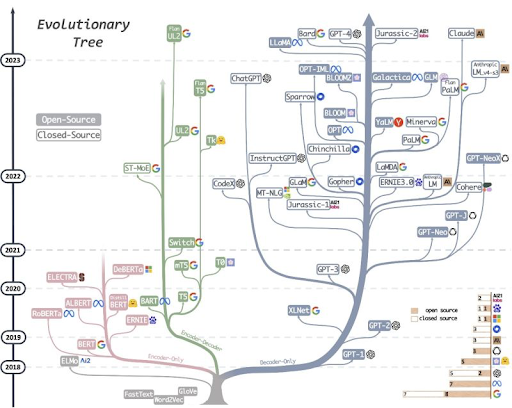

Pre-trained large language models burst onto the scene

Pre-trained Large Language Models

- Transformer-based pre-trained Large Language Models changed NLP/the world

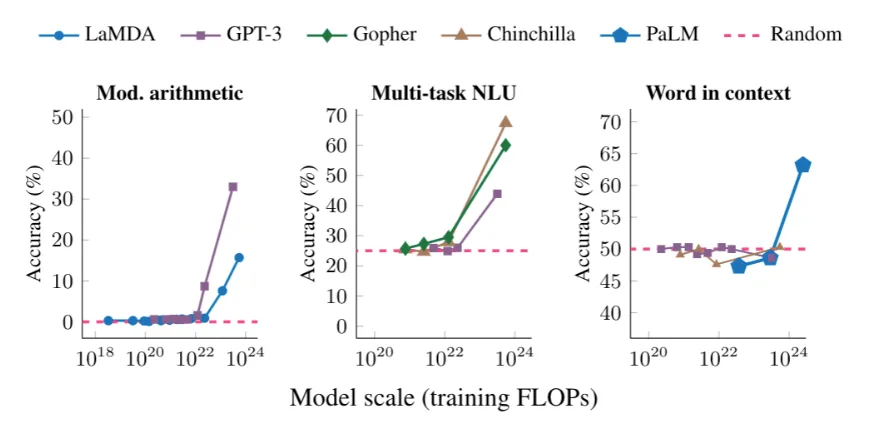

- Emergent abilities in LLMs: unpredictable abilities in large language models. From generation to understanding?

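Only after LLMs began showing sudden "aha" behavior in structural understanding (emergence) did people start to expect reasoning abilities from them.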

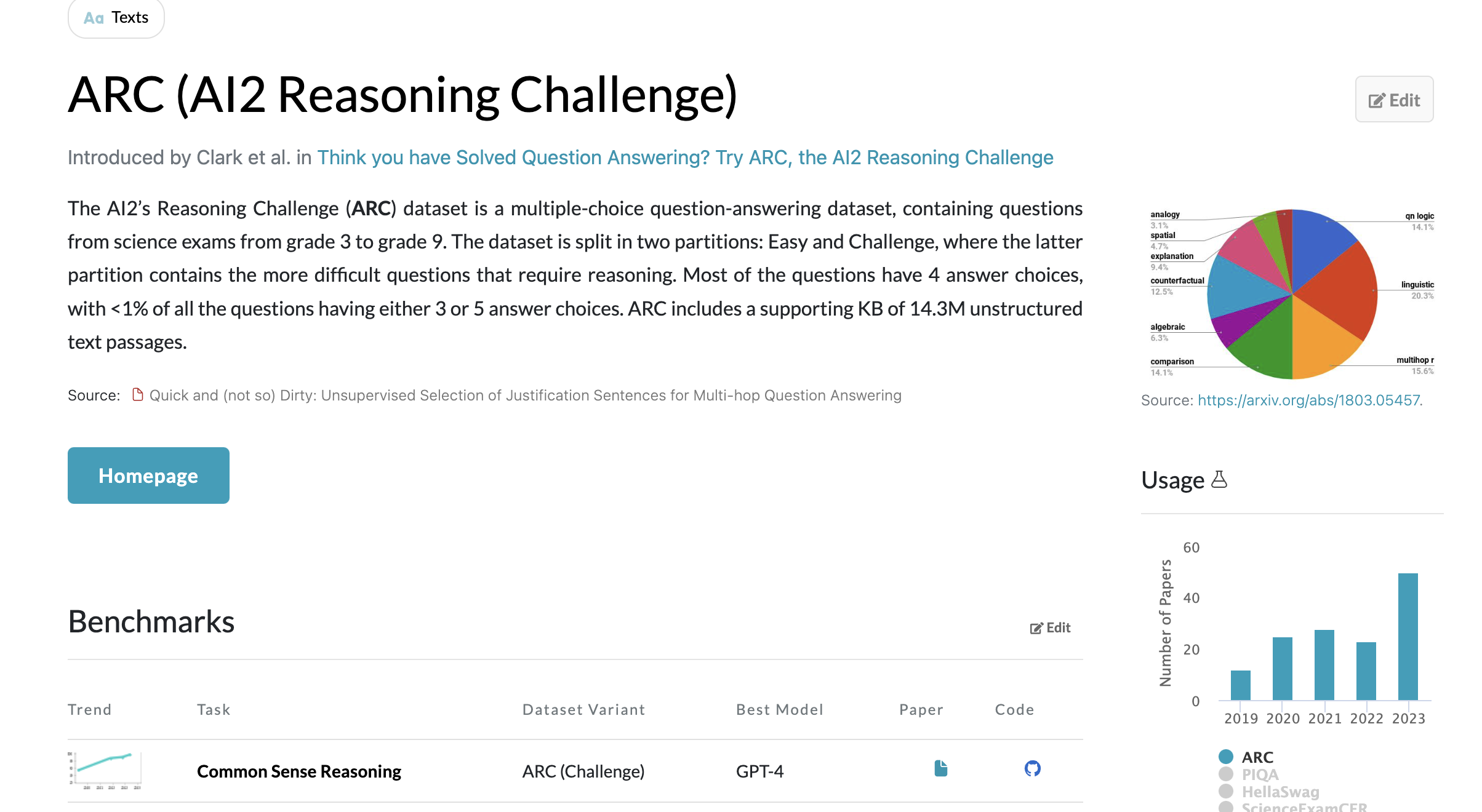

Science exam reasoning

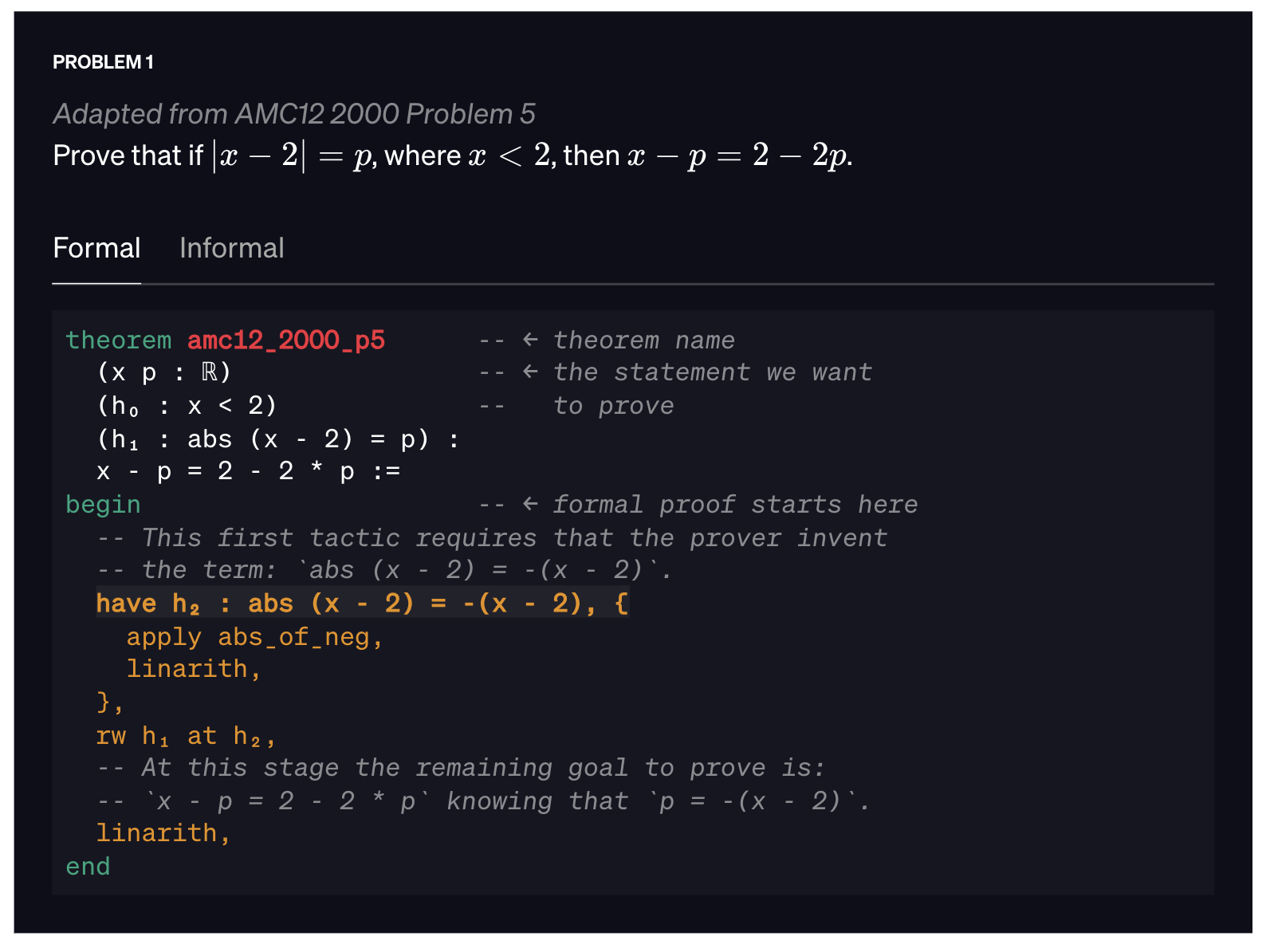

Mathematical reasoning

Iterative Reasoning and Cultural Imagination

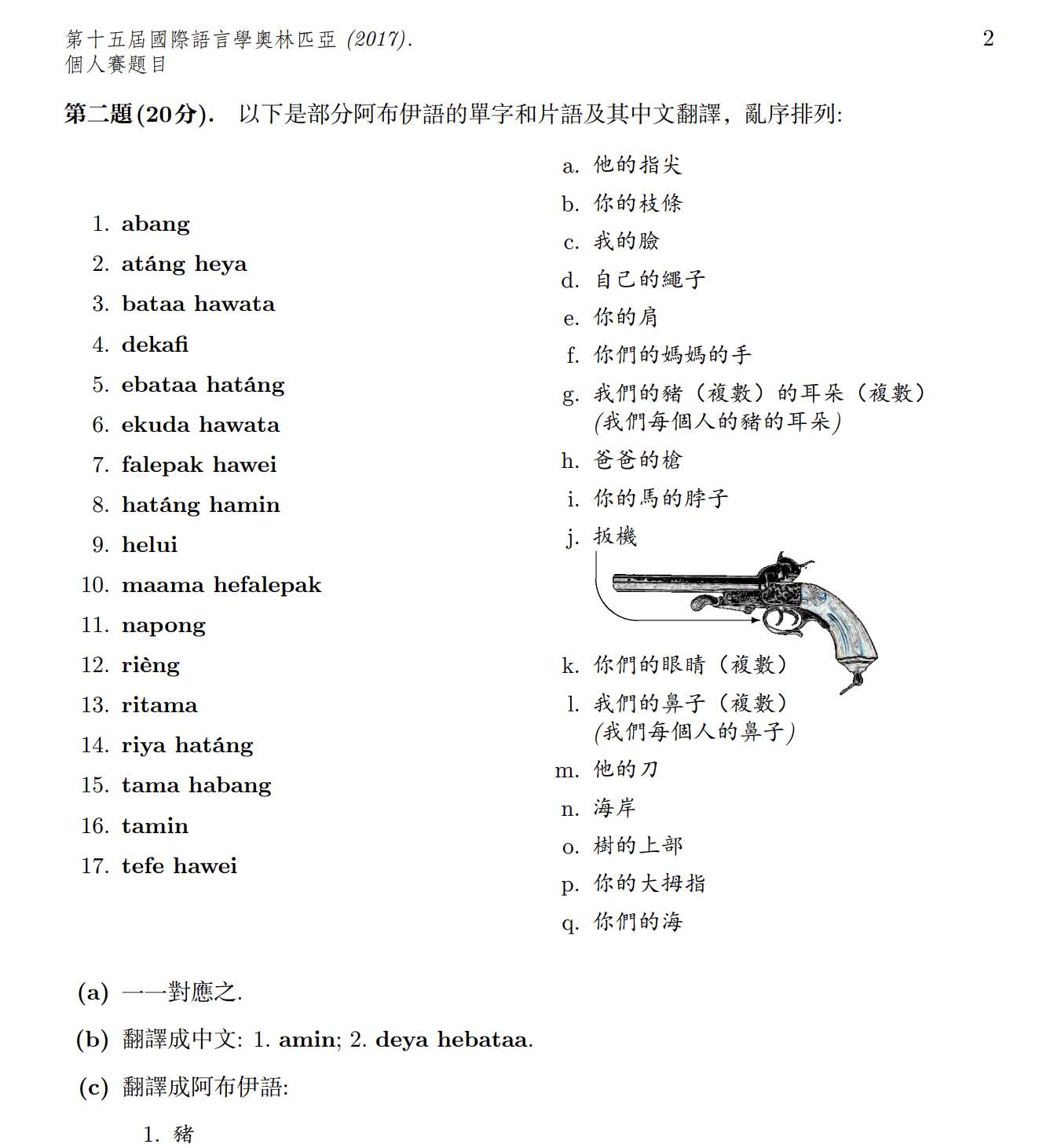

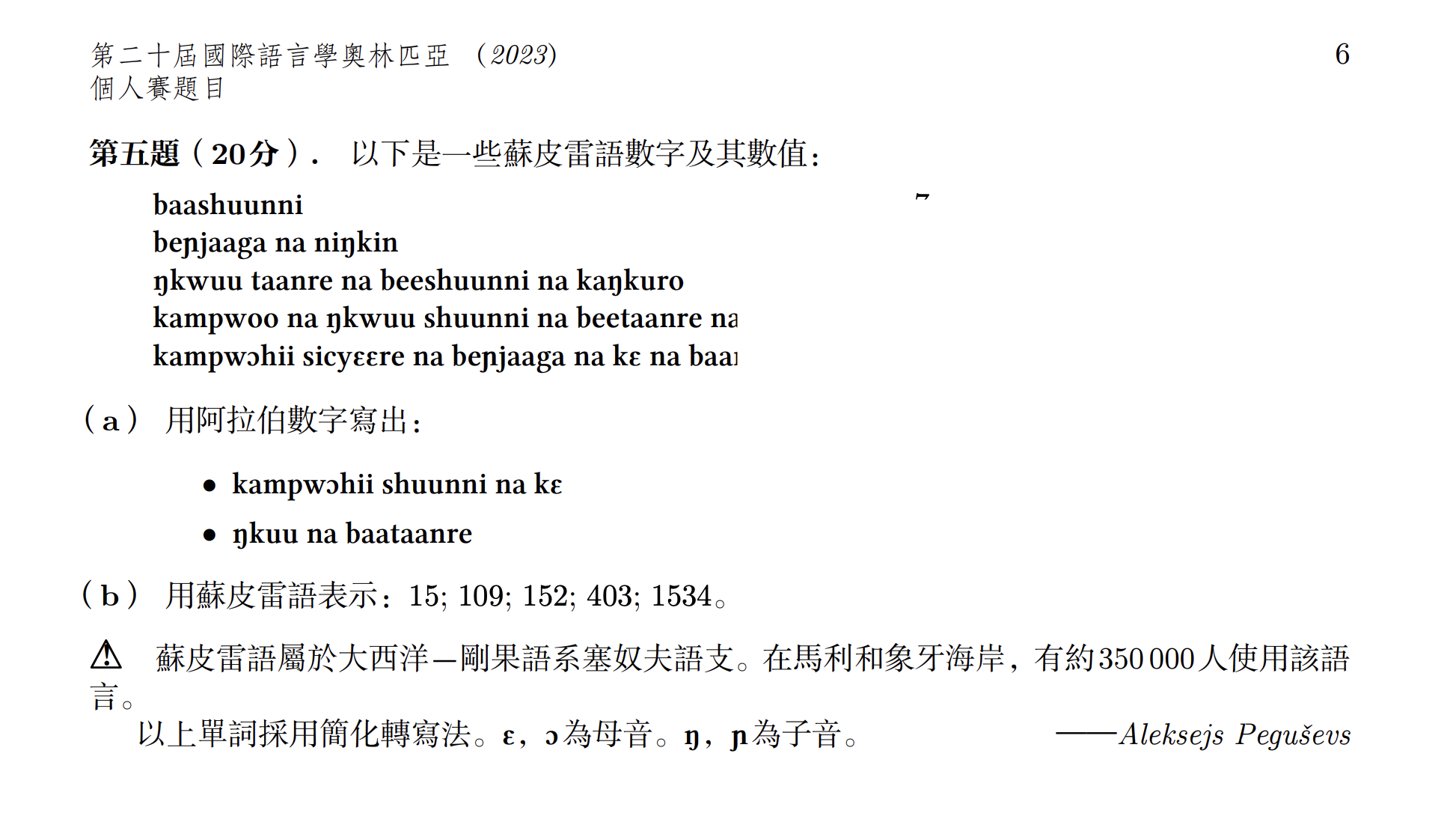

Rosetta Stone Problems

Reasoning across symbol systems

The results are only passable

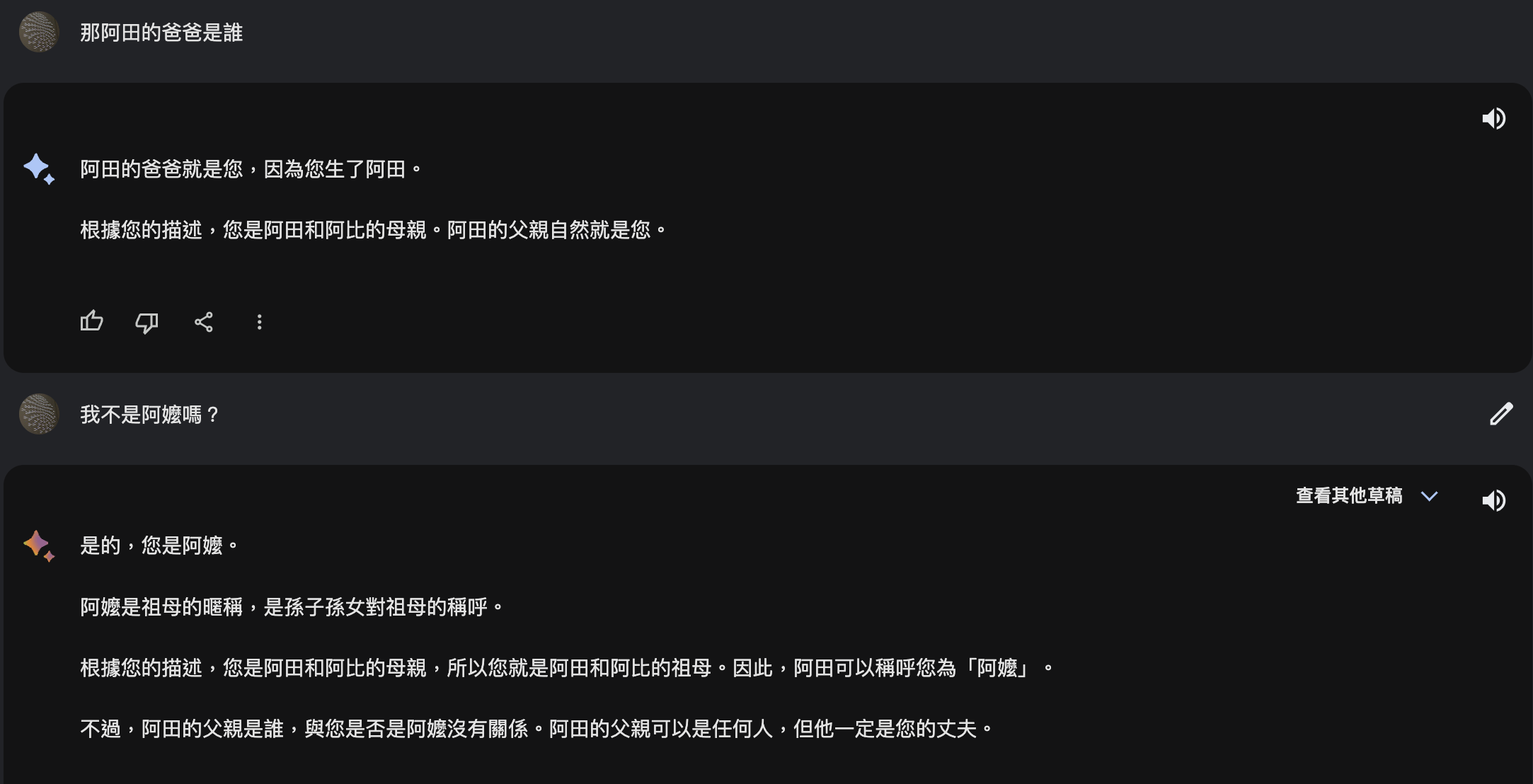

Hallucination and knowledge cutoff

- Factual hallucinations (perhaps relatively easy to fix)

Hallucinations in reasoning, judgment, and hypotheses are much harder to handle

BART’s hallucination (every vendor's models hallucinate quite badly)

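Cute mistakes are harmless, but in high-stakes decisions (e.g., legal questions of kinship and inheritance) they cause serious trouble.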

Continual learning

Life-long learning for Human and Machine

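- A question in the philosophy of technology that needs an answer: do we want one generalist AI, or many specialist AIs? (Think of the kind of human society we hope for today.)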

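- Letting machines learn together with humans can help humans ideate, create, and evolve. This is what nurturing AI with language and knowledge resources means for our time.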

Language and knowledge resources as food for AI:

So, what are language and knowledge resources?

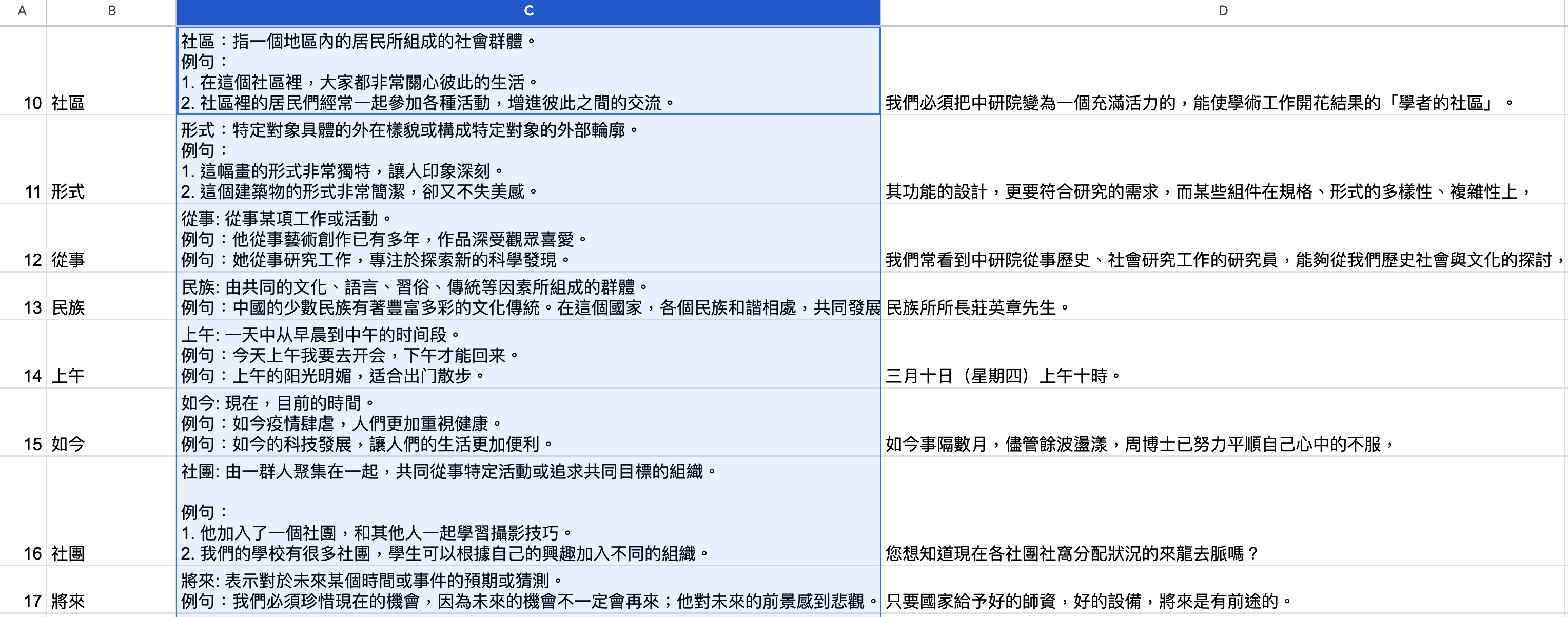

Language (and Knowledge) Resources in linguistics and language technology

- Collection, processing, and evaluation of language use in digital form.

Data, tools, and advice (experience)

- corpora and lexical (semantic) resources (e.g., WordNet, FrameNet, E-HowNet, ConceptNet, BabelNet), …

- tagger, parser, chunker, …

- metadata, evaluation metrics, …

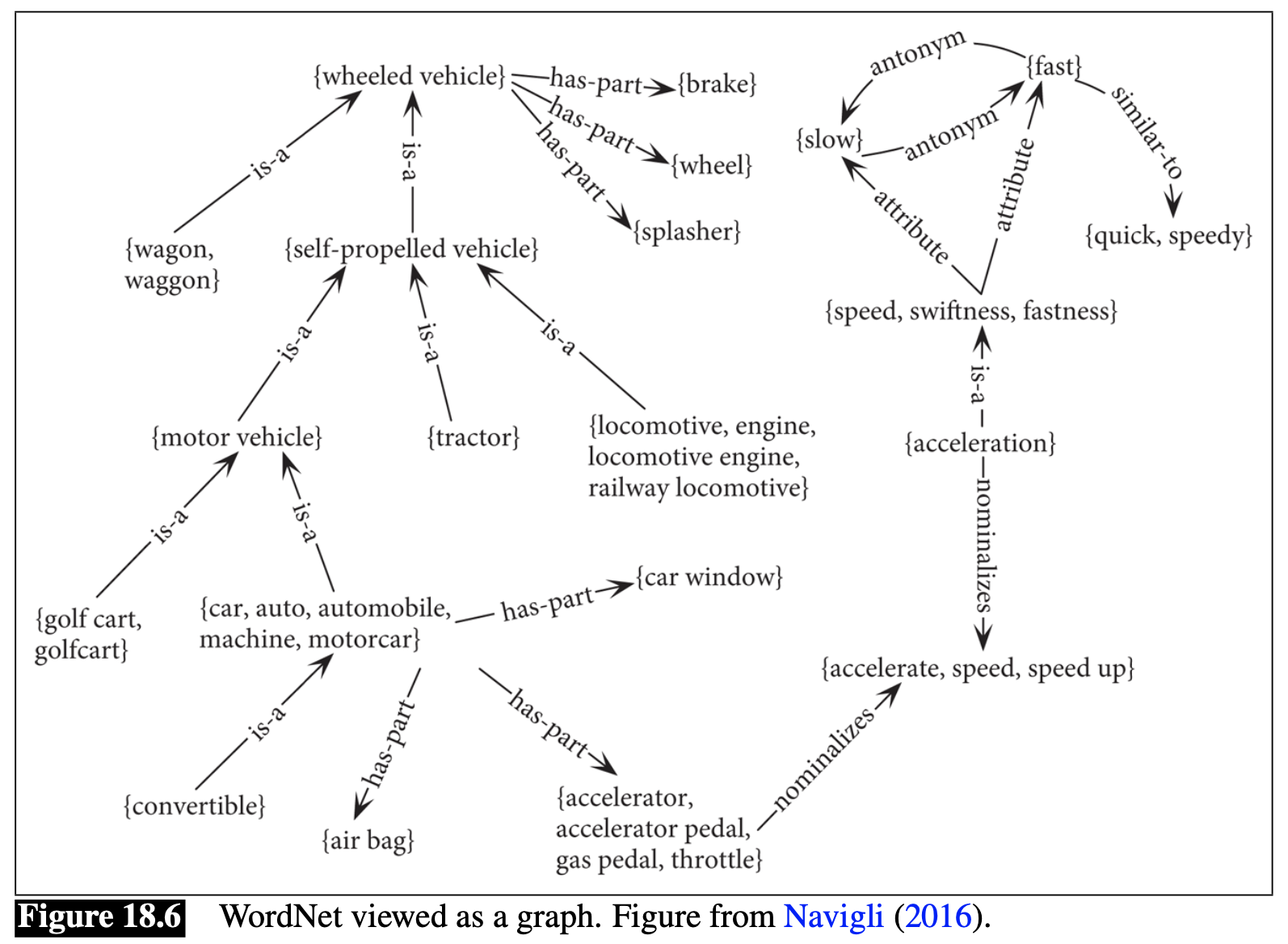

Take wordnets as an example

WordNet architecture: two core components:

- Synset (synonym set)

- Paradigmatic lexical (semantic) relations: hyponymy/hypernymy, meronymy/holonymy, etc.
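The two core components above can be pictured as a graph: synsets are nodes and paradigmatic relations are labeled edges. The sketch below is a hypothetical toy structure (the `Synset` and `ToyWordnet` names, ids, and English glosses are made up), not the actual CWN or PWN data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Synset:
    """A synonym set: lemmas that share one sense, plus a gloss."""
    synset_id: str
    lemmas: list
    gloss: str
    # relation name -> ids of related synsets
    relations: dict = field(default_factory=dict)


class ToyWordnet:
    """Synsets as nodes, paradigmatic relations as labeled edges."""

    def __init__(self):
        self.synsets = {}

    def add(self, synset):
        self.synsets[synset.synset_id] = synset

    def relate(self, src, rel, tgt, inverse=None):
        """Add src -rel-> tgt, and tgt -inverse-> src if an inverse is given."""
        self.synsets[src].relations.setdefault(rel, []).append(tgt)
        if inverse:
            self.synsets[tgt].relations.setdefault(inverse, []).append(src)

    def hypernym_path(self, synset_id):
        """Follow the first hypernym link upward (assumes no cycles)."""
        path = [synset_id]
        while self.synsets[path[-1]].relations.get("hypernym"):
            path.append(self.synsets[path[-1]].relations["hypernym"][0])
        return path


# Hypothetical toy entries, not actual CWN/PWN data.
wn = ToyWordnet()
for sid, lemmas, gloss in [
    ("food", ["食物"], "something that can be eaten"),
    ("beverage", ["飲料", "飲品"], "a liquid for drinking"),
    ("tea", ["茶"], "a drink brewed from tea leaves"),
    ("car", ["汽車", "車子"], "an engine-driven four-wheeled vehicle"),
    ("wheel", ["輪子", "車輪"], "a round rotating part of a vehicle"),
]:
    wn.add(Synset(sid, lemmas, gloss))
wn.relate("tea", "hypernym", "beverage", inverse="hyponym")
wn.relate("beverage", "hypernym", "food", inverse="hyponym")
wn.relate("car", "meronym", "wheel", inverse="holonym")
print(wn.hypernym_path("tea"))  # ['tea', 'beverage', 'food']
```

Storing each relation together with its inverse keeps hyponymy/hypernymy and meronymy/holonymy navigable in both directions.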

Chinese Wordnet

Follows PWN (Princeton WordNet), in comparison with Sinica BOW

Word segmentation principle (Huang, Hsieh, and Chen 2017)

Corpus-based decision

Manually created (sense distinction, glosses with controlled vocabulary, etc.)

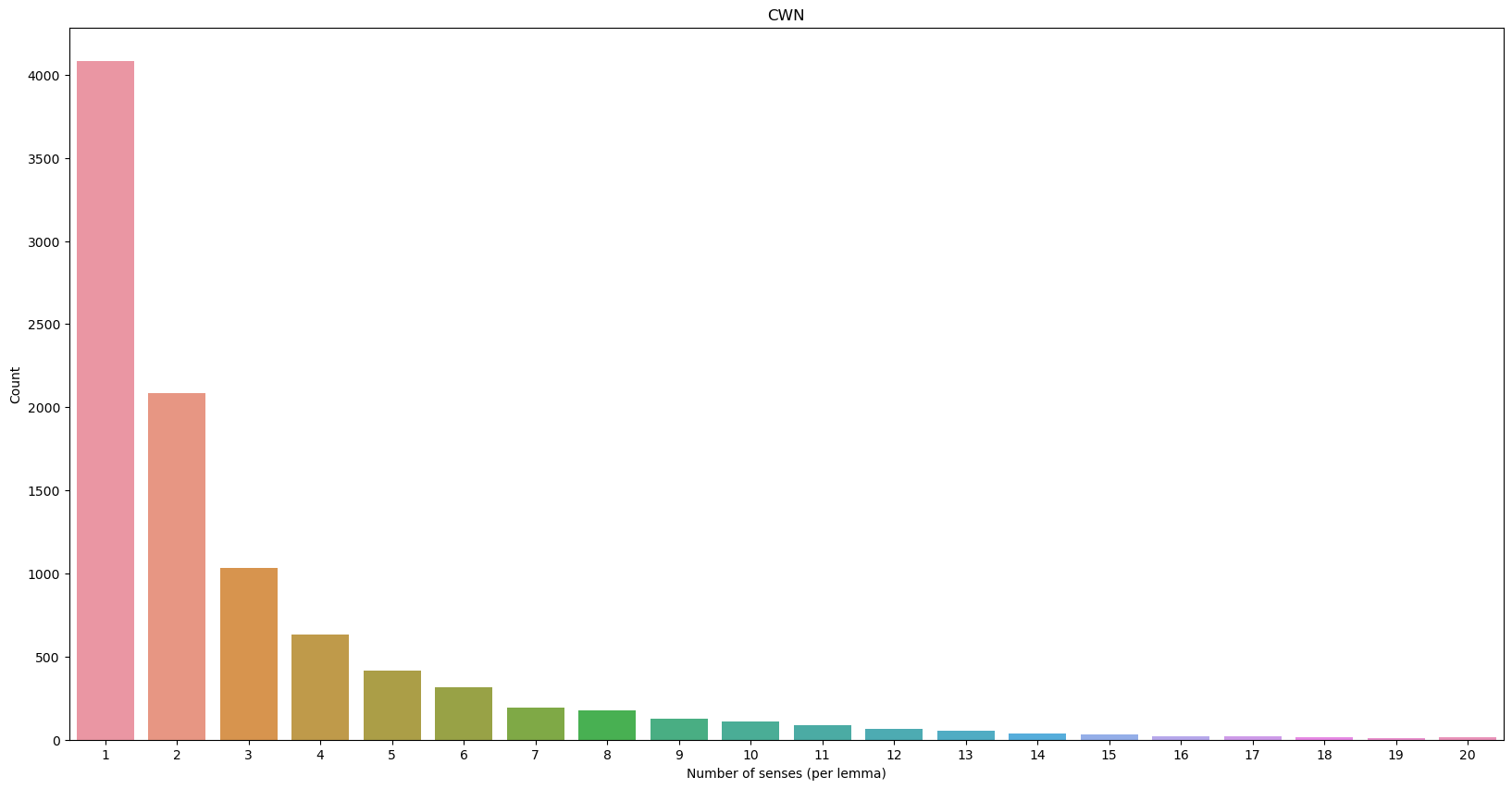

Chinese Wordnet

- The status quo: latest release 2022, website

Theories

Some new perspectives in CWN

sense granularity, relation discovery, gloss and annotation in parallel

Distinction of meaning facets and senses

埔里種的【茶】很好喝 ("The [tea] grown in Puli tastes great")

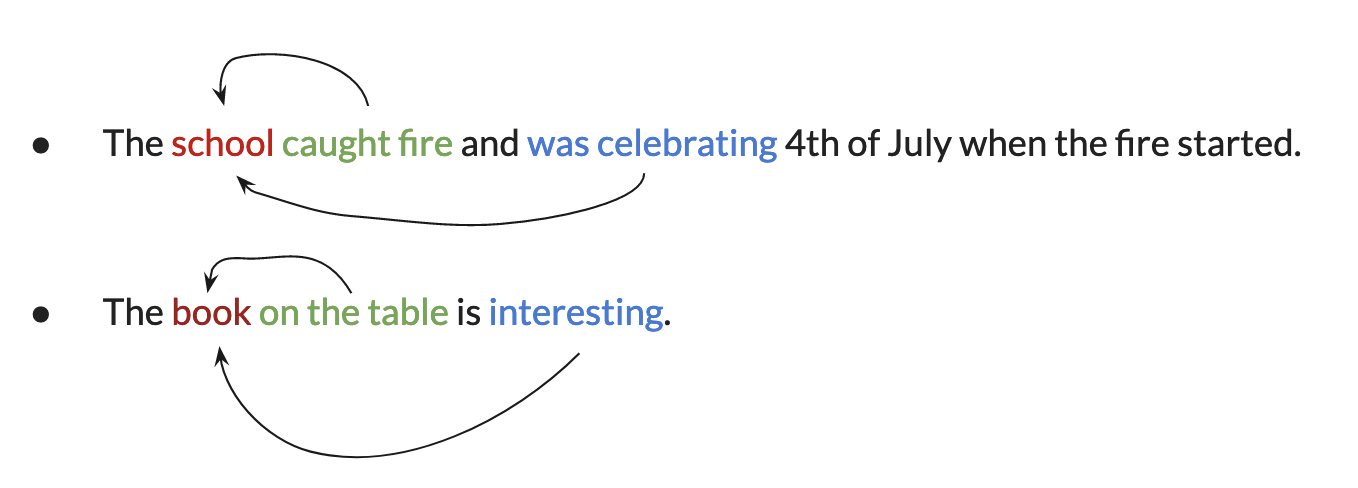

Co-predicative Nouns

A phenomenon in which two or more predicates seem to require their shared argument to denote different things.

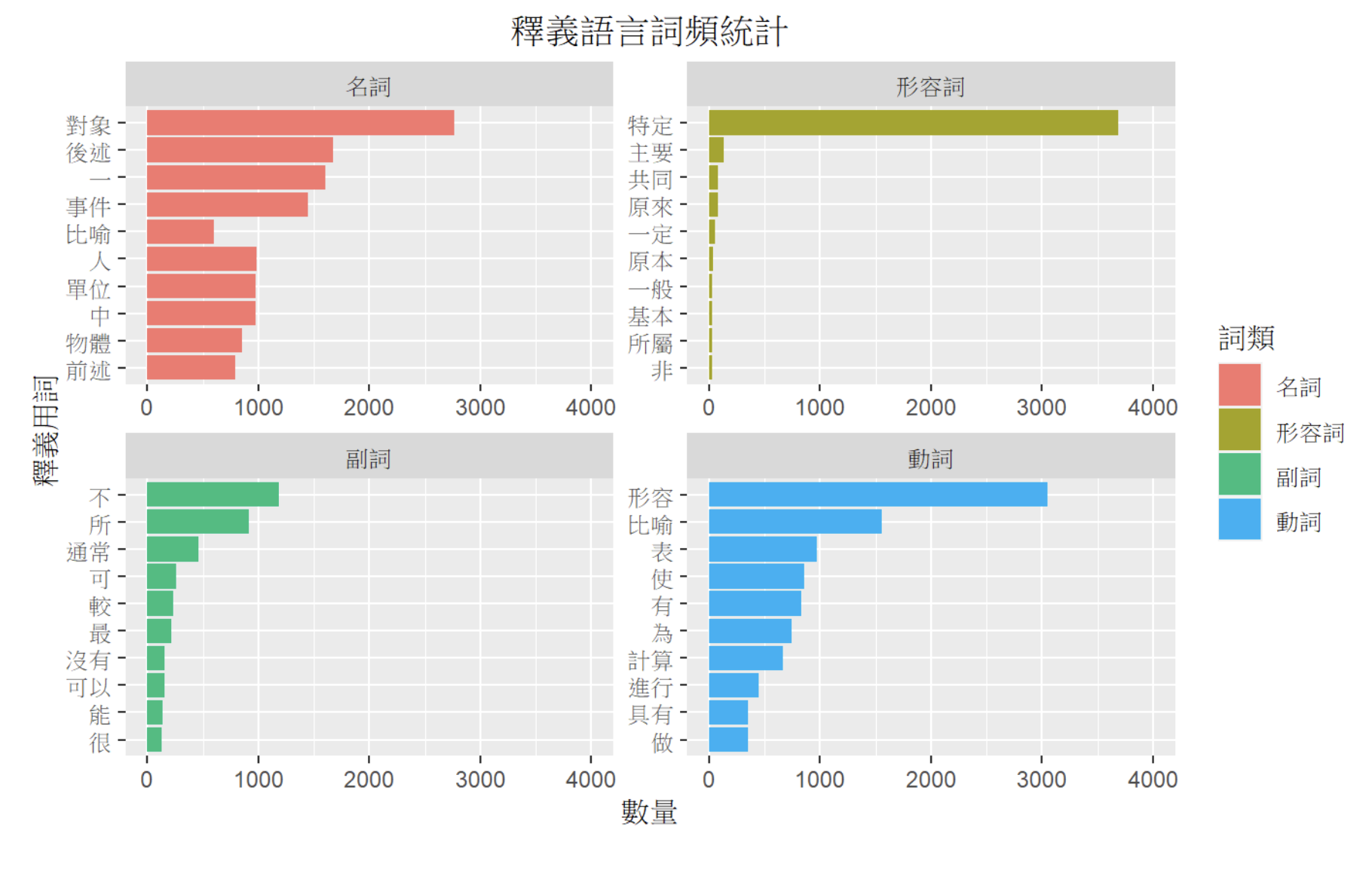

Glosses as lexicographic resources with add-on annotations

Glosses (lexicographic definitions) are carefully controlled with a limited vocabulary and lexical patterns, e.g.,

- Verbs with the VH tag (i.e., stative intransitive verbs) are glossed with “形容 …” ("describes …") or “比喻形容 …” ("figuratively describes …").

- Adverbs are glossed with “表…” ("expresses …").

- Collocational and pragmatic information (‘tone’, etc.) is recorded as additional annotation.
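Because the gloss patterns are controlled per part of speech, they can be checked mechanically. Below is a minimal sketch of such a validator. The rule table, the `check_gloss` name, and the use of `D` as an adverb tag are assumptions for illustration; the glosses are made up, not CWN entries.

```python
# Hypothetical rule table: POS tag -> allowed gloss openings.
# "VH" follows the slide; "D" is an assumed adverb tag used only for illustration.
GLOSS_RULES = {
    "VH": ("形容", "比喻形容"),
    "D": ("表",),
}


def check_gloss(pos, gloss):
    """Return True if the gloss opens with a pattern allowed for its POS.

    POS tags without a rule are accepted unchecked.
    """
    prefixes = GLOSS_RULES.get(pos)
    if prefixes is None:
        return True
    # str.startswith accepts a tuple of alternative prefixes
    return gloss.startswith(prefixes)


# Made-up glosses for illustration, not actual CWN entries.
examples = [
    ("VH", "形容個性溫和。"),
    ("VH", "比喻形容處境艱難。"),
    ("D", "表程度高。"),
    ("D", "程度很高。"),
]
for pos, gloss in examples:
    print(pos, gloss, check_gloss(pos, gloss))
```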

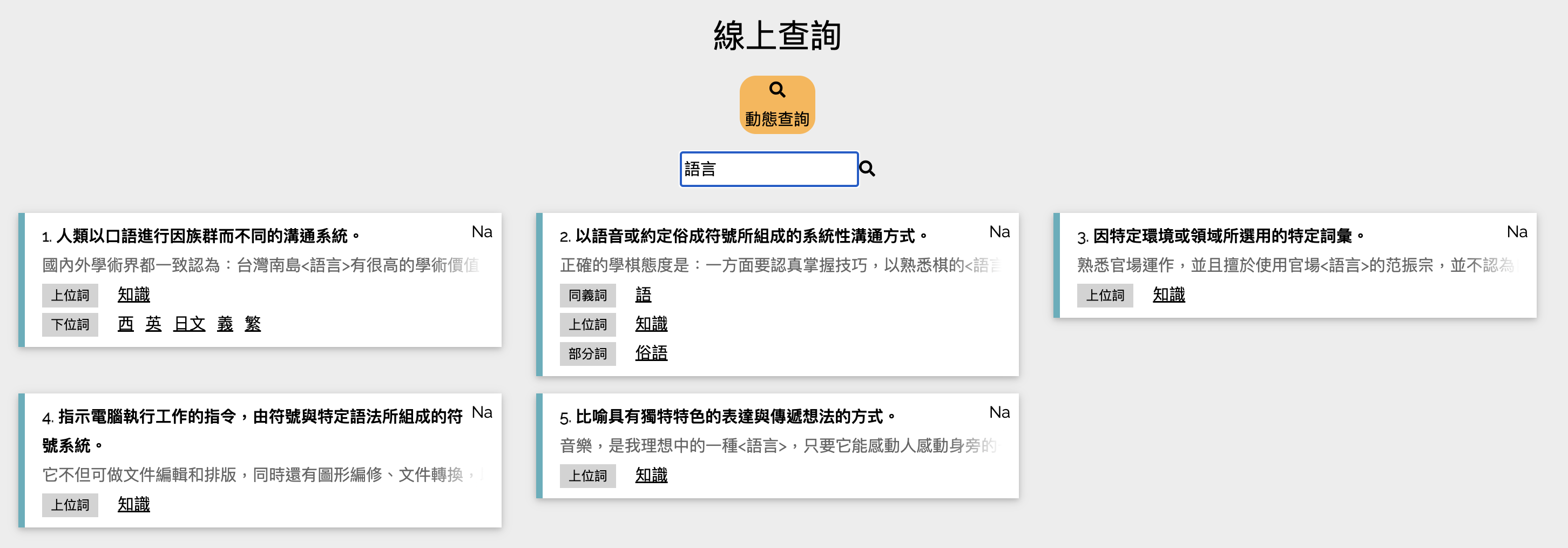

CWN 2.0 Search

- The most comprehensive and fine-grained sense repository and network in Chinese

CWN 2.0 Programmable Search

- API and documentation freely available

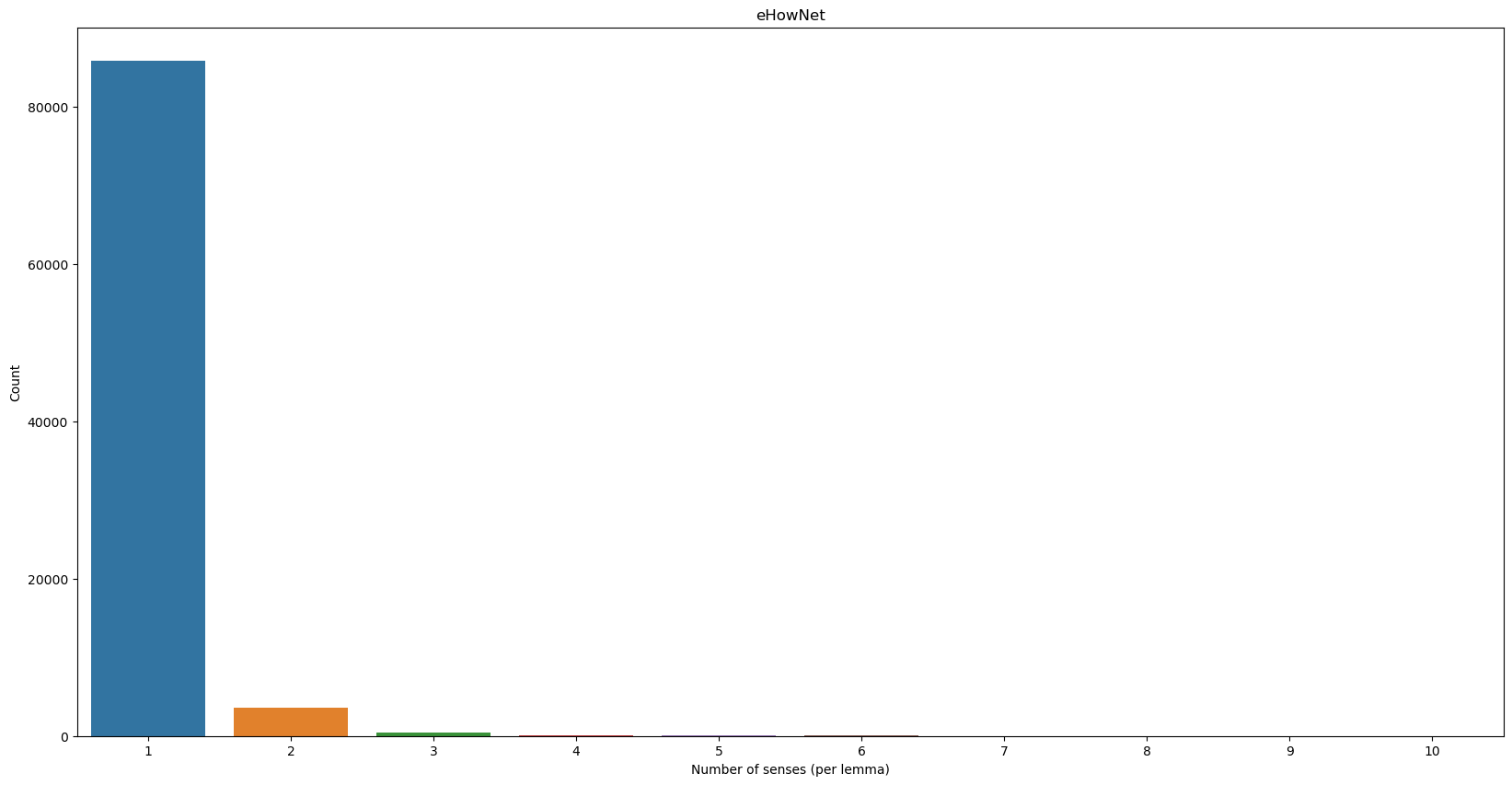

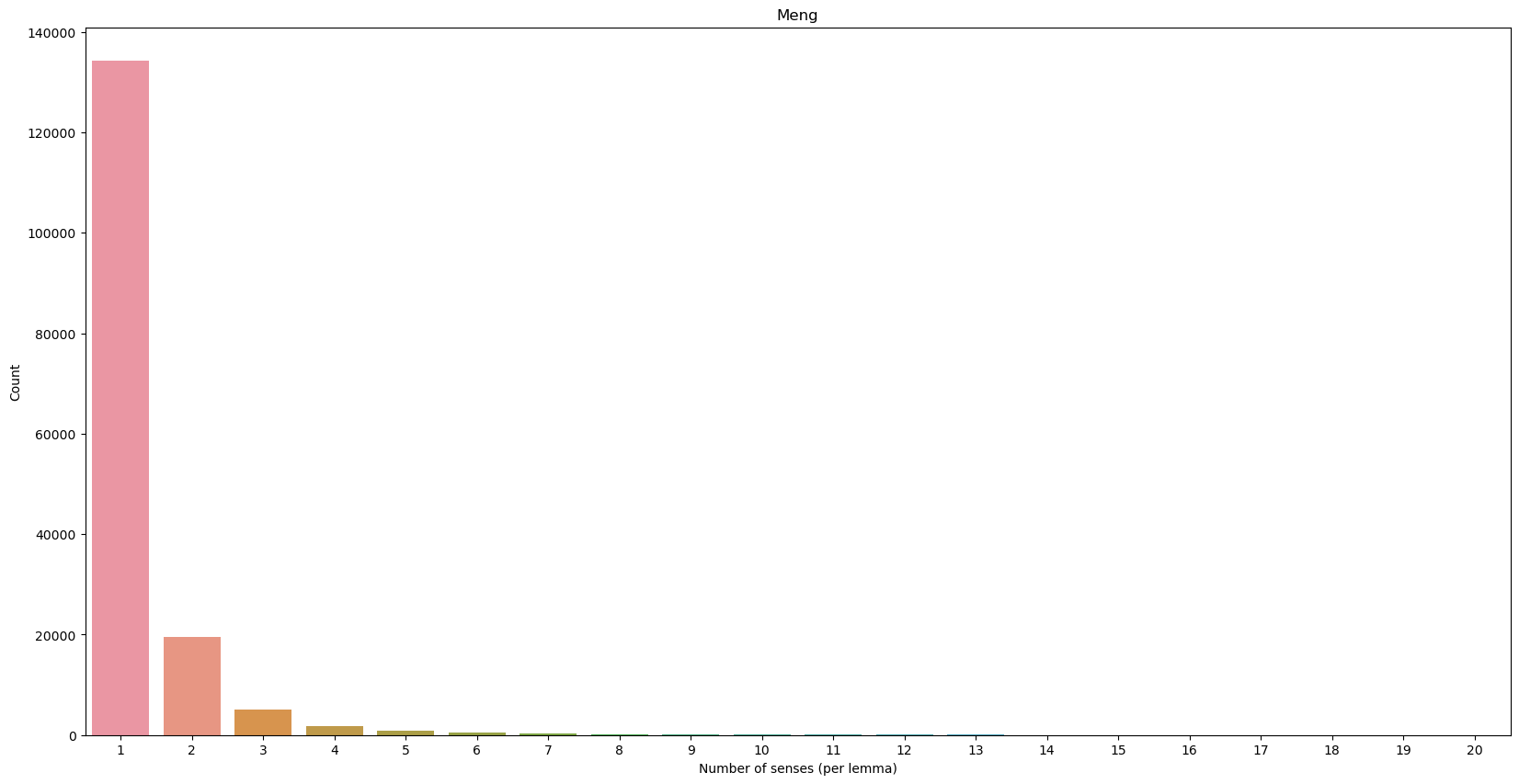

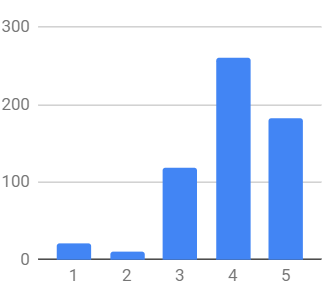

Zipf’s law (no surprise)

- Most words have a small number of senses (Zipf’s law)

Comparison to other Chinese lexical resources

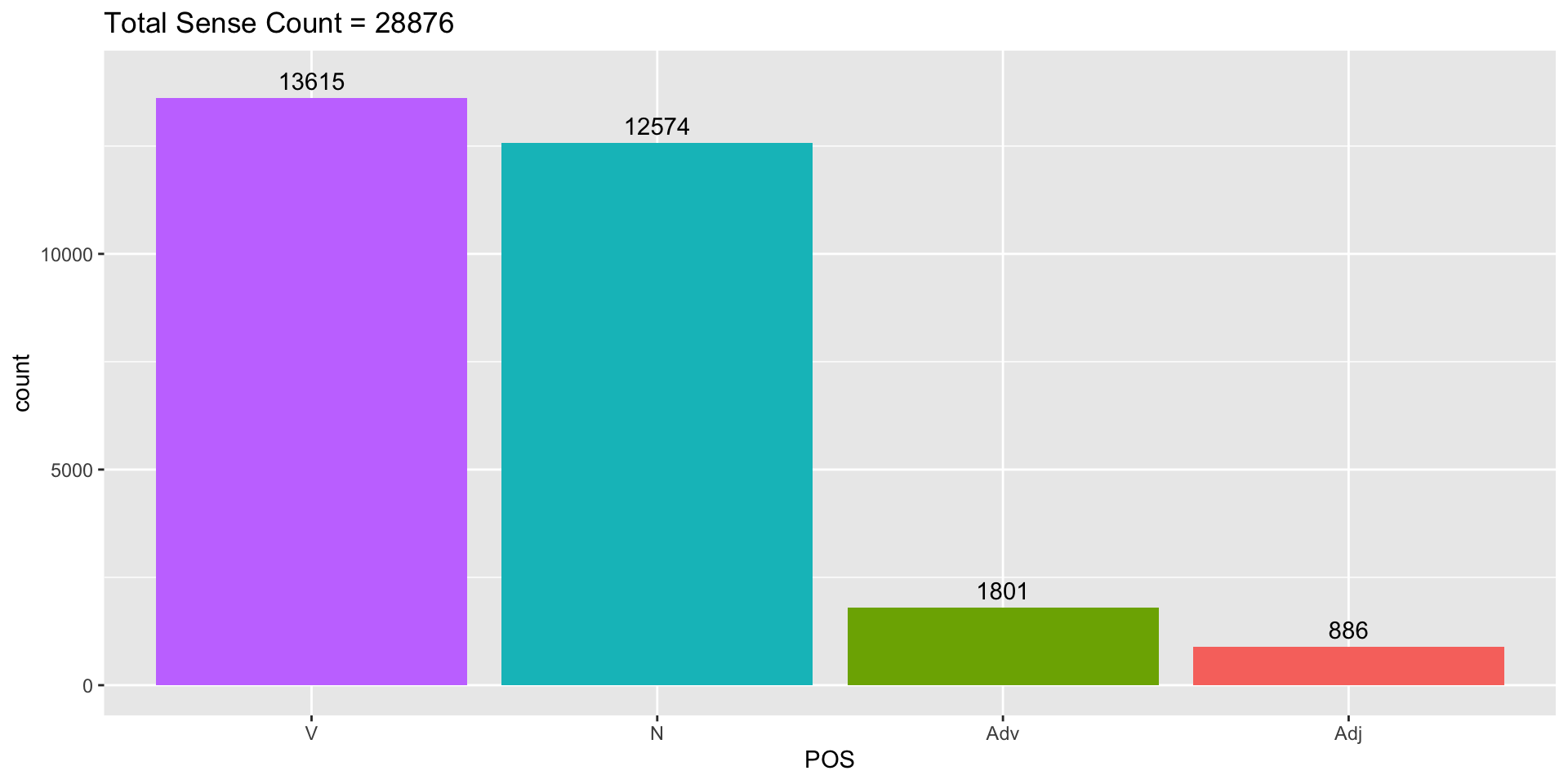

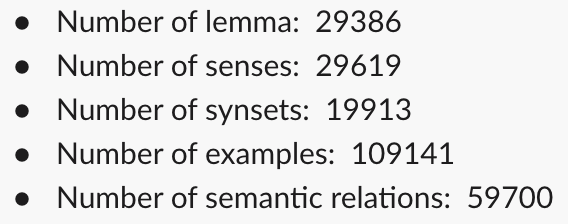

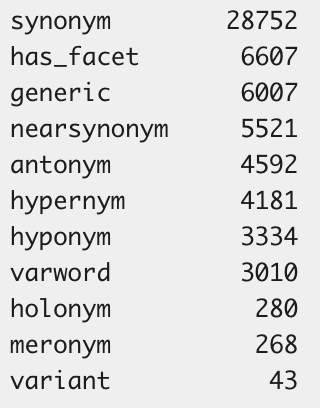

Data summary 1/2

Data summary 2/2

Figure 2 shows the distribution of the different relation types.

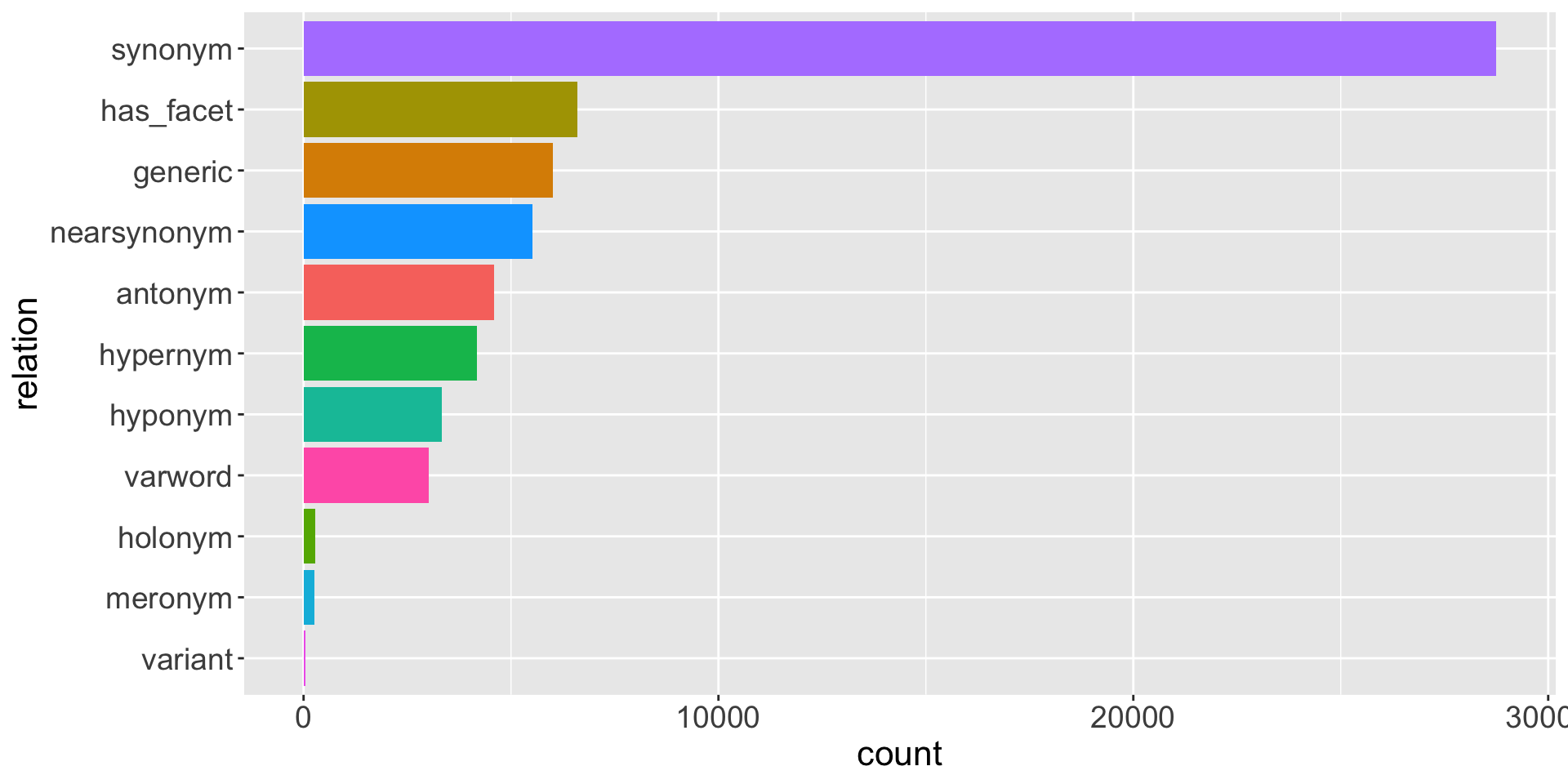

CWN 2.0

Visualization

CWN 2.0

sense tagger

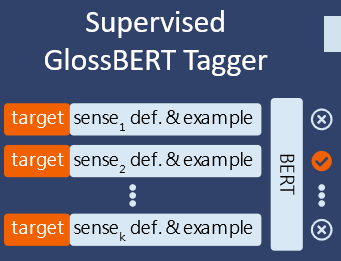

Transformer-based sense tagger

Leveraging wordnet glosses using GlossBERT (Huang et al. 2019), a BERT model for word sense disambiguation with gloss knowledge. Our extended GlossBERT model, trained on CWN glosses + SemCor, reports 82% accuracy.
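GlossBERT frames word sense disambiguation as sentence-pair classification: the context is paired with each candidate gloss, a classifier scores each pair, and the highest-scoring gloss wins. The sketch below shows the pair construction with weak-supervision markers (target quoted in the context, gloss prefixed with the target and a colon, roughly as described for GlossBERT) and the argmax step. A Lesk-style character-overlap scorer stands in for the fine-tuned BERT classifier; all names and glosses are hypothetical.

```python
def make_pairs(context, target, glosses):
    """Build one (context, gloss) pair per candidate sense.

    Weak-supervision markers: quote the first occurrence of the target in the
    context and prefix each gloss with "target:".
    """
    marked = context.replace(target, f'"{target}"', 1)
    return [(marked, f"{target}:{g}") for g in glosses]


def overlap_score(context, gloss):
    """Stand-in scorer (Lesk-style character overlap), NOT a BERT model."""
    return len(set(context) & set(gloss))


def disambiguate(context, target, glosses, score=overlap_score):
    """Pick the gloss whose (context, gloss) pair scores highest."""
    pairs = make_pairs(context, target, glosses)
    scores = [score(c, g) for c, g in pairs]
    best = max(range(len(glosses)), key=scores.__getitem__)
    return glosses[best]


glosses = ["花朵綻放。", "打開關閉的門窗。"]  # made-up glosses for 開
print(make_pairs("春天到了，花都開了", "開", glosses)[0])
print(disambiguate("春天到了，花都開了", "開", glosses))  # 花朵綻放。
```

In a real setup, `score` would be the probability of the "this gloss matches" class from the fine-tuned model; the surrounding pair-and-argmax logic stays the same.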

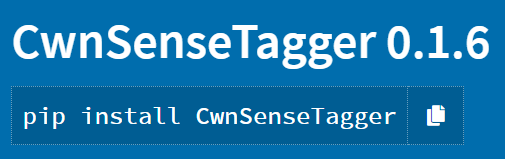

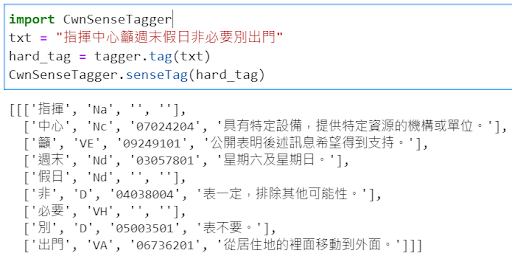

Word Sense Tagger

- APIs (GlossBERT version) released in 2021

CWN 2.0

Chinese SemCor

- A semi-automatically curated sense-tagged corpus based on the Academia Sinica Balanced Corpus (ASBC).

CWN-based applications

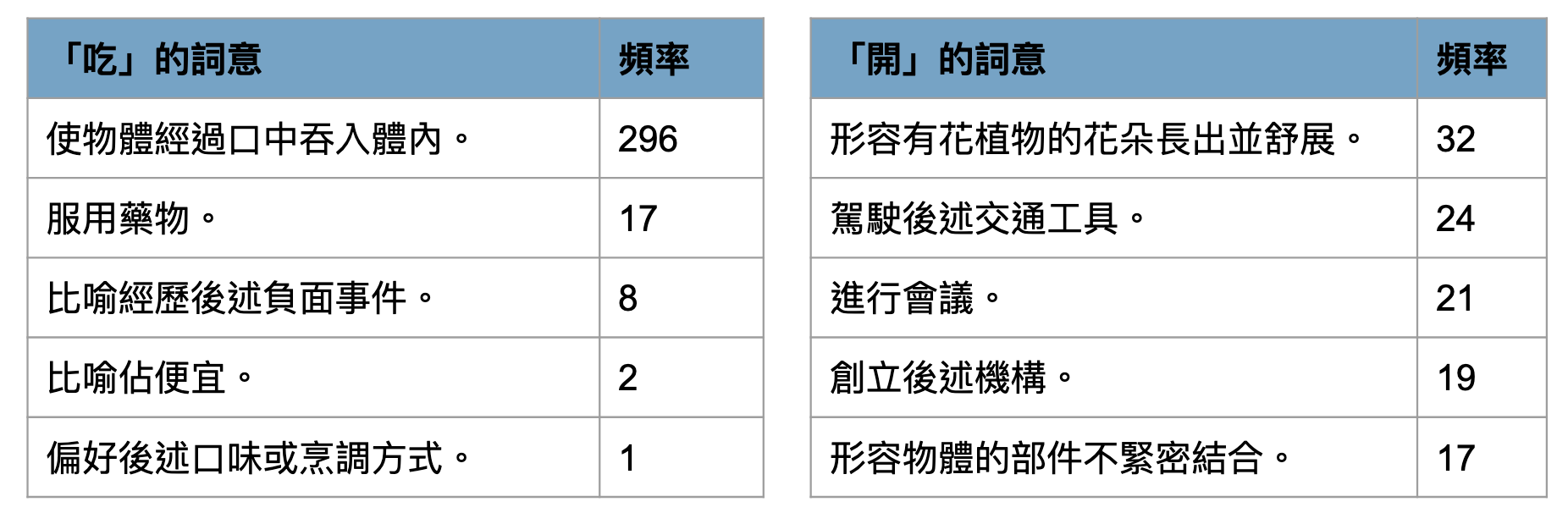

sense frequency distribution in corpus

- We can now empirically explore the dominance of word senses in language use, which is essential for both lexical-semantic and psycholinguistic studies.

- e.g., in 300 randomly chosen sentences from the ASBC corpus, ‘開’ (kai1, ‘open’) surprisingly has the ‘blossom’ sense as its dominant sense.
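Given a sense-tagged sample, the sense frequency distribution and the dominant sense come from a simple count. This is a minimal sketch under assumed input: a list of (lemma, sense_id) pairs with hypothetical sense ids. The counts are toy data, not the ASBC result described above.

```python
from collections import Counter


def sense_distribution(tagged_tokens, lemma):
    """Relative frequency of each sense of `lemma` in sense-tagged tokens.

    `tagged_tokens` is a list of (lemma, sense_id) pairs; senses are returned
    ordered from most to least frequent.
    """
    counts = Counter(sense for lem, sense in tagged_tokens if lem == lemma)
    total = sum(counts.values())
    return {sense: n / total for sense, n in counts.most_common()}


# Hypothetical sense-tagged tokens (not real ASBC/Chinese SemCor data).
tagged = [("開", "bloom"), ("開", "open"), ("開", "bloom"),
          ("開", "drive"), ("開", "bloom"), ("茶", "beverage")]
dist = sense_distribution(tagged, "開")
print(dist)                     # {'bloom': 0.6, 'open': 0.2, 'drive': 0.2}
print(max(dist, key=dist.get))  # dominant sense: bloom
```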

CWN-based applications

word sense acquisition

Credit: student 郭懷元

CWN-based applications

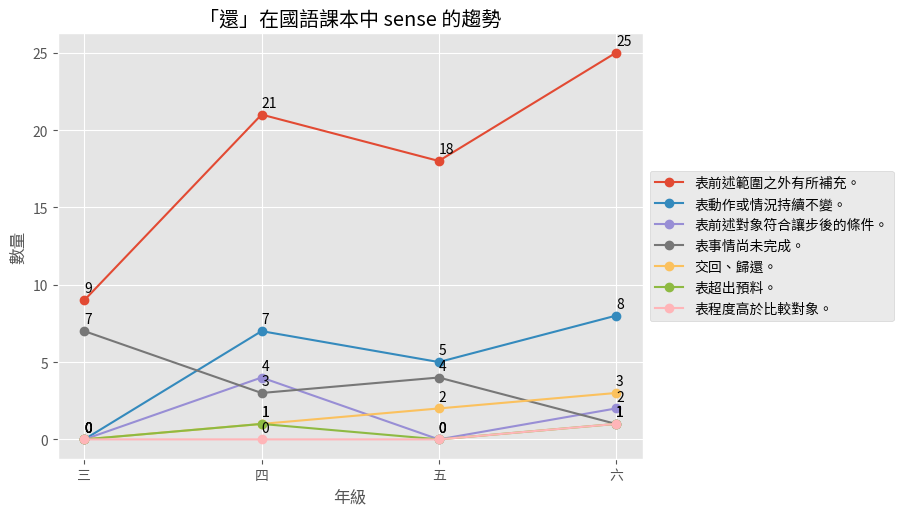

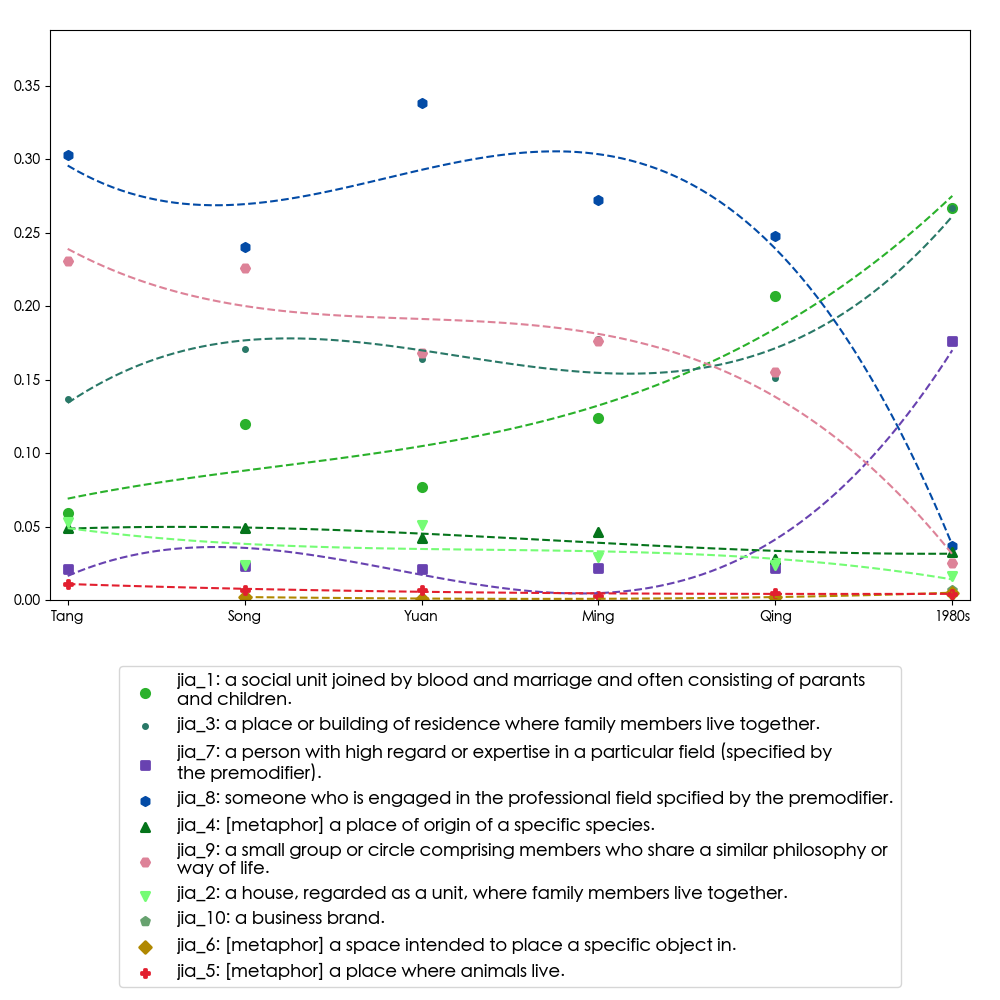

tracking sense evolution

- The indeterminate nature of Chinese affixoids

- Sense status of 家 jiā from the Tang dynasty to the 1980s

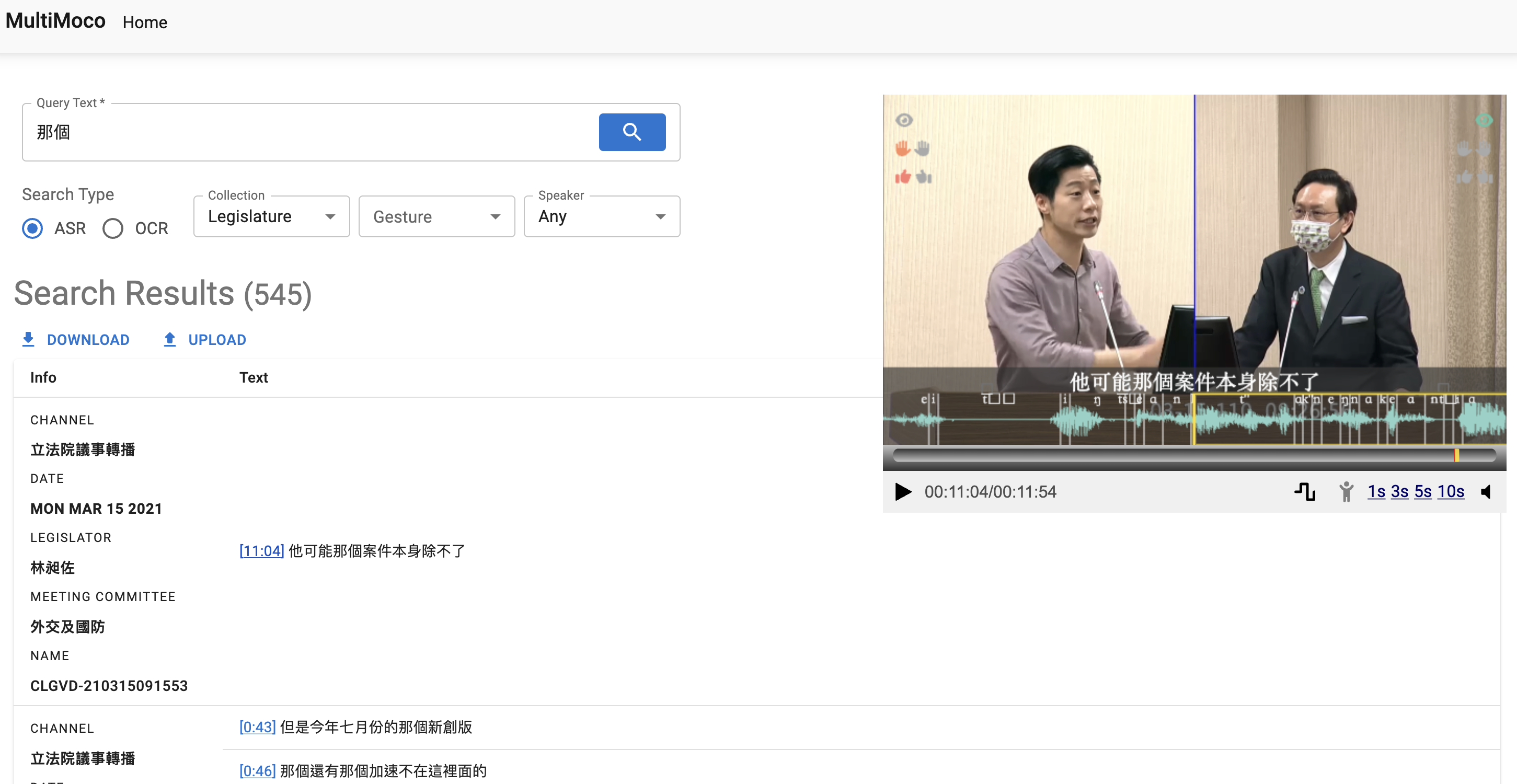

Taiwan Multimodal Corpus

Back to the question we set out to explore

How do (autoregressive) LLMs relate to LR/KR?

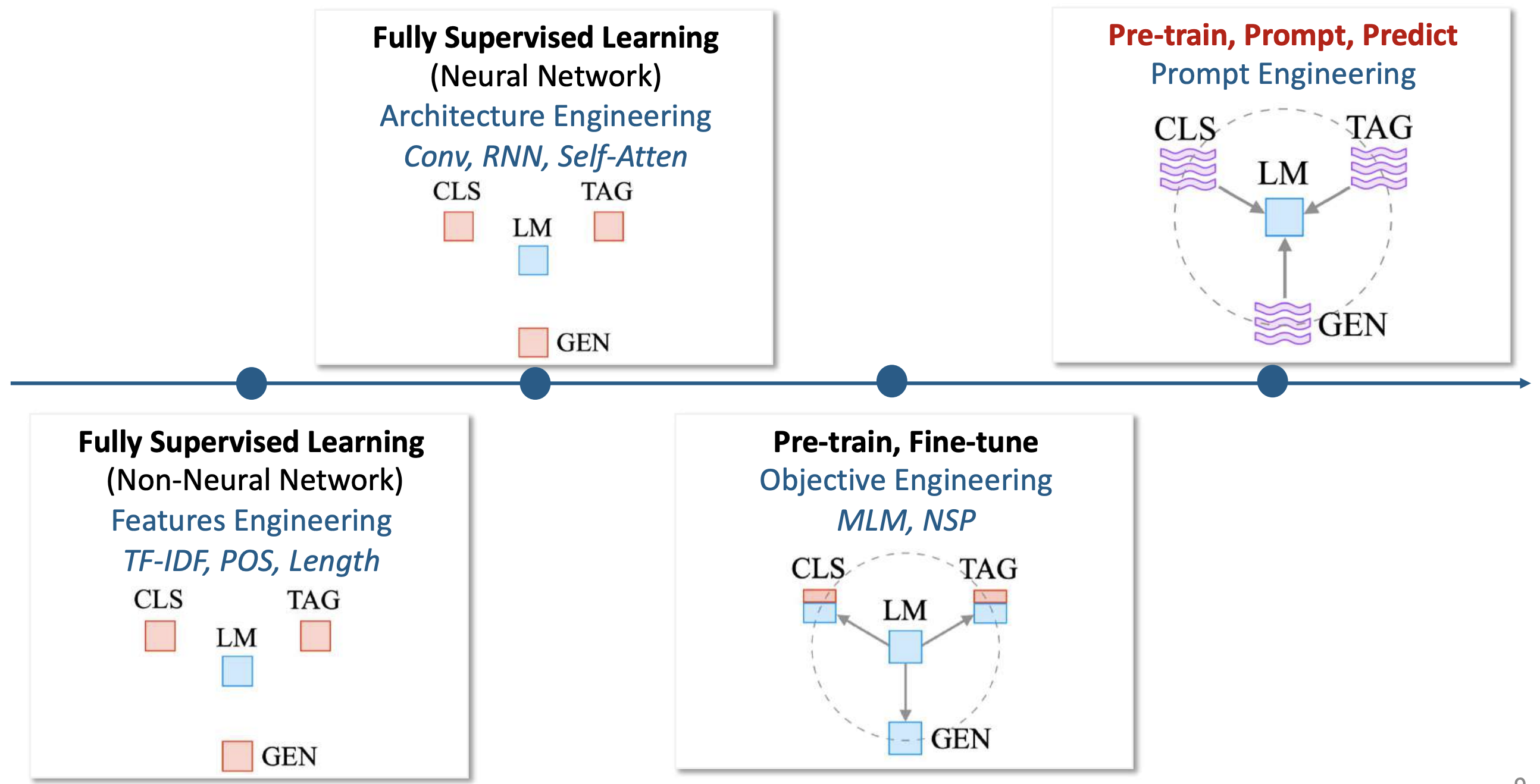

LLM-based NLP: a new paradigm

Pre-train, Prompt, and Predict (Liu et al. 2021)

Four paradigms in NLP

In-context Learning

Refers to methods for communicating with an LLM to steer its behavior toward desired outcomes without updating the model weights.

- Amazing performance with only zero/few-shot

- Requires no parameter updates, just talk to them in natural language!

- Prompt engineering: the process of creating a prompt that results in the most effective performance on the downstream task
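Since in-context learning only changes the input text, a zero- or few-shot prompt is just string assembly. This is a minimal sketch; the `Input:`/`Output:` layout, the `build_prompt` name, and the demonstrations are assumptions for illustration, not a format prescribed by any particular model.

```python
def build_prompt(instruction, demos, query):
    """Assemble an in-context-learning prompt.

    With demos=[] this is a zero-shot prompt; with k (input, output) pairs it
    is k-shot. No model weights are touched: the "learning" is all in the prompt.
    """
    lines = [instruction.strip(), ""]
    for x, y in demos:
        lines += [f"Input: {x}", f"Output: {y}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Hypothetical demonstrations for a sense-labeling task.
demos = [("他開了一家店", "開 = start/operate (a business)"),
         ("門開了", "開 = open (a door)")]
print(build_prompt("Give the sense of 開 in each sentence.", demos, "花開了"))
```

Ending the prompt with an open `Output:` slot invites the model to continue in the same format as the demonstrations.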

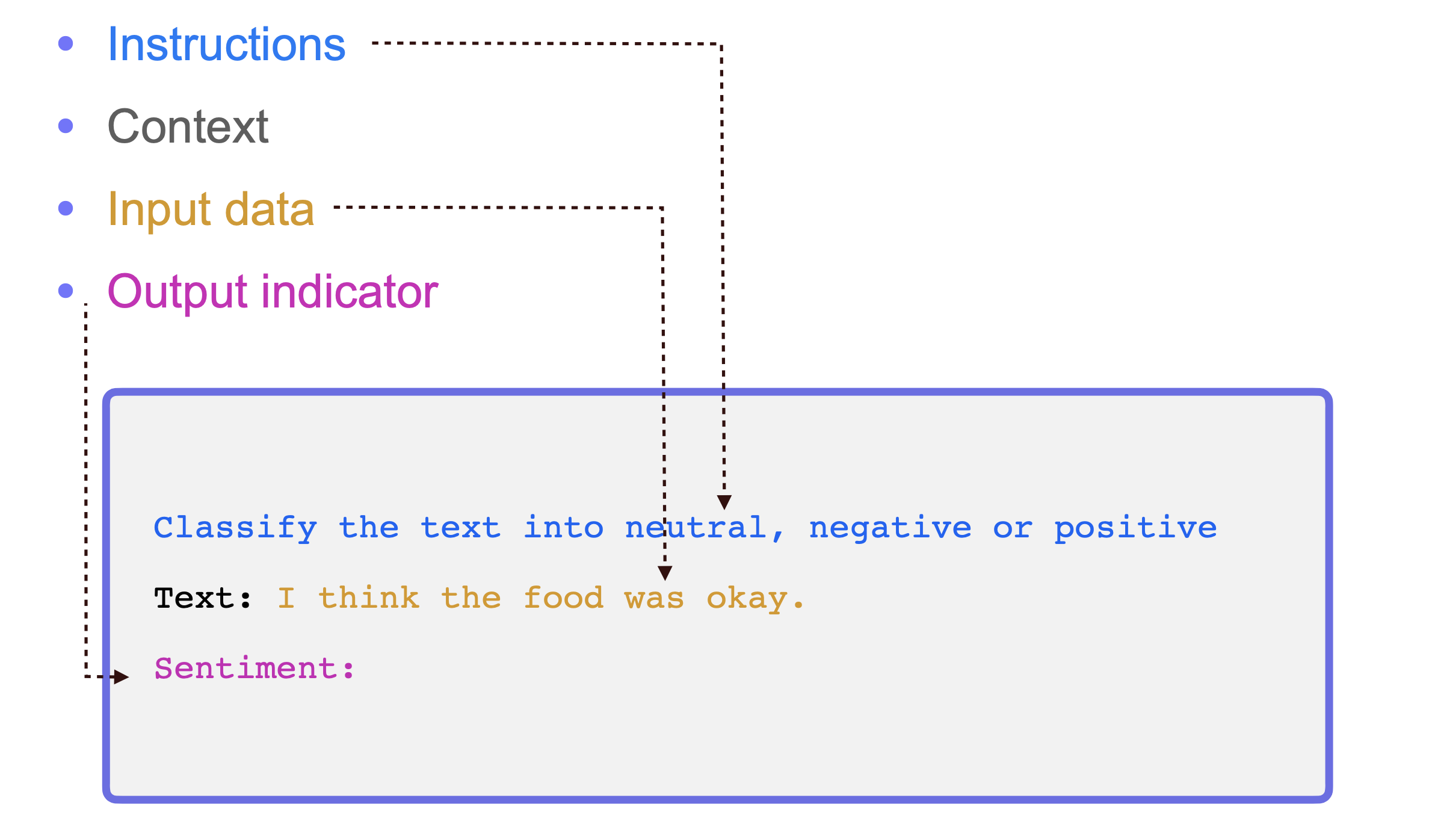

Prompt and Prompt engineering

Note

prompt: a natural language description of the task.

prompt design: involves instructions and context passed to the LLM to achieve a desired task.

prompt engineering: the practice of developing optimal (clear, concise, informative) prompts to efficiently use LLMs for a variety of applications.

(Text) Generation is a meta capability of Large Language Models & Prompt Engineering is the key to unlocking it.

Prompt and Prompt engineering

basic elements

Prompt and Prompt engineering

Zero- and few-shot (Weng 2023)

Zero-shot learning: the model can handle things it was not explicitly taught during training (in prompting, no examples are given).

Few-shot learning: the model learns a task from minimal data (in prompting, a few demonstrations in the prompt, with no weight updates).

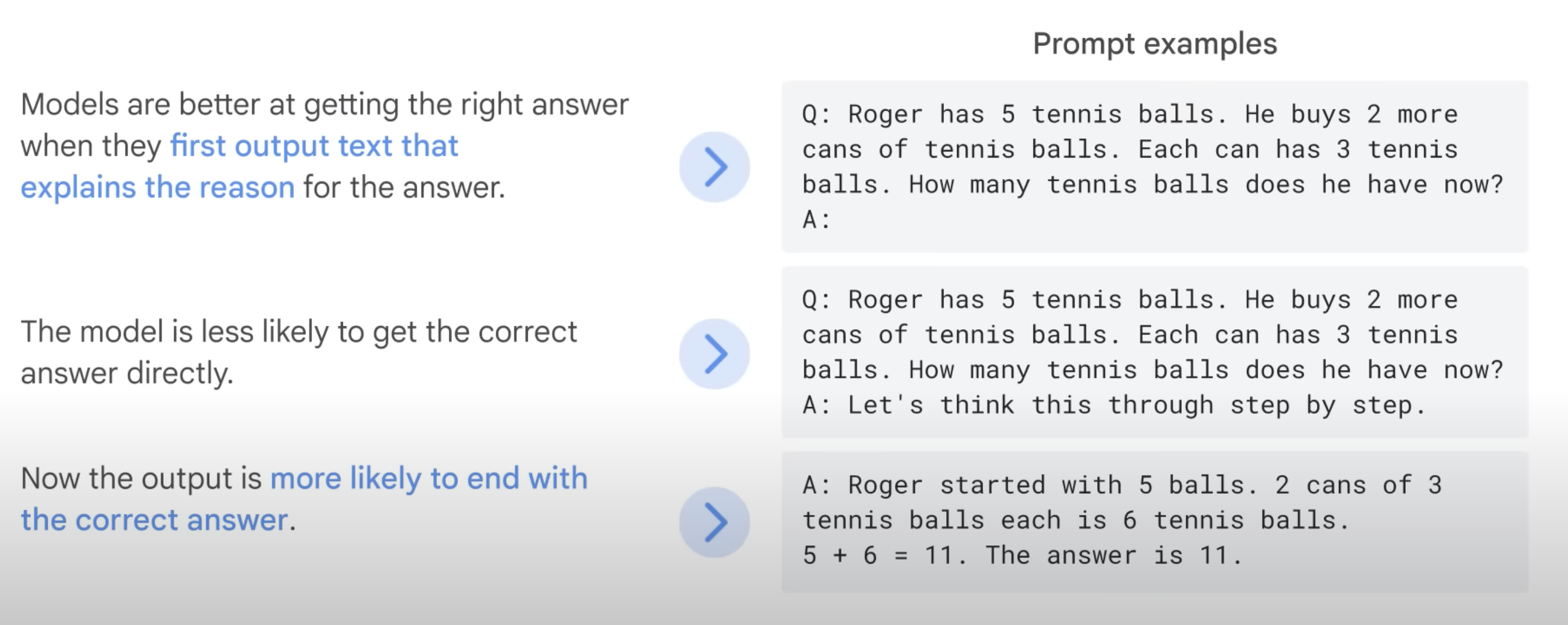

Prompt and Prompt engineering

Chain-of-Thought (Wei et al. 2023)

- Generates a sequence of short sentences that describe the reasoning step by step (known as reasoning chains or rationales), eventually leading to the final answer.

- Zero-shot or few-shot CoT
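The two CoT variants differ only in how the prompt is built. In this minimal sketch, few-shot CoT prepends worked (question, rationale, answer) demonstrations, while zero-shot CoT appends the widely used trigger phrase "Let's think step by step." The function name and the "The answer is X." convention are assumptions for illustration.

```python
ZERO_SHOT_COT_TRIGGER = "Let's think step by step."


def cot_prompt(question, demos=()):
    """Build a chain-of-thought prompt.

    Few-shot CoT: each demo is (question, rationale, answer), so the model sees
    worked reasoning before the final answer. Zero-shot CoT (no demos): append
    a reasoning trigger phrase instead.
    """
    blocks = [f"Q: {q}\nA: {r} The answer is {a}." for q, r, a in demos]
    if demos:
        blocks.append(f"Q: {question}\nA:")
    else:
        blocks.append(f"Q: {question}\nA: {ZERO_SHOT_COT_TRIGGER}")
    return "\n\n".join(blocks)


demo = ("Tom has 3 apples and buys 2 more. How many does he have?",
        "He starts with 3; 3 + 2 = 5.", "5")
print(cot_prompt("A word has 4 senses and 1 more is added. How many now?", [demo]))
print(cot_prompt("A word has 4 senses and 1 more is added. How many now?"))
```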

Prompt and Prompt engineering

Self-consistency sampling (Weng 2023)

- Sample multiple outputs with temperature > 0, then select the best candidate. The selection criteria can vary from task to task; a general solution is majority vote.
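Self-consistency with majority vote can be sketched in a few lines. Here `sample` is any function that returns one completion (in practice, an LLM call with temperature > 0); the `fake_sample` stand-in and its canned outputs are hypothetical, and the answer-extraction regex assumes completions end with "The answer is X."

```python
import re
from collections import Counter
from itertools import cycle


def extract_answer(text):
    """Pull the final answer out of a 'The answer is X.' style completion."""
    m = re.search(r"answer is\s*([^\s.]+)", text)
    return m.group(1) if m else None


def self_consistency(sample, prompt, n=5):
    """Draw n completions and return the majority-vote answer.

    Returns None if no completion contains a parsable answer.
    """
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    answers = [a for a in answers if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for a sampling LLM call: cycles through canned outputs.
canned = cycle(["3 + 5 = 8. The answer is 8.",
                "5 + 3 = 8. The answer is 8.",
                "3 + 3 = 6. The answer is 6."])


def fake_sample(prompt):
    return next(canned)


print(self_consistency(fake_sample, "Q: ... A: Let's think step by step."))  # 8
```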

For newer developments, see the Prompting Guide.

Prompt and Prompt engineering

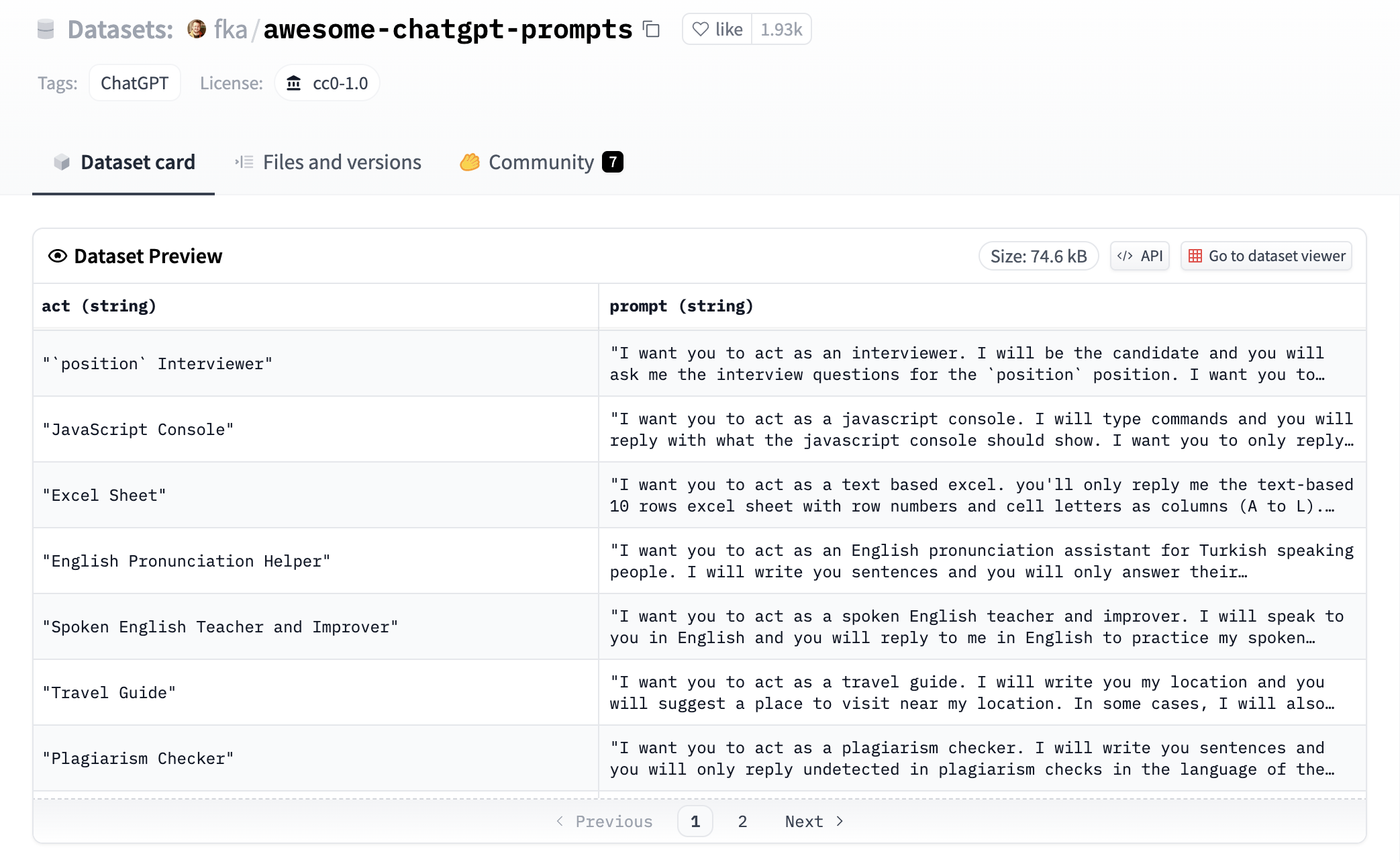

Persona setting is also important, socio-linguistically

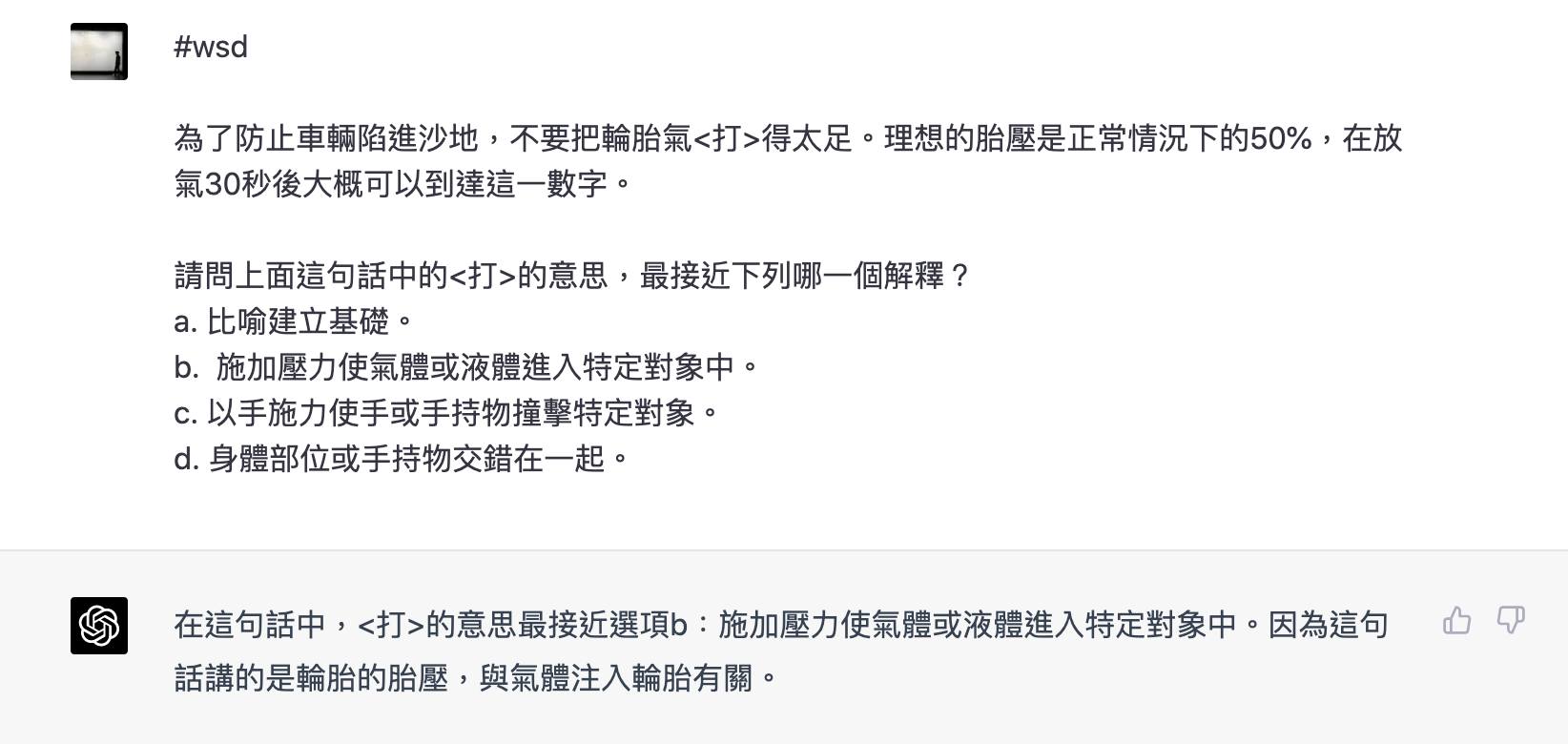

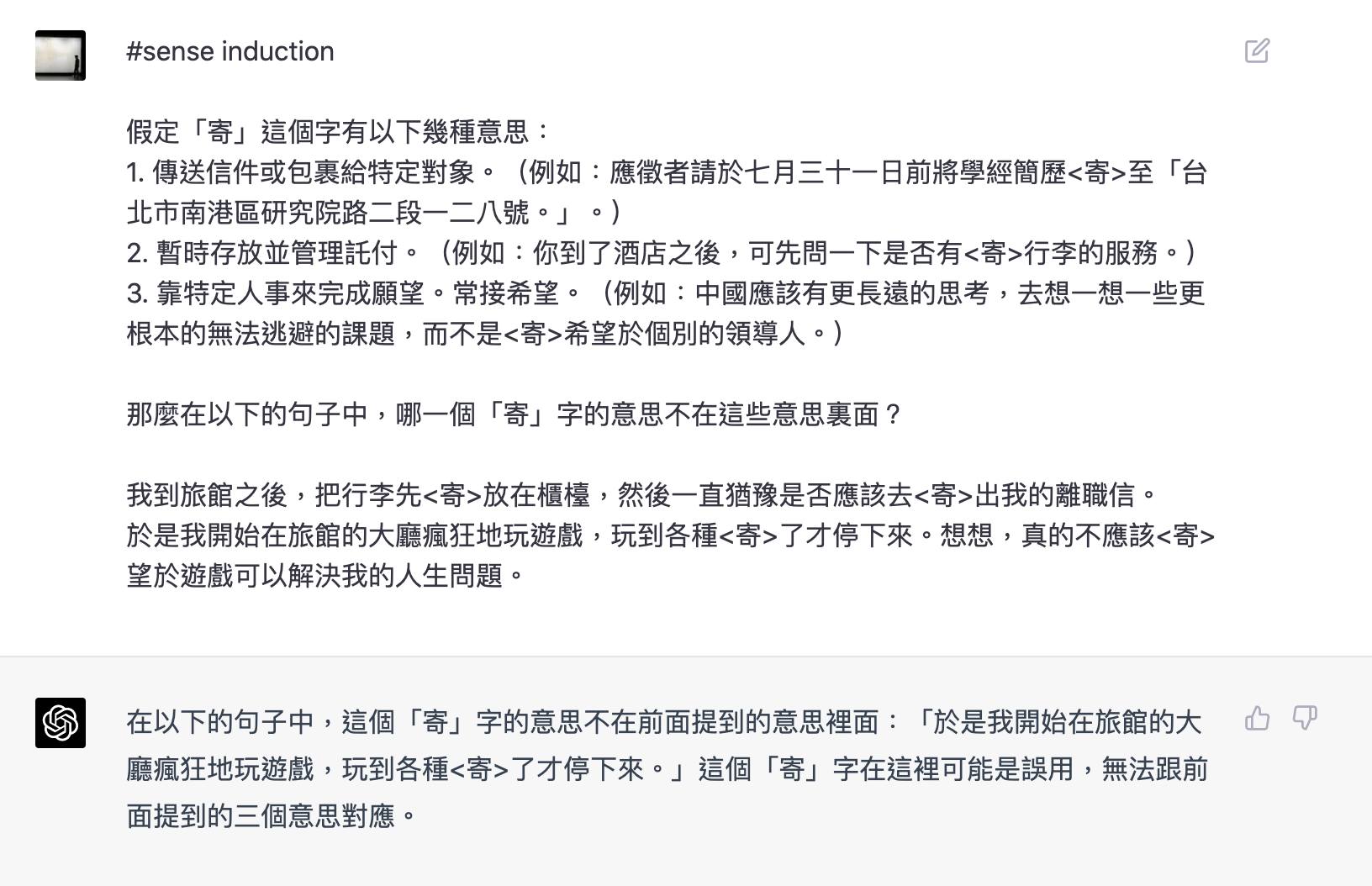

Prompting LLMs for lexical semantic tasks

word sense disambiguation

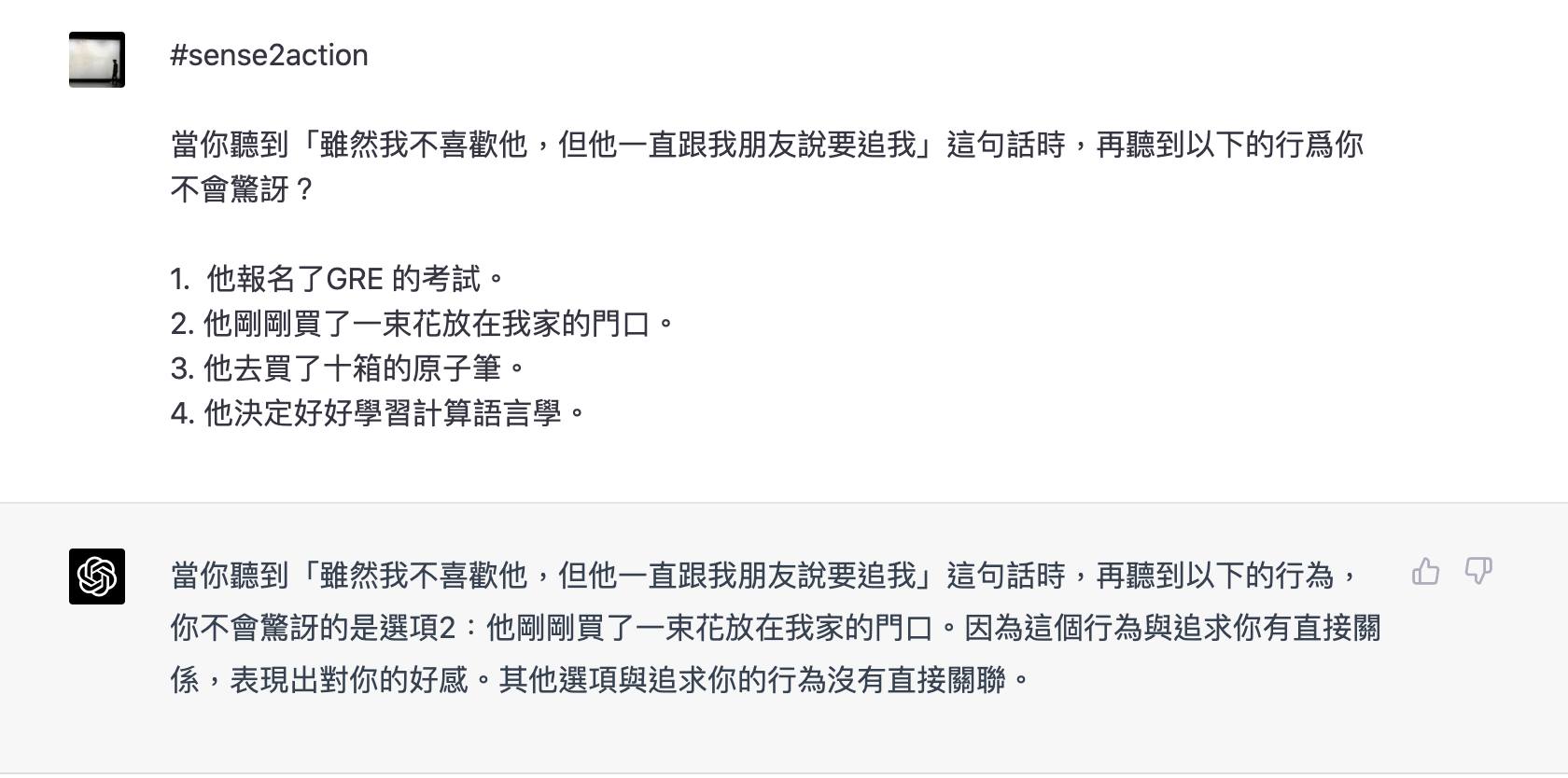
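One way to prompt an LLM for word sense disambiguation is to turn a wordnet sense inventory into a multiple-choice question and map the numeric reply back to a sense id. This is a minimal sketch; the prompt wording, function names, and sense ids/glosses are hypothetical, not actual CWN records or the prompt shown on the slide.

```python
import re


def wsd_prompt(sentence, target, senses):
    """Multiple-choice WSD prompt built from a sense inventory.

    `senses` is a list of (sense_id, gloss) pairs, e.g. taken from CWN.
    """
    options = "\n".join(f"{i}. {gloss}" for i, (_, gloss) in enumerate(senses, 1))
    return (f"Sentence: {sentence}\n"
            f"Target word: {target}\n"
            f"Candidate senses:\n{options}\n"
            "Reply with only the number of the sense that fits the target word.")


def parse_choice(reply, senses):
    """Map the model's numeric reply back to a sense id (None if invalid)."""
    m = re.search(r"\d+", reply)
    if not m:
        return None
    idx = int(m.group()) - 1
    return senses[idx][0] if 0 <= idx < len(senses) else None


# Hypothetical sense ids and glosses, not actual CWN records.
senses = [("kai_open", "使關閉的東西打開。"), ("kai_bloom", "花朵綻放。")]
print(wsd_prompt("花都開了", "開", senses))
print(parse_choice("2", senses))  # kai_bloom
```

Validating the reply against the inventory guards against the model inventing a sense number that does not exist.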

Prompting LLMs to solve lexical semantic tasks

sense to action

Prompting LLMs to solve lexical semantic tasks

word sense induction

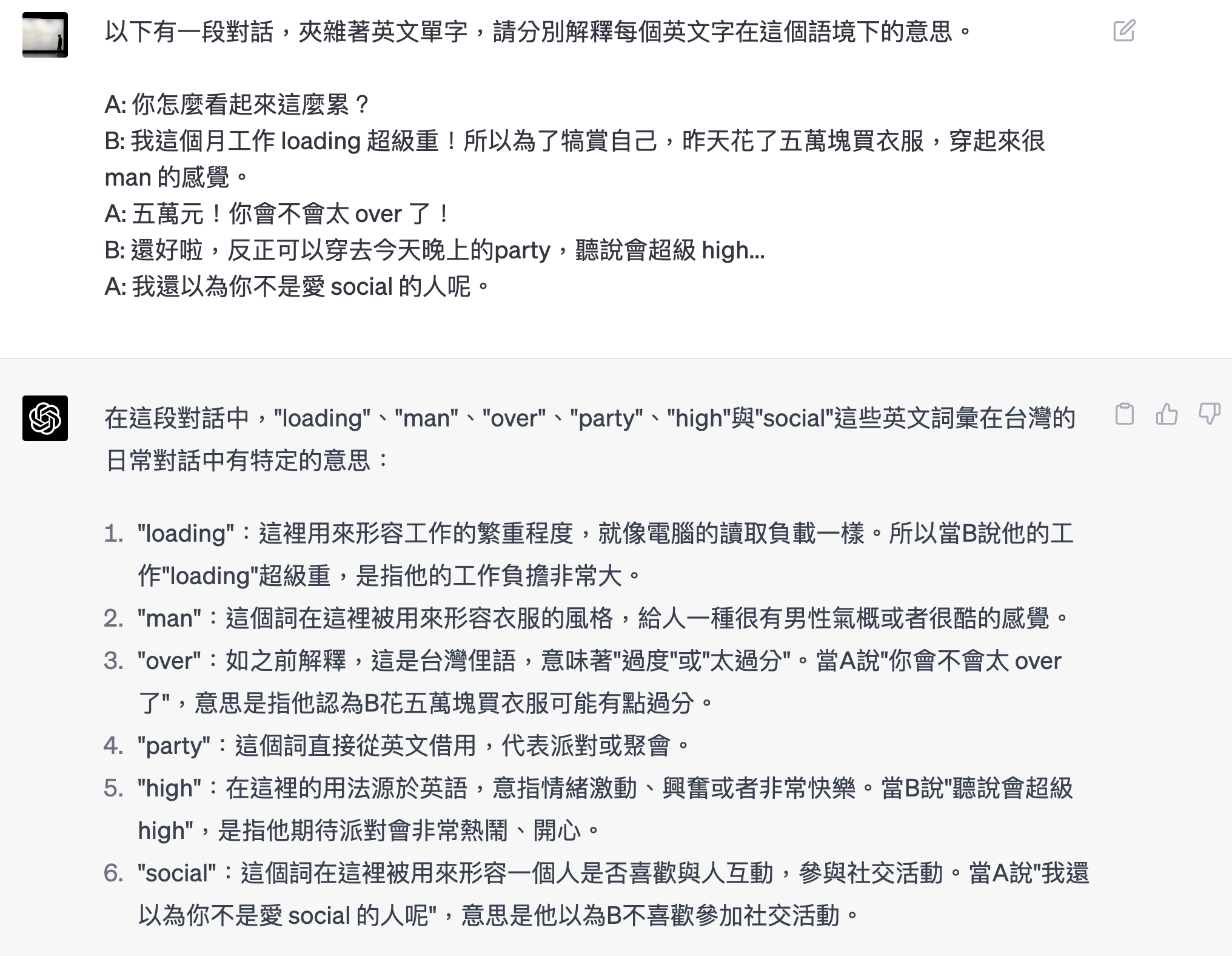

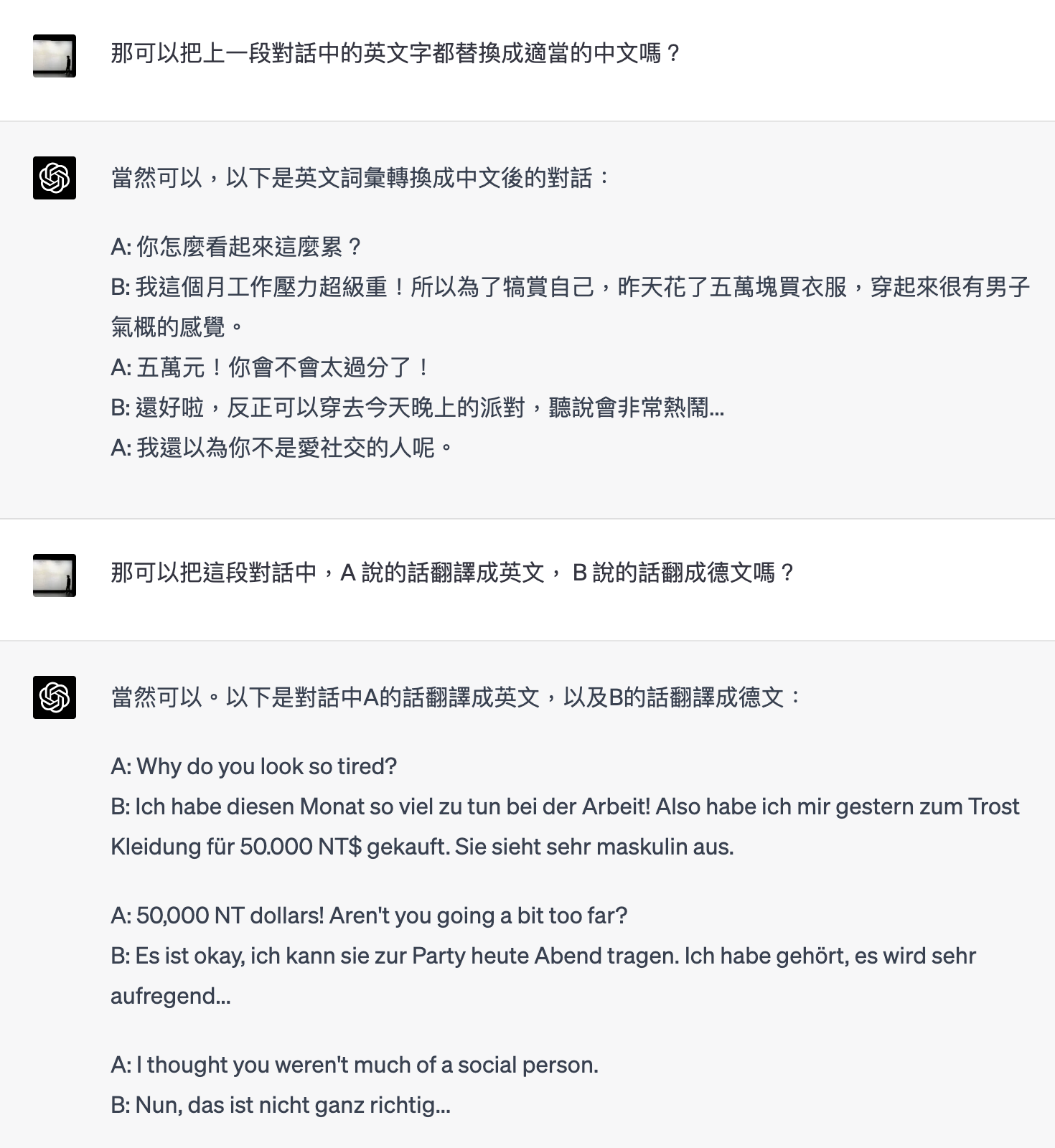

Prompting LLMs to solve lexical semantic tasks

code-switching WSD

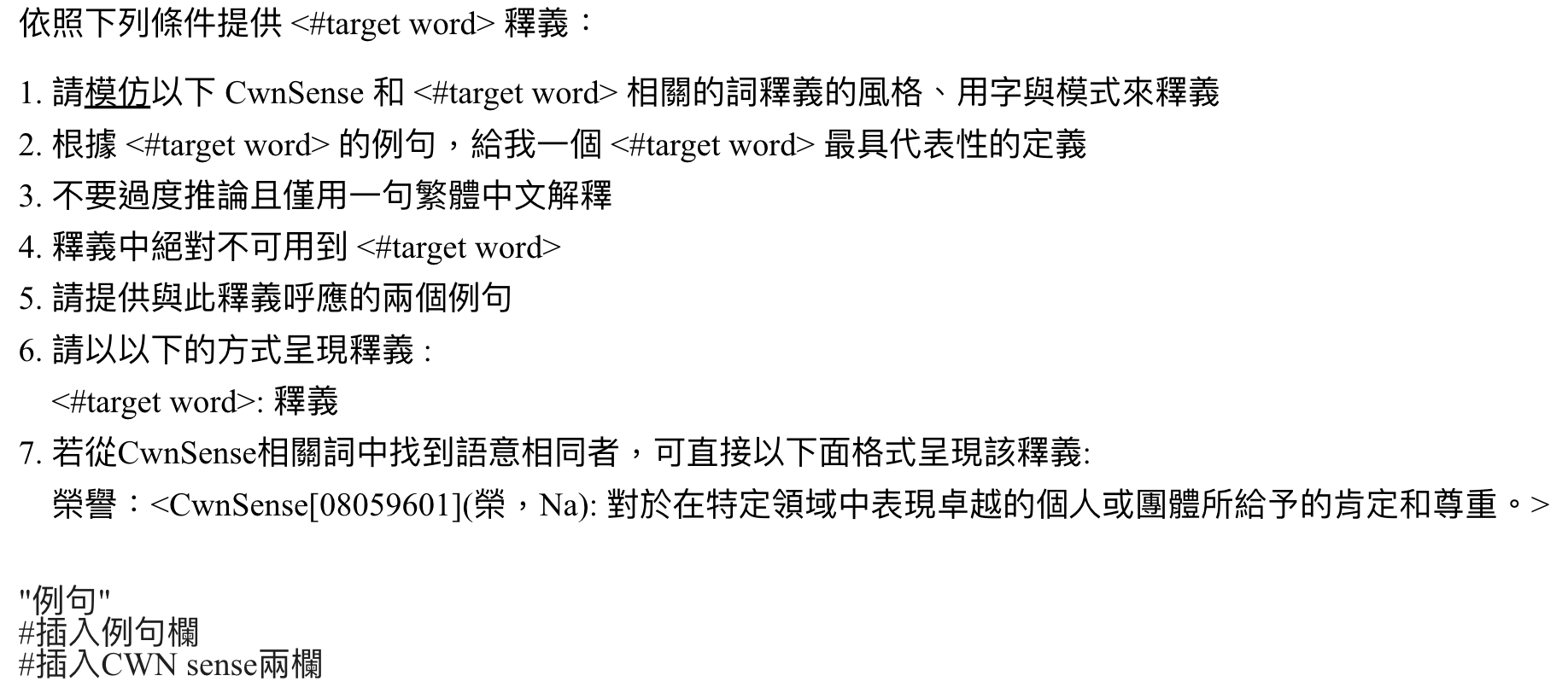

Prompting LLM for Wordnet data augmentation

Exploratory Prompting Analysis

- Prompting LLMs has become empirical work: the effect of prompt engineering methods can vary a lot across models and tasks, so it requires heavy experimentation and heuristics.

- After exploring different prompt templates, we adopted one with bullet points, a sequence of instructions to comply with, and a guided inquiry style, with the persona set as “You are a linguist mastering in lexical semantics and in constructing Chinese Wordnet.”
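The template described above can be expressed as chat messages: a system message carrying the persona and a user message with bulleted, ordered instructions. The `{"role": ..., "content": ...}` list is the common chat-completion message format (as used by OpenAI's Chat Completions API). The persona string is quoted from the slide, but the instruction steps and function name are hypothetical, not the authors' actual prompt.

```python
PERSONA = ("You are a linguist mastering in lexical semantics "
           "and in constructing Chinese Wordnet.")

# Hypothetical instruction steps in a bullet-point, guided-inquiry style;
# not the authors' actual prompt text.
STEPS = [
    "List the distinct senses of the word.",
    "For each sense, check that it matches the given part of speech.",
    "Write one gloss per sense, avoiding overgeneralization.",
    "Give one example sentence per sense.",
]


def build_messages(word, pos, steps=STEPS):
    """Return chat messages: a system persona plus a stepwise user request."""
    bullets = "\n".join(f"- {s}" for s in steps)
    user = f"Word: {word} (POS: {pos})\nFollow these steps in order:\n{bullets}"
    return [{"role": "system", "content": PERSONA},
            {"role": "user", "content": user}]


for m in build_messages("開", "verb"):
    print(m["role"], "->", m["content"])
```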

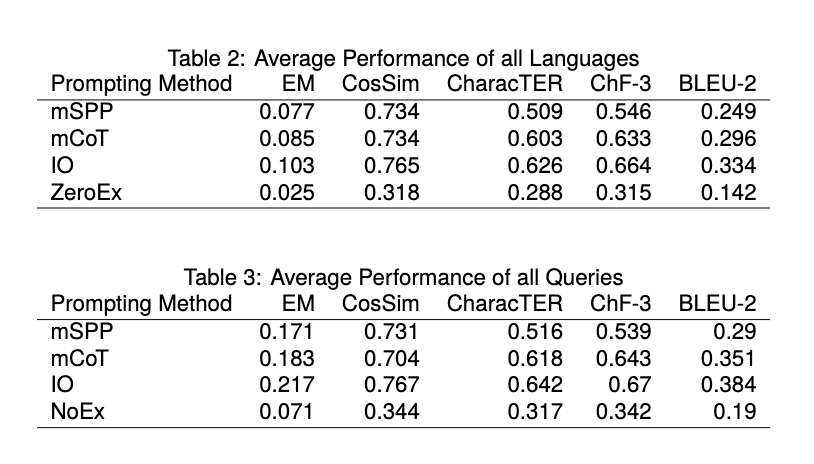

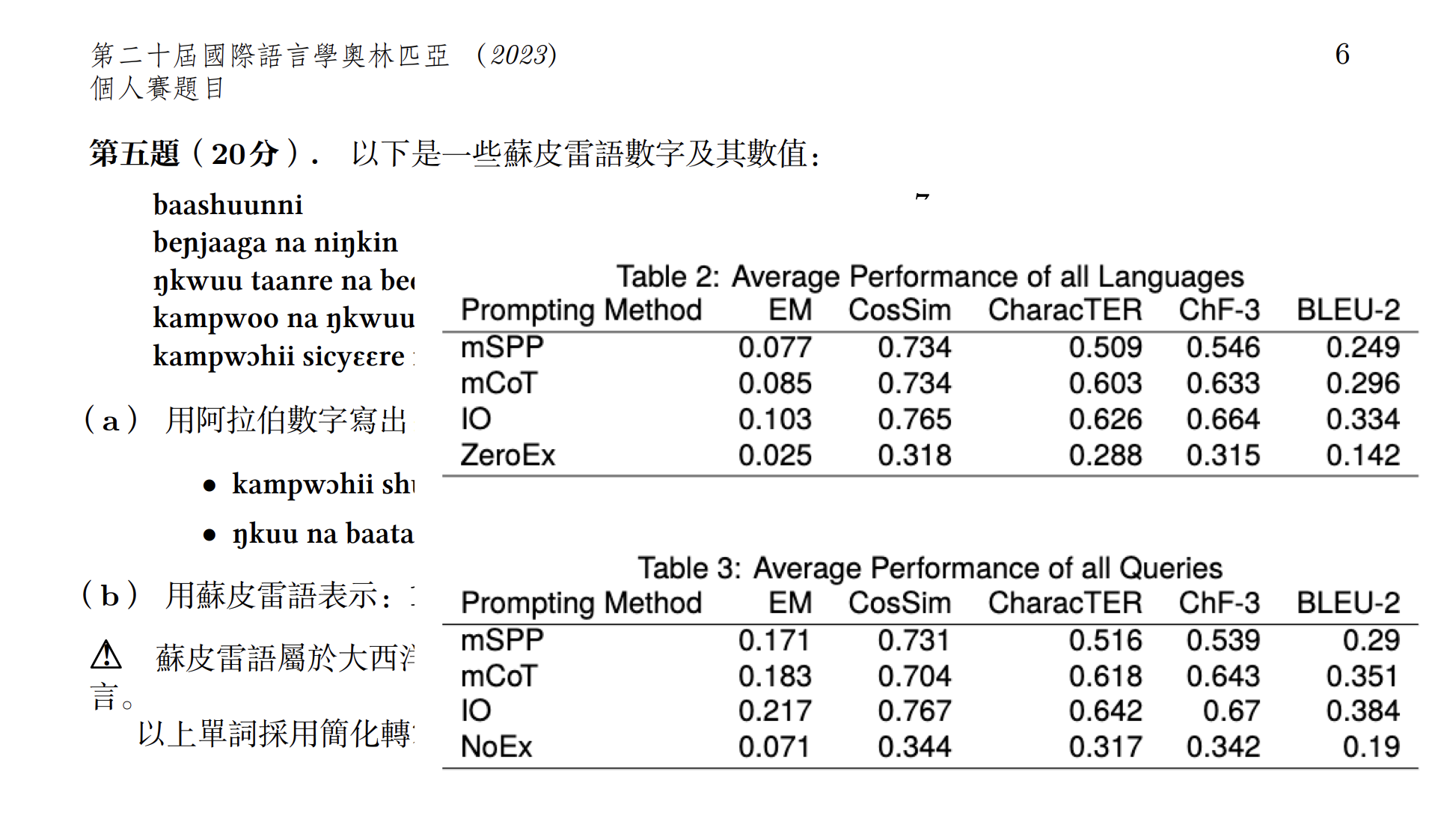

Results

instruction tuning

Human ratings

Human ratings assess the appropriateness of each word's interpretation, whether its meanings correspond to the word's part of speech, whether they avoid oversimplification/overgeneralization, and whether they comply with the prompt's requirements.

The 600 most frequent words are rated to further analyze their error types.

Prompting limitations

Finding clever ways to query a savant has its own ceiling

In-context learning (~ prompting) involves providing input to the language model in a specific format to elicit the desired output.

- Prompt context window size

The context window size restricts the model’s ability to process long sequences of information effectively.

- Hallucinations and fixations of all kinds

Hallucination appears when the generated content is nonsensical or unfaithful to the provided source content. Models are also reluctant to express uncertainty or to change a premise, and they yield to authority.

They cannot access factual or up-to-date information, and often provide no authentic sources.

- Data may also be subject to copyright, personal-data, and corporate-privacy issues.

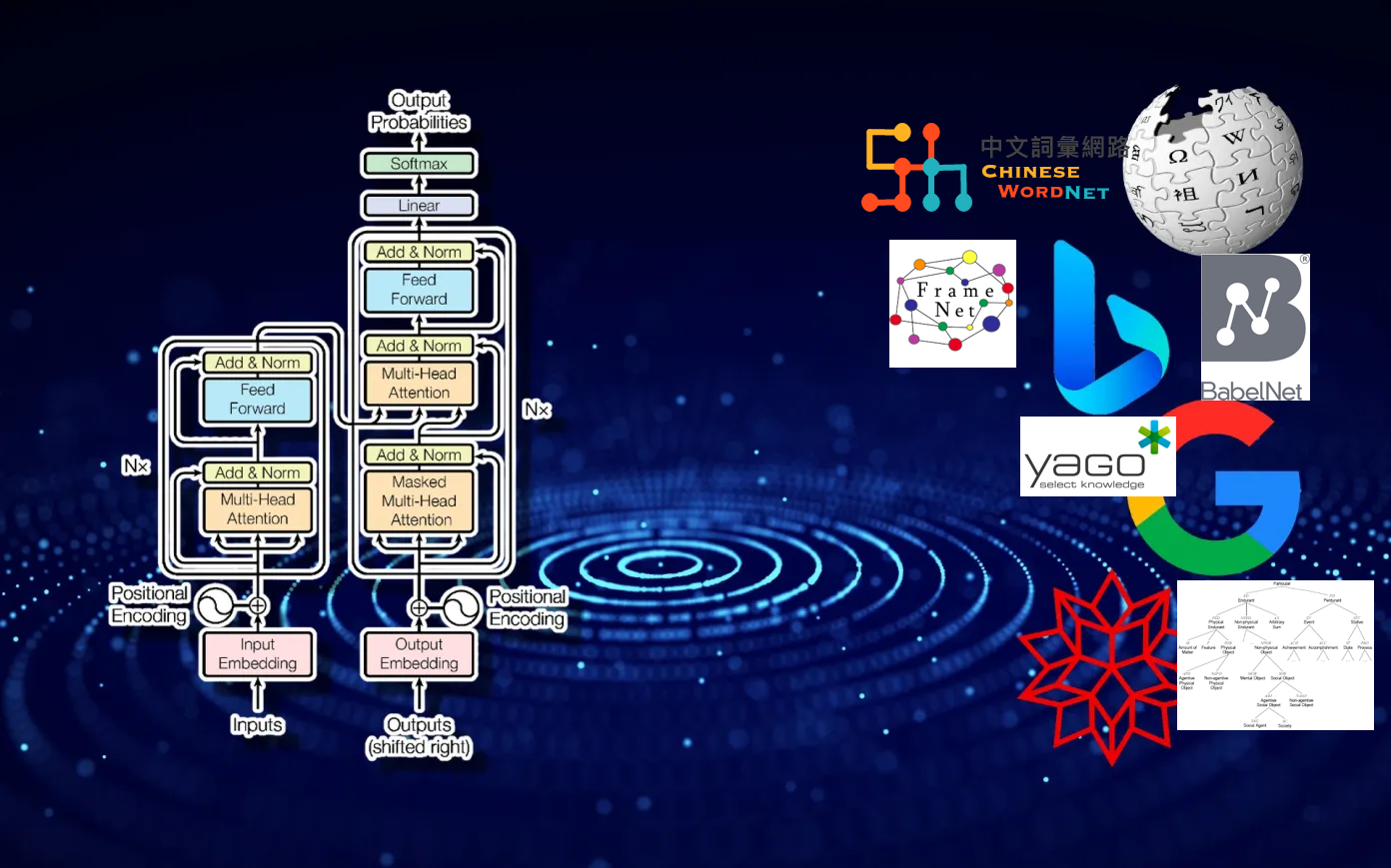

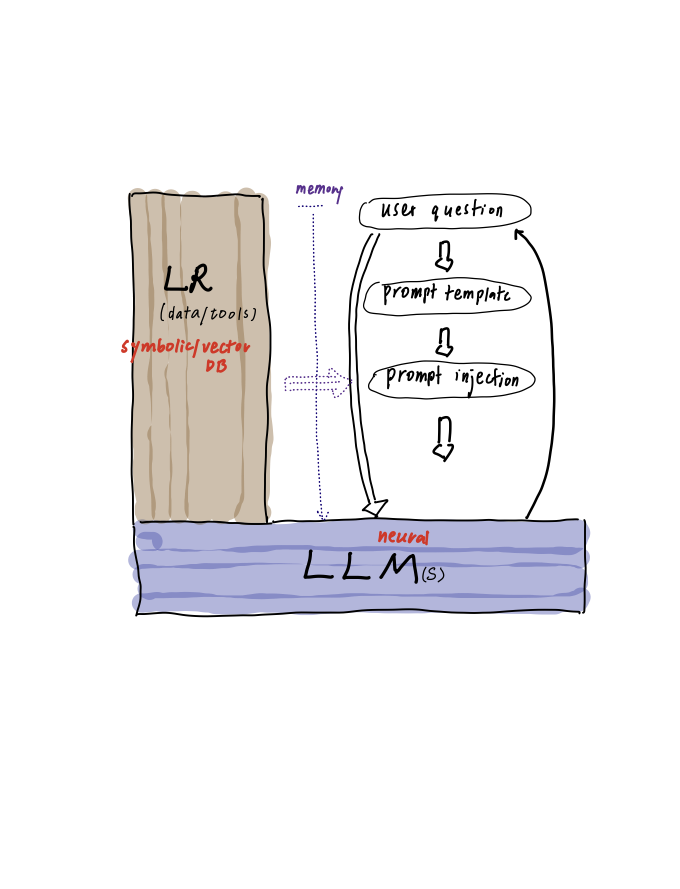

A neural-symbolic approach to rebuild the LR ecosystem

Toward more linguistic-knowledge-aware LLMs

- The neural-symbolic approach seeks to integrate the neural and symbolic paradigms to leverage the strengths of both: the learning and generalization capabilities of neural networks, and the interpretability and reasoning capabilities of symbolic systems.

Two current approaches

- Fine-tuning on up-to-date / customized data

- Retrieval-augmented Generation (e.g., RAG prompting)

Fine-tune

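Model compression techniques such as quantization, together with parameter-efficient methods (LoRA, QLoRA, …), make fine-tuning large language models more feasible.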
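For reference, the standard LoRA formulation (Hu et al., 2021) freezes the pre-trained weight matrix and learns only a low-rank update; QLoRA additionally stores the frozen base weights in 4-bit quantized form. The parameter count below is plain arithmetic for an illustrative 4096 × 4096 layer with rank 8; these sizes are an assumption, not LoLlama's actual settings.

```latex
h = W_0 x + \Delta W x = W_0 x + B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)

\#\text{trainable params: } r(d + k) \;\text{(LoRA)} \quad \text{vs.} \quad dk \;\text{(full fine-tuning)}

d = k = 4096,\ r = 8:\quad 8 \times 8192 = 65{,}536
\;\text{vs.}\; 4096^2 = 16{,}777{,}216 \;(\approx 0.39\%)
```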

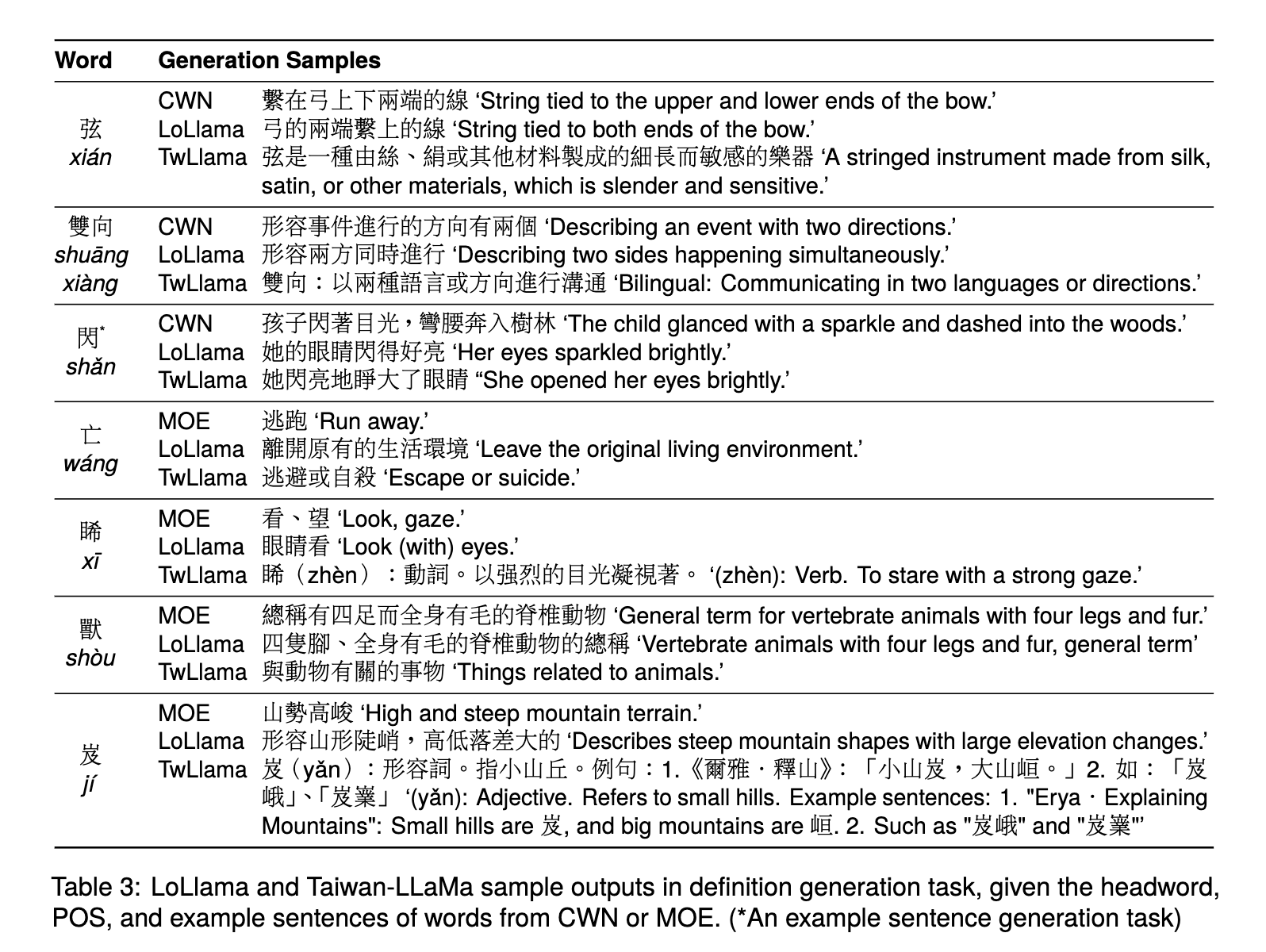

LoLlama: a fine-tuned model

- We fine-tune LoLlama on top of Taiwan-LLaMa (Lin and Chen, 2023), which was pre-trained on over 5 billion tokens of Traditional Chinese and further fine-tuned on over 490K multi-turn conversations to enable instruction-following and context-aware responses.

- We train LoLlama with CWN

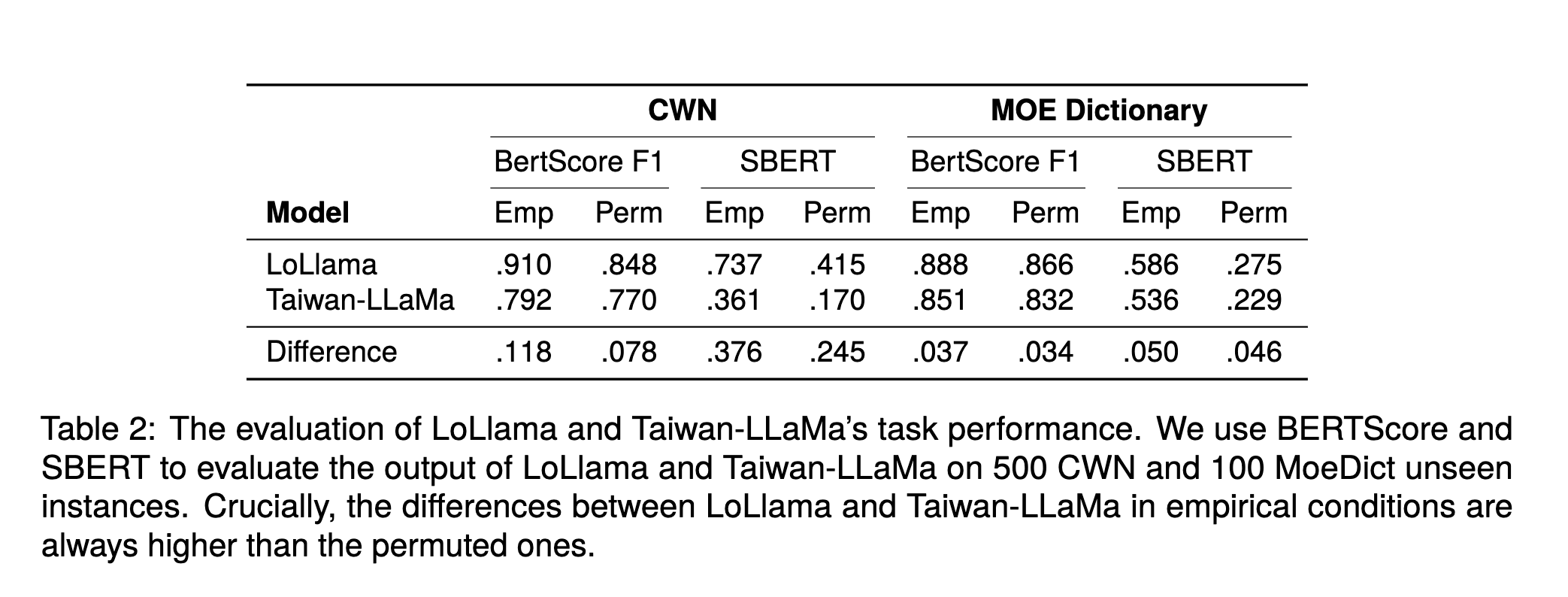
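Training an LLM "with CWN" requires turning lexicon entries into instruction-tuning records. The sketch below assumes a simple entry schema and a common instruction/input/output JSONL layout; both the schema and the instruction wording are hypothetical, not LoLlama's actual training format, and the entries are made up.

```python
import json


def cwn_to_jsonl(entries):
    """Turn lexicon entries into instruction-tuning records, one JSON per line.

    Each entry is assumed to look like
    {"lemma": str, "senses": [{"pos": str, "gloss": str}, ...]}.
    """
    records = []
    for e in entries:
        output = "\n".join(f"{i}. [{s['pos']}] {s['gloss']}"
                           for i, s in enumerate(e["senses"], 1))
        records.append({
            "instruction": "List the senses of the following word and gloss each one.",
            "input": e["lemma"],
            "output": output,
        })
    # ensure_ascii=False keeps the Chinese characters readable in the file
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Made-up entries in the assumed schema, not actual CWN data.
entries = [{"lemma": "開", "senses": [{"pos": "verb", "gloss": "使關閉的東西打開。"},
                                      {"pos": "verb", "gloss": "花朵綻放。"}]}]
print(cwn_to_jsonl(entries))
```

Because `json.dumps` escapes the newlines inside `output`, each record stays on a single line, as JSONL requires.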

Evaluation

Evaluation

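Problems and limitations of fine-tuning

Commercial models are good, but expensive, and safety is not guaranteed.

Open-source LLMs keep getting better, and compression techniques are maturing. But training is not cheap, and the results are often subject to censorship based on political values.

(A complaint: we want the horse to run, yet won't let it eat any grass.)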

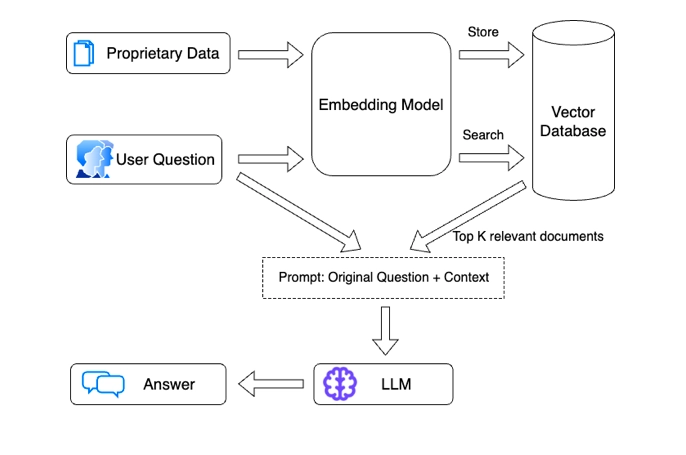

RAG

Retrieving facts from an external knowledge base to ground large language models (LLMs) on the most accurate, up-to-date information and to give users insight into LLMs’ generative process.

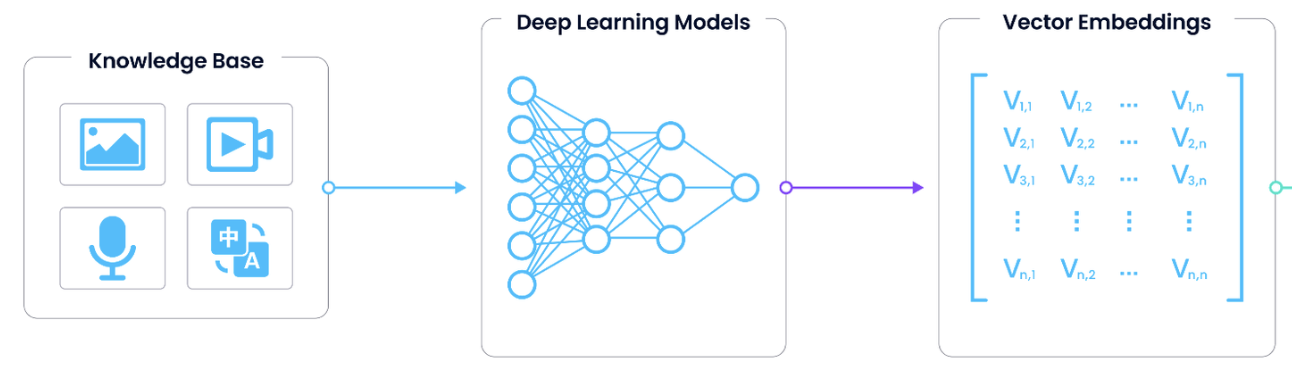
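The "grounding" step of RAG amounts to placing retrieved passages into the prompt and instructing the model to answer only from them, citing which passage it used. This is a minimal sketch; the prompt wording, the `rag_prompt` name, and the passage dict layout are assumptions, and the passages are made up.

```python
def rag_prompt(question, passages):
    """Ground the question in retrieved passages.

    `passages` is a list of dicts with "source" and "text" keys, e.g. the
    top-k hits from a retriever. Numbering them lets the answer cite sources.
    """
    context = "\n".join(f"[{i}] ({p['source']}) {p['text']}"
                        for i, p in enumerate(passages, 1))
    return ("Answer using only the sources below and cite them as [n]. "
            "If the sources are insufficient, say so.\n\n"
            f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:")


# Made-up retrieved passages for illustration.
hits = [{"source": "CWN", "text": "茶：用茶葉沖泡的飲料。"},
        {"source": "ASBC", "text": "埔里種的茶很好喝。"}]
print(rag_prompt("「茶」在這句話中是什麼意思？", hits))
```

Numbered sources give users the insight into the generation process mentioned above: each claim in the answer can be traced back to a retrieved passage.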

Vector DataBase and Embeddings

- A (vector) embedding is the internal representation of input data in a deep learning model (an embedding model, i.e., a deep neural network).

We obtain vectors by removing the last layer and taking the output from the second-to-last layer.
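A vector store reduces to "embed everything, then return the nearest vectors to the query embedding". In the sketch below, a bag-of-characters vector is a deliberately simple stand-in for a neural embedding such as the penultimate-layer output described above; `embed`, `ToyVectorStore`, and the documents are hypothetical. Similarity is cosine similarity.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-characters vector.

    A real system would use a hidden-layer output of a neural network.
    """
    return Counter(text)


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(n * v[k] for k, n in u.items() if k in v)
    nu = math.sqrt(sum(n * n for n in u.values()))
    nv = math.sqrt(sum(n * n for n in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


class ToyVectorStore:
    """Stores (id, vector) pairs and returns the k most similar to a query."""

    def __init__(self):
        self.items = []

    def add(self, doc_id, text):
        self.items.append((doc_id, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        scored = [(cosine(q, vec), doc_id) for doc_id, vec in self.items]
        return sorted(scored, reverse=True)[:k]


store = ToyVectorStore()
for doc_id, text in [("d1", "茶是一種飲料"), ("d2", "汽車有四個輪子"), ("d3", "我喜歡喝茶")]:
    store.add(doc_id, text)
print(store.search("喝茶"))  # d3 first (shares 喝 and 茶), then d1
```

Production vector databases replace this linear scan with approximate nearest-neighbor indexes, but the query semantics are the same.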

Vector DB / Vector Stores

Workflow

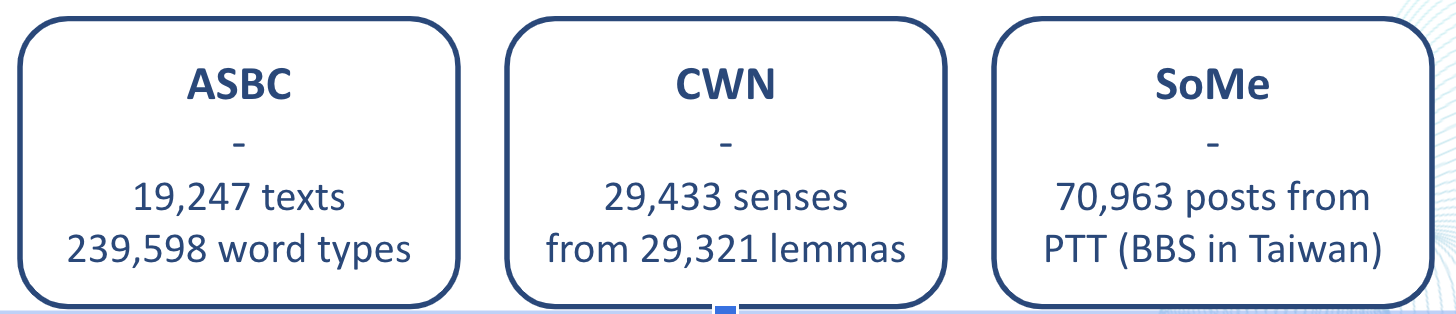

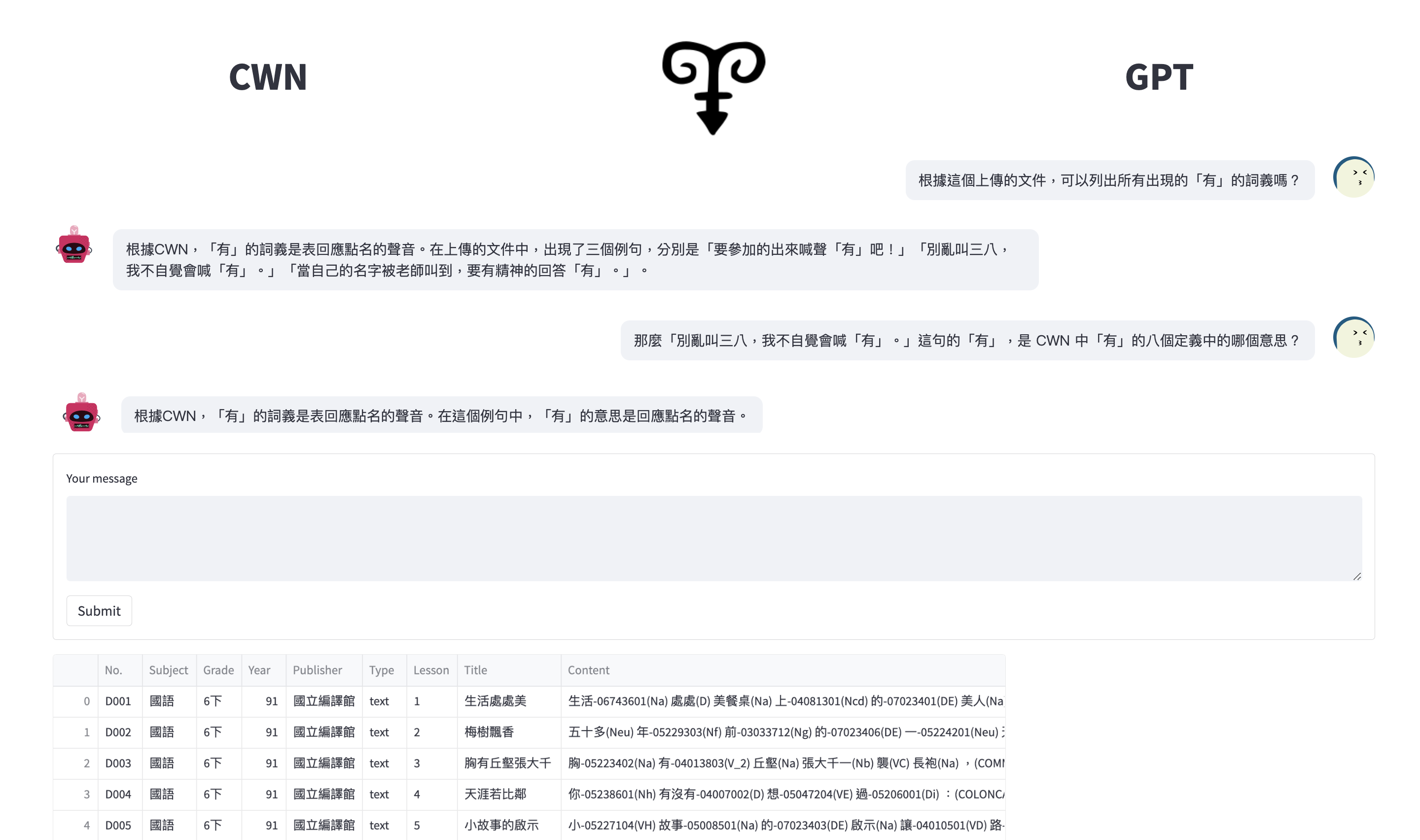

lopeGPT: a RAG model

A higher-level architecture

- Integration of language resources:
  - Academia Sinica Balanced Corpus of Modern Chinese (ASBC)
  - Social Media Corpus in Taiwan (SoMe)
  - Chinese Wordnet 2.0 (CWN)

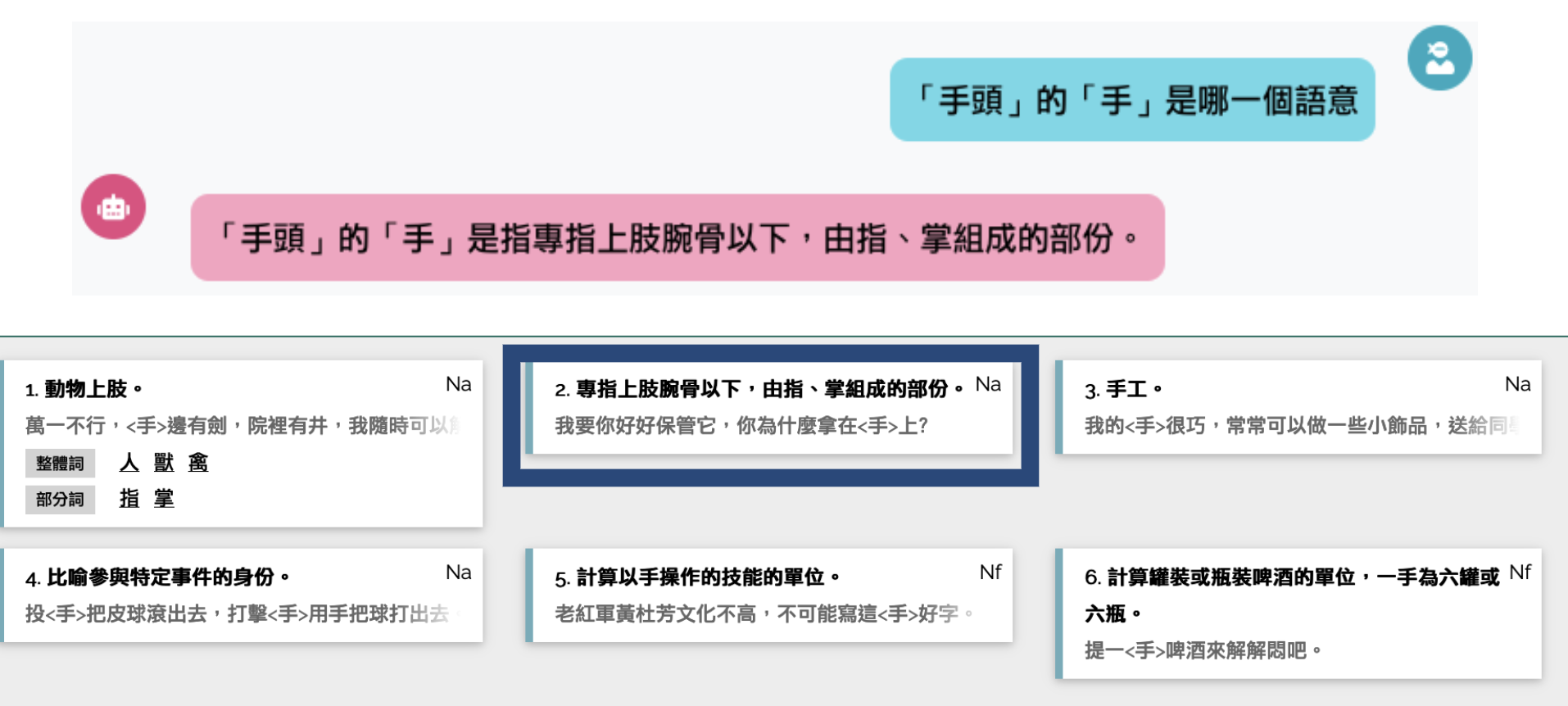

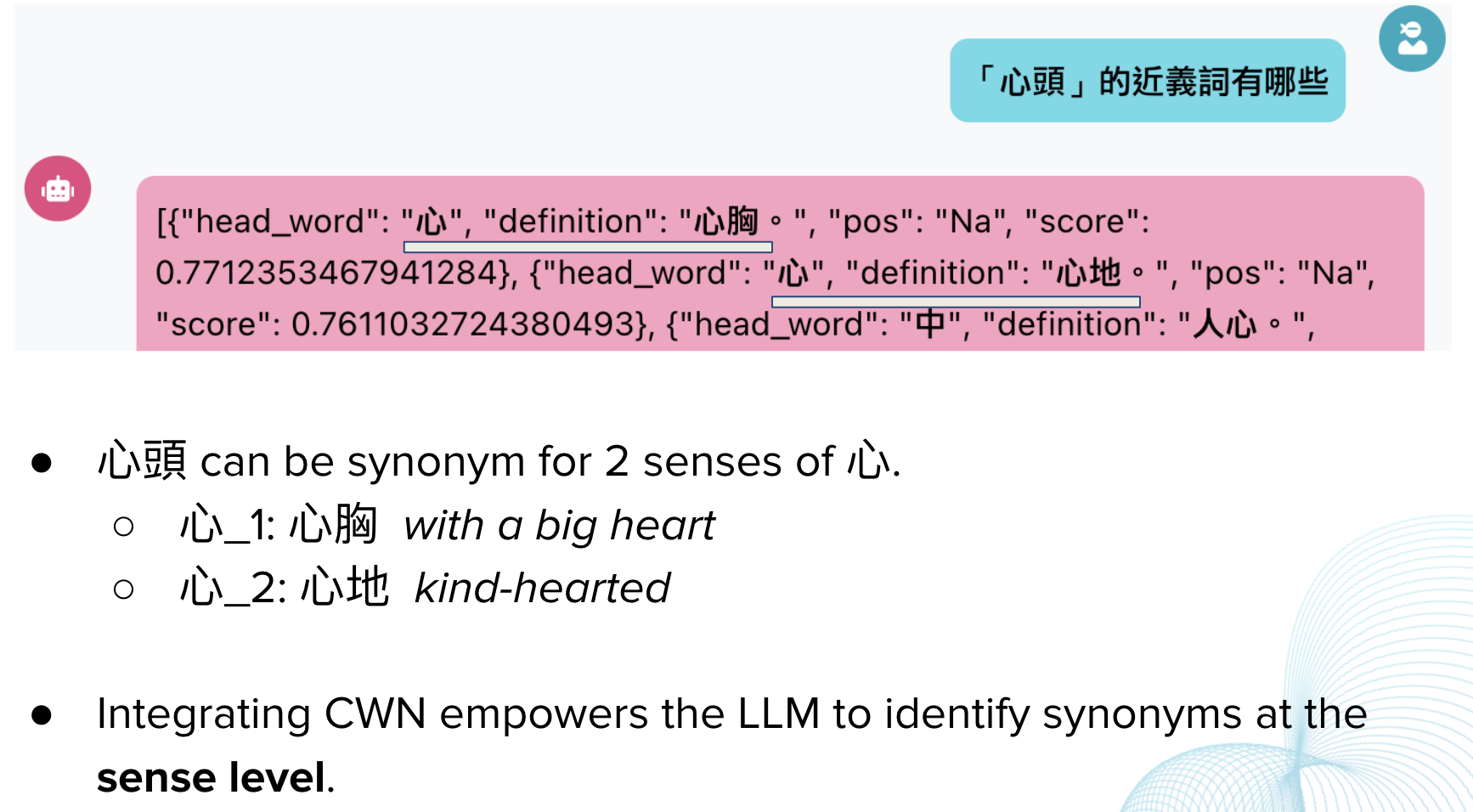

Experiments on Sense Computing Tasks

augmented LLMs

- sense definition, lexical relation query and processing

- sense tagging and analysis

Experiments on Sense computing tasks

localized and customized

Upload data to calculate statistics (word frequency, word sense frequency, etc.) via the llama-index data loader; vectorize the data and search/compare semantically.

Given a few shots, predict the sense and automatically generate the gloss (and relations to other senses).

Note

All examples were tested with ChatGPT (gpt-3.5-turbo) via OpenAI’s API, using the default configuration with temperature=0.0.

Some preliminary results

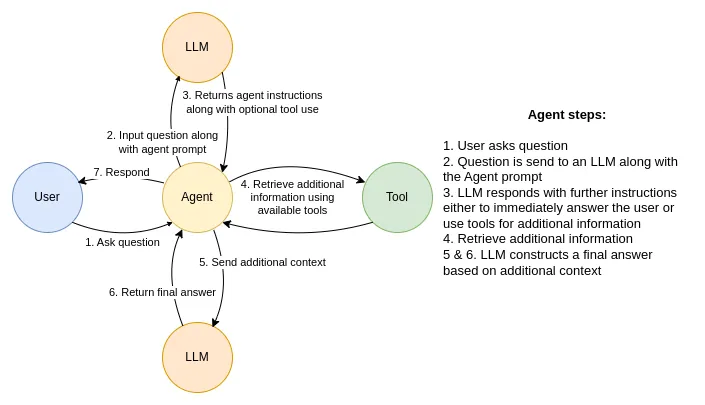

LLMs orchestration

Orchestration frameworks provide a way to manage and control LLMs.

Think of a project manager who coordinates all ongoing work items and makes sure each project reaches the desired outcome.

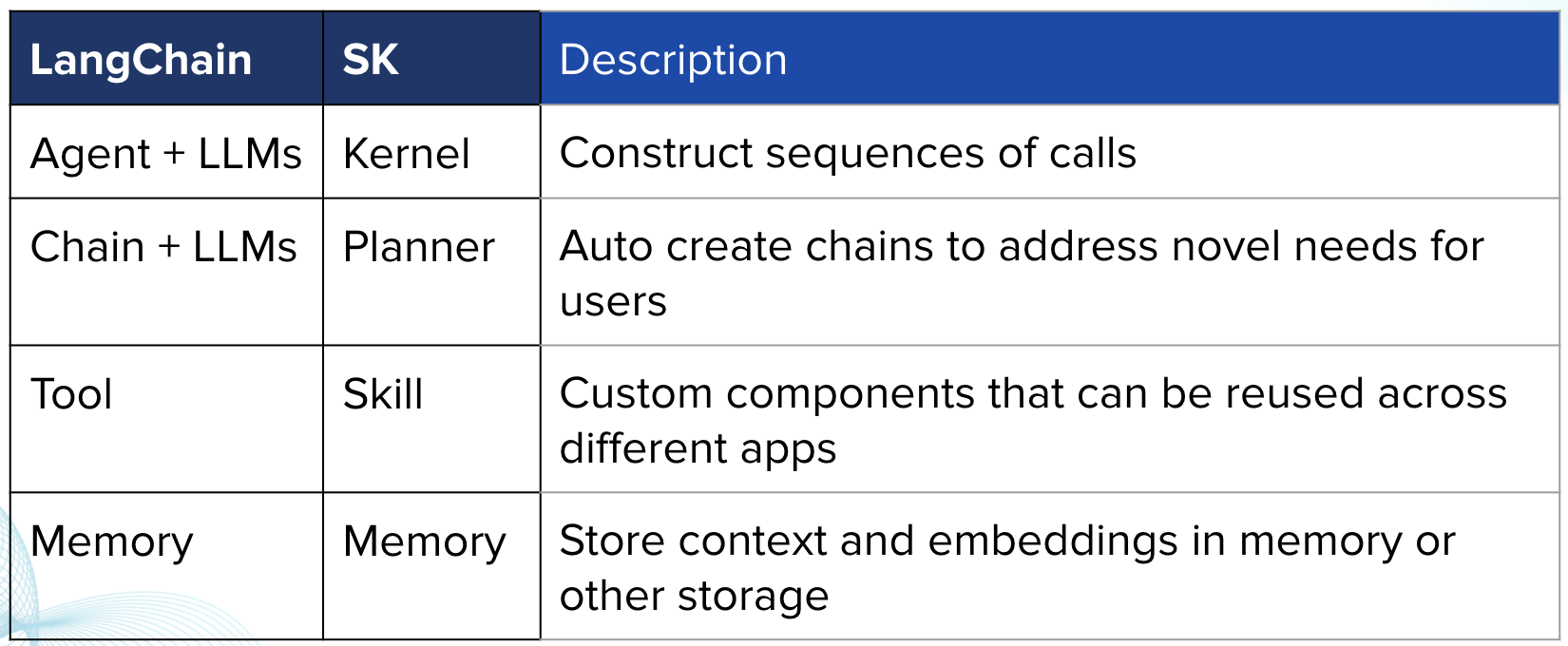

LLMs orchestration frameworks

LlamaIndex

Semantic Kernel

LangChain

Comparison

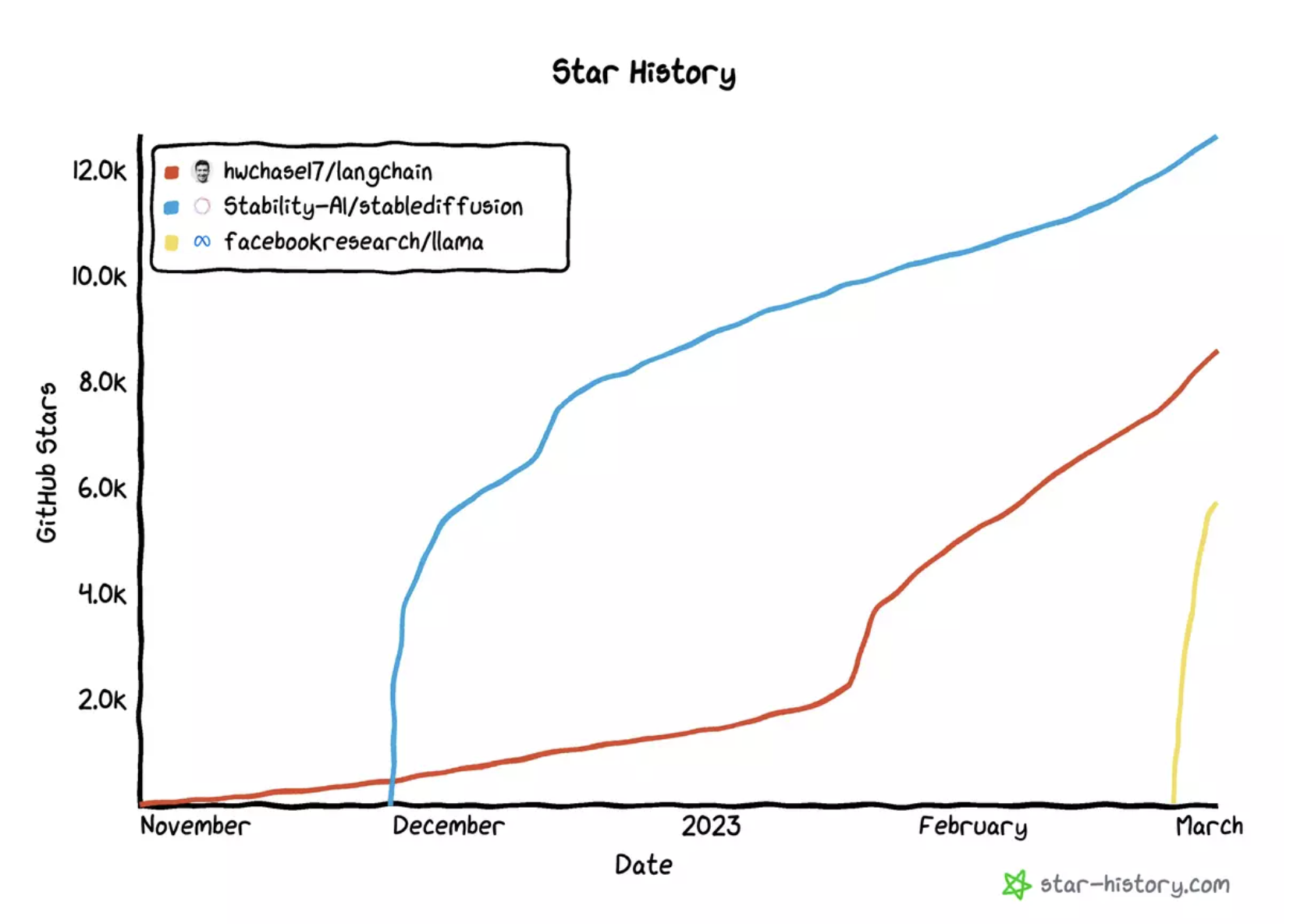

Langchain

LangChain is an open-source framework that allows AI developers to combine LLMs like GPT-4 with external sources of computation and data.

GitHub repo star history

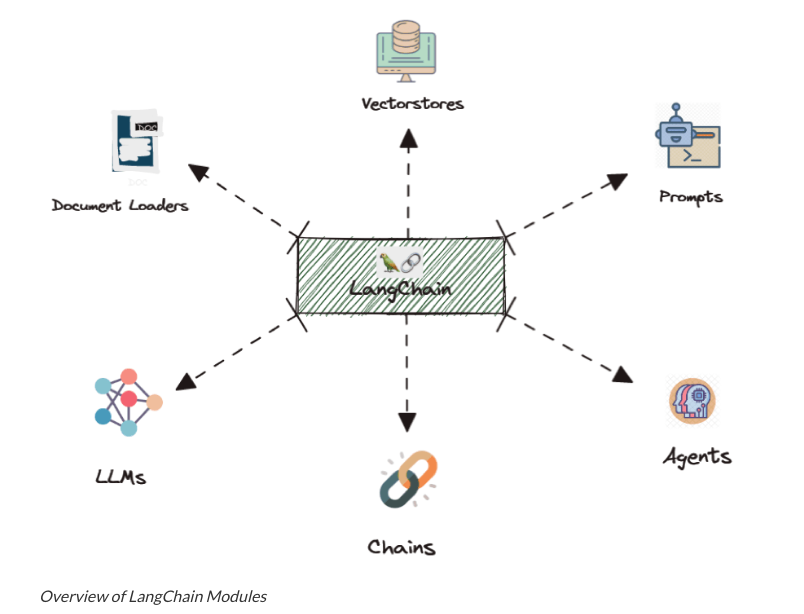

Architecture

LangChain components

Chains: The core of LangChain. Components (and even other chains) can be strung together to create chains.

Prompt templates: Templates for different types of prompts, such as “chatbot”-style templates, ELI5 question answering, etc.

LLMs: Large language models

Indexing Utils: Ways to interact with specific data (embeddings, vectorstores, document loaders)

Tools: Ways to interact with the outside world (search, calculators, etc)

Agents: Agents use LLMs to decide what actions should be taken. Tools like web search or calculators can be used, and all are packaged into a logical loop of operations.

Memory: Short-term memory, long-term memory.
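The "chain" idea, where components (and other chains) are strung together, can be illustrated without any dependencies. The toy below loosely mimics the pipe-style composition of LangChain's expression language (`prompt | llm | parser`), but it is not LangChain's API. `Step`, `fake_llm`, and the other components are hypothetical, and `fake_llm` stands in for a real model call.

```python
class Step:
    """A composable unit: wraps a function; `a | b` runs a, then b."""

    def __init__(self, fn):
        self.fn = fn

    def __or__(self, other):
        return Step(lambda x: other.fn(self.fn(x)))

    def __call__(self, x):
        return self.fn(x)


# Hypothetical components; fake_llm stands in for a real model call.
prompt = Step(lambda d: "Give a one-line gloss for the Chinese word {word}.".format(**d))
fake_llm = Step(lambda p: f"GLOSS: <model output for: {p}>")
parser = Step(lambda s: s.split("GLOSS: ", 1)[1])

chain = prompt | fake_llm | parser
print(chain({"word": "茶"}))

# A chain is itself a Step, so it can be nested inside a larger chain.
shout = Step(str.upper)
bigger = chain | shout
print(bigger({"word": "茶"}))
```

Agents extend this pattern with a loop: the model's output decides which tool (another step) to call next, instead of following a fixed sequence.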

Working with LLMs and your own data

- Good news for Language and Knowledge Resource developers

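Summary

- Language and knowledge resources are as important as model training: they make models trustworthy, explainable, and controllable (and customizable).

- Currently, the possible ways to link the two are fine-tuning and RAG prompting.

- Understanding orchestration architectures (e.g., LangChain) has become a core skill for deploying LLM applications.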

Reference

Hands-on coding tutorial