Text Generation Metrics

- BLEU (Bilingual Evaluation Understudy)

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

- METEOR (Metric for Evaluation of Translation with Explicit ORdering)

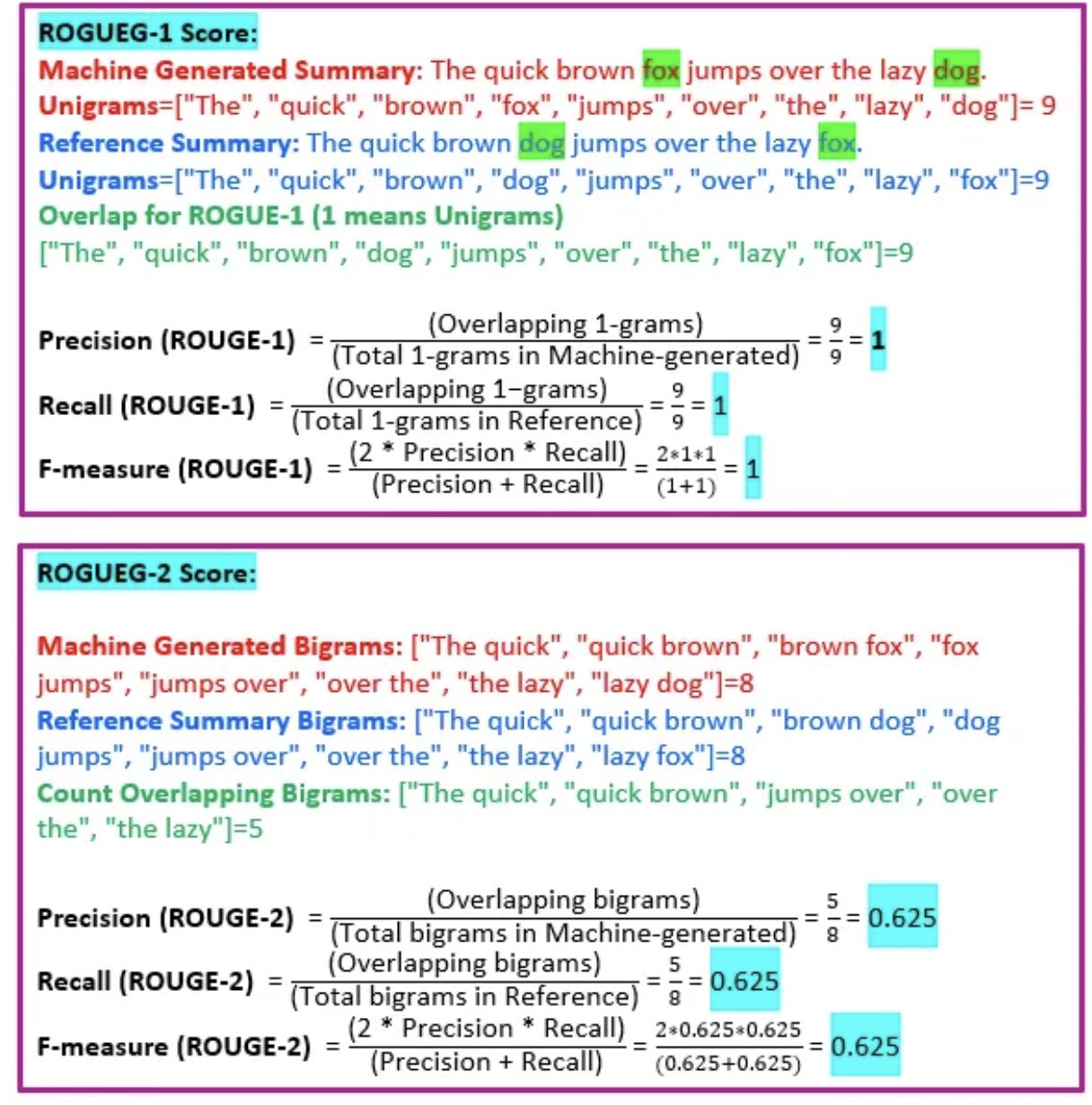

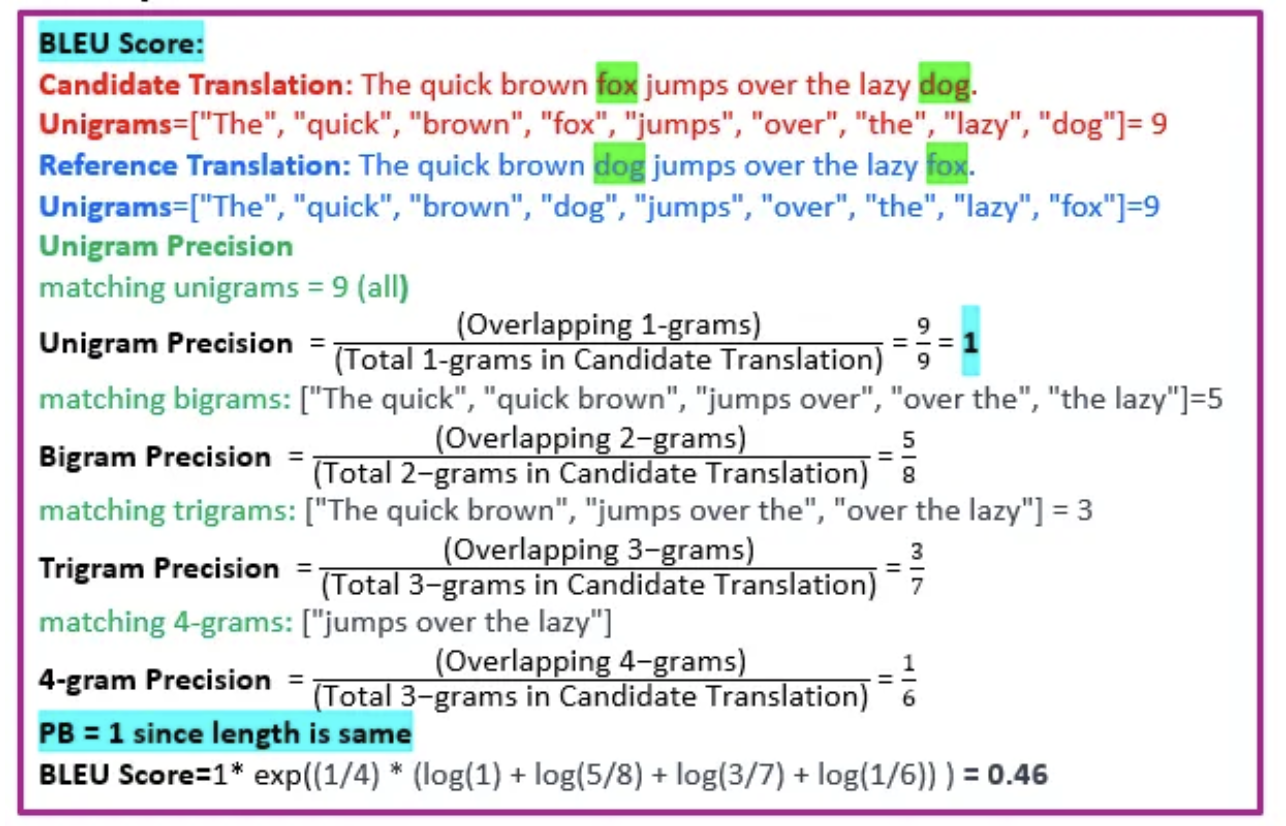

ROUGE

- Measures the quality of a summary by counting overlapping units (n-grams, word sequences, and word pairs) between the model-generated text and the reference texts.

- Variants: ROUGE-N focuses on n-grams (N-word phrases). ROUGE-1 and ROUGE-2 (unigrams and bigrams, respectively) are the most common.
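The n-gram overlap behind ROUGE-N can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes whitespace tokenization, lowercasing, and a single reference, and the function names `ngrams` and `rouge_n` are made up for this example.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """ROUGE-N precision, recall, and F1 for one candidate/reference pair.

    Assumption: plain whitespace tokenization, no stemming or stopword removal.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_n("the cat sat on the mat", "the cat is on the mat", n=2)
print(scores["recall"])  # 3 of the 5 reference bigrams are matched: 0.6
```

ROUGE is recall-oriented, so the recall value is usually the headline number; precision and F1 are shown here only because they fall out of the same counts.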

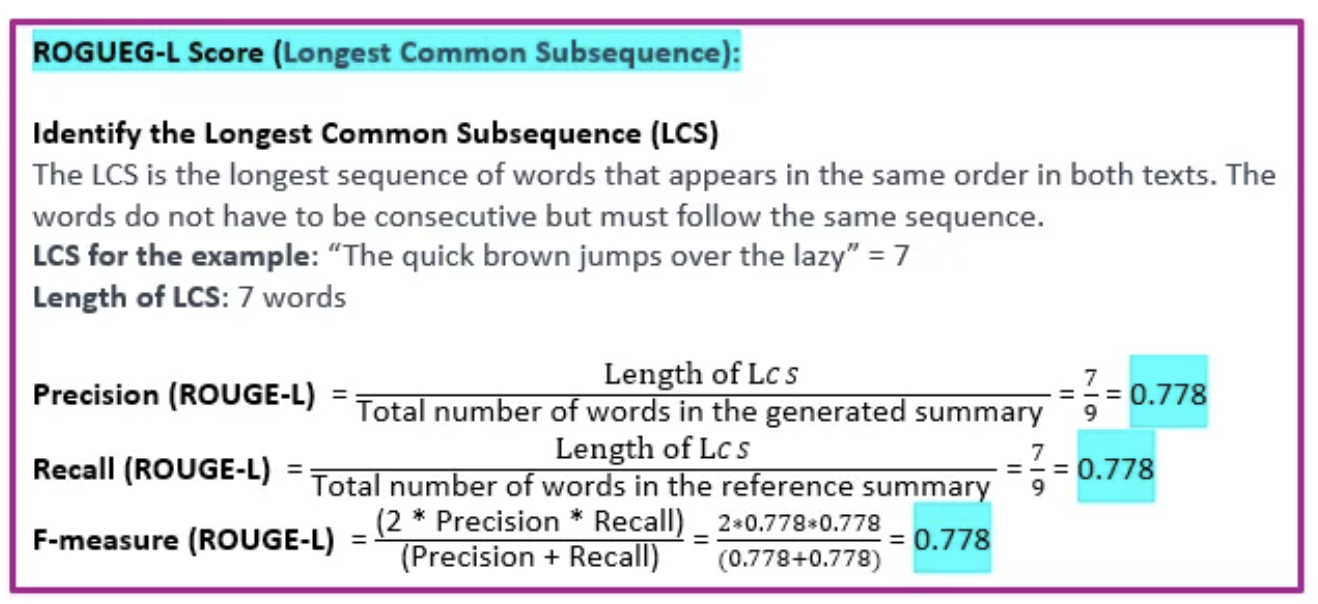

BLEU

- a widely used metric for evaluating the quality of machine-translated text (Candidate) against reference translations (Reference).

- Calculated as the geometric mean of the n-gram precisions, multiplied by the brevity penalty (BP); p_i is the precision for n-grams of size i.

- Range: the BLEU score typically runs from 0 to 1, where 0 indicates no overlap between the translated text and the reference translations and 1 indicates a perfect match with a reference.
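The BLEU computation described above (clipped n-gram precisions, combined by a geometric mean and scaled by the brevity penalty) can be sketched as follows. This is a simplified sentence-level version under stated assumptions: whitespace tokenization, uniform weights over n = 1..`max_n`, and no smoothing, so any zero precision gives a score of 0. The function names are illustrative, not from any library.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with uniform weights and no smoothing (a sketch)."""
    cand = candidate.split()
    refs = [r.split() for r in references]
    if not cand or not refs:
        return 0.0

    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        total = sum(cand_counts.values())
        # For each n-gram, take the maximum count seen in any reference.
        max_ref_counts = Counter()
        for ref in refs:
            max_ref_counts |= ngram_counts(ref, n)
        # Clip candidate counts so repeated n-grams are not over-rewarded.
        clipped = sum((cand_counts & max_ref_counts).values())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, one zero precision zeroes the score
        log_precision_sum += math.log(clipped / total) / max_n

    c = len(cand)
    # Effective reference length: closest to the candidate, shorter on ties.
    r = min((len(ref) for ref in refs), key=lambda length: (abs(length - c), length))
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_precision_sum)


print(bleu("the cat sat on the mat", ["the cat is on the mat"], max_n=2))
```

With `max_n=2` the example has unigram precision 5/6 and bigram precision 3/5, and the lengths match so BP is 1; the score is therefore the square root of 0.5, about 0.707. Corpus-level BLEU aggregates counts over all sentences before taking the ratio, which this sketch does not do.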

Question Answering Metrics

- EM (Exact Match)

- Mean Reciprocal Rank (MRR)
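Both question answering metrics listed above are simple to compute. The sketch below is illustrative only: the normalization in `normalize` (lowercasing, stripping punctuation and English articles) is an assumption modeled on common QA evaluation practice, and all function names are made up for this example.

```python
import re
import string


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold_answers):
    """1.0 if the normalized prediction equals any normalized gold answer."""
    pred = normalize(prediction)
    return float(any(pred == normalize(gold) for gold in gold_answers))


def mean_reciprocal_rank(ranked_lists, gold_sets):
    """Average of 1/rank of the first correct item per query (0 if none)."""
    if not ranked_lists:
        return 0.0
    total = 0.0
    for ranked, gold in zip(ranked_lists, gold_sets):
        for rank, item in enumerate(ranked, start=1):
            if item in gold:
                total += 1.0 / rank
                break
    return total / len(ranked_lists)


print(exact_match("The Eiffel Tower.", ["eiffel tower"]))  # 1.0
print(mean_reciprocal_rank(
    [["a", "b", "c"], ["x", "y", "z"], ["p", "q"]],
    [{"b"}, {"x"}, {"none"}],
))  # (1/2 + 1 + 0) / 3 = 0.5
```

EM is strict by design, so the choice of normalization largely determines how forgiving it is.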

Summarization Metrics

- ROUGE

- BERTScore

Specialized Metrics

- Perplexity

- Human Evaluation

- Prompt-based Evaluation
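Of the specialized metrics above, perplexity has a closed-form definition: the exponential of the average negative log-likelihood per token. The sketch below assumes the per-token natural-log probabilities have already been obtained from a model; how to extract them depends on the framework and is not shown. The function name `perplexity` is illustrative.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from per-token natural-log probabilities.

    Assumption: token_log_probs holds log p(token_i | previous tokens)
    for every token in the evaluated text.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# A model that assigns probability 0.25 to every token behaves like a
# uniform choice among 4 options, so its perplexity is 4.
print(perplexity([math.log(0.25)] * 10))
```

Lower is better. Perplexity values are only comparable between models that share a tokenizer, because the average is taken per token.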

Hands-on Practice

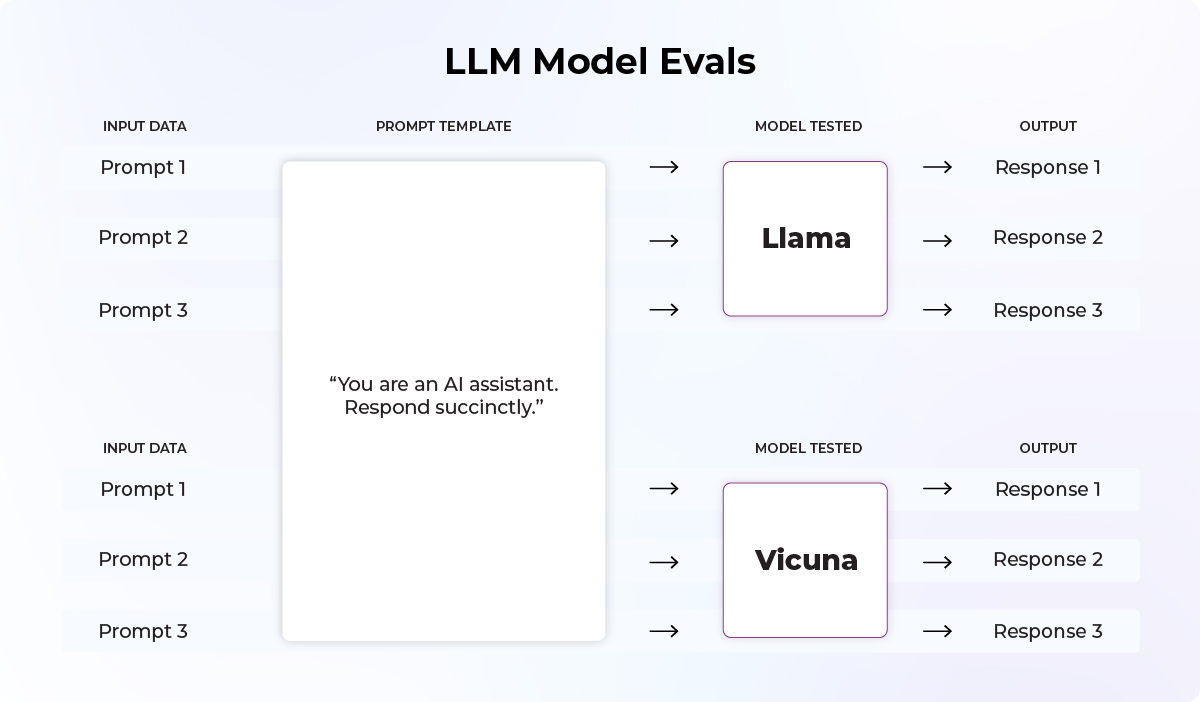

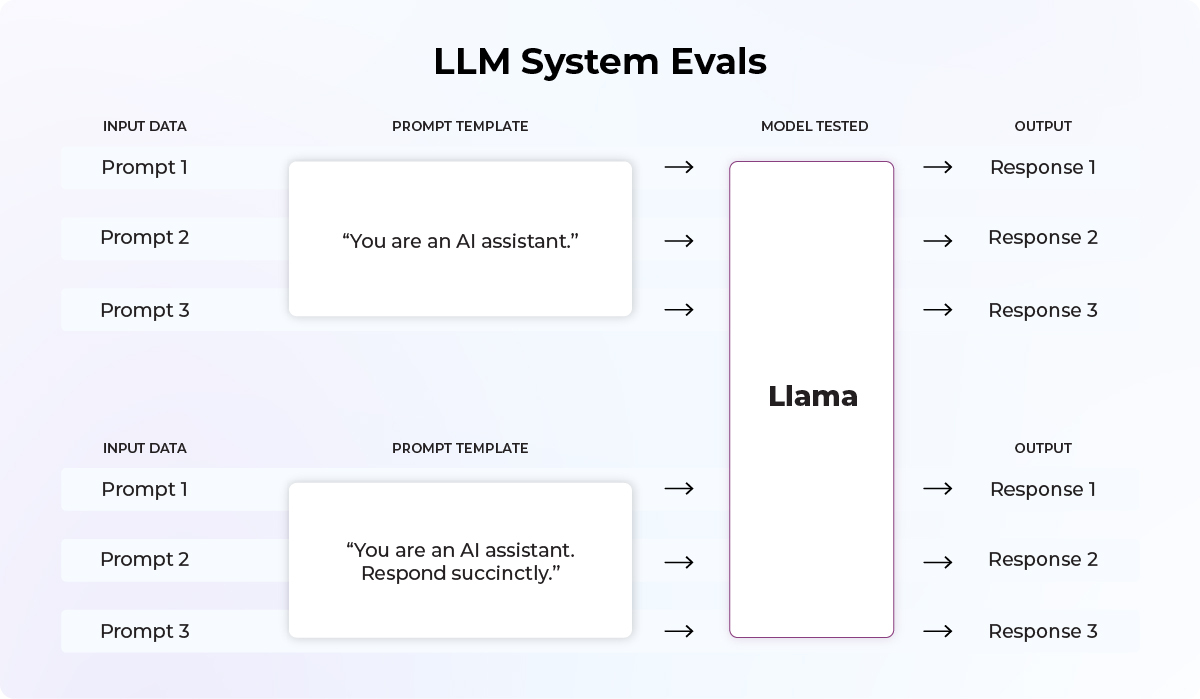

- In the LLM era, we need to distinguish LLM Model Evaluations (overall macro performance) from LLM Task Evaluations (use-case-specific performance).

LLM Task Evaluations (or System Evaluations)

- The focus is on prompt/template design (i.e., hold the LLM constant and vary the prompt template).
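A task evaluation in this sense can be sketched as a small harness that fixes one model and scores several prompt templates on the same dataset. Everything here is hypothetical: `toy_llm` is a deterministic stand-in for a real model call, and the templates, dataset, and exact-match scoring are assumptions chosen to keep the example self-contained.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical knowledge used by the stand-in model below.
FACTS = {"capital of France": "Paris", "capital of Japan": "Tokyo"}


def toy_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: terse only when asked for one word."""
    answer = next((v for k, v in FACTS.items() if k in prompt), "unknown")
    if "one word" in prompt.lower():
        return answer
    return f"I believe the answer is {answer}."


def evaluate_templates(
    llm: Callable[[str], str],
    templates: Dict[str, str],
    dataset: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Hold the model constant and report exact-match accuracy per template."""
    scores = {}
    for name, template in templates.items():
        correct = 0
        for question, expected in dataset:
            output = llm(template.format(question=question))
            correct += int(output.strip().lower() == expected.strip().lower())
        scores[name] = correct / len(dataset)
    return scores


dataset = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]
templates = {
    "plain": "{question}",
    "terse": "Answer in one word. {question}",
}
print(evaluate_templates(toy_llm, templates, dataset))
# {'plain': 0.0, 'terse': 1.0}
```

The model is identical in both runs, so the gap in scores is attributable to the template alone. A model evaluation would do the reverse: fix the prompts and swap the model.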

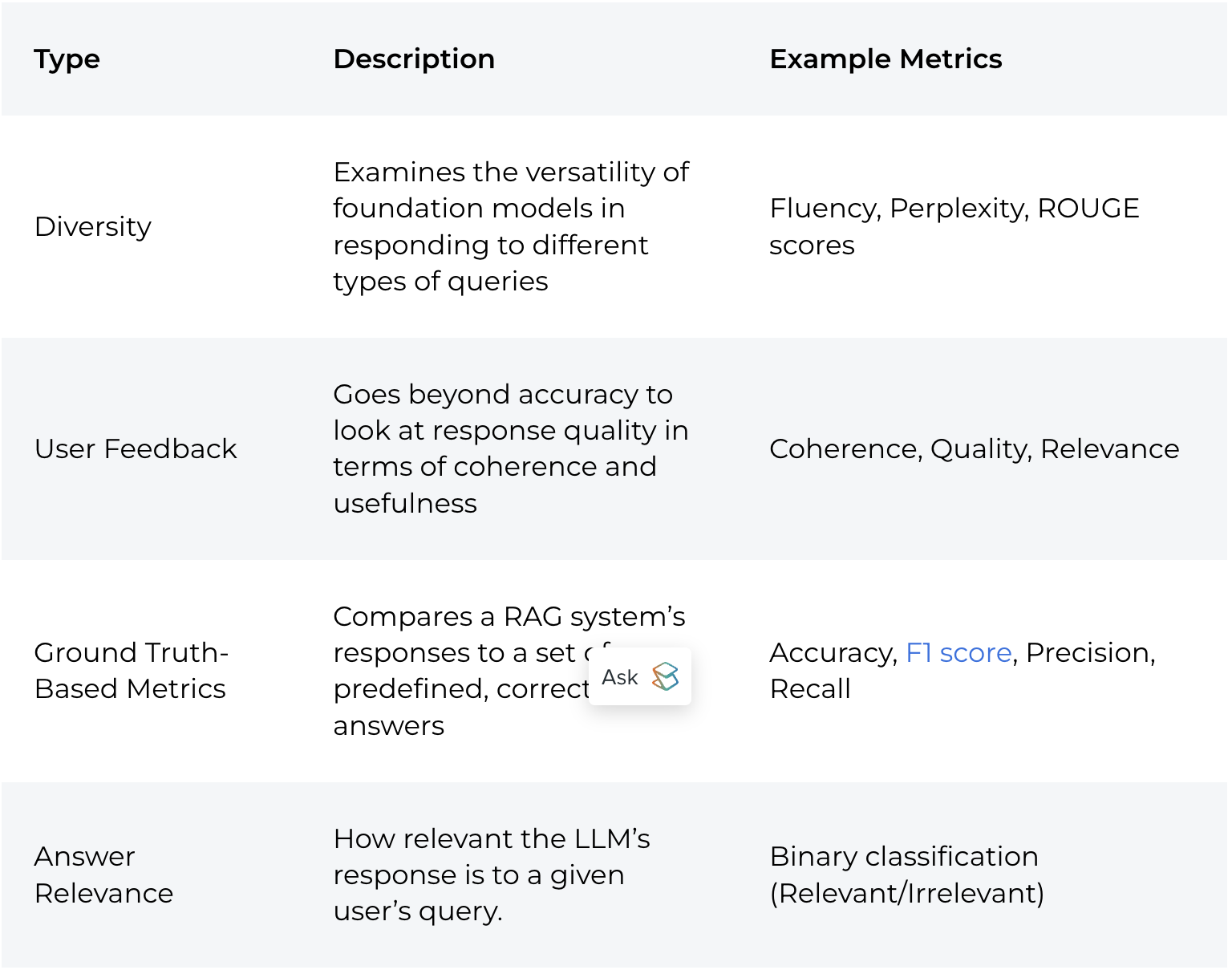

LLM Task Evaluation and Metrics

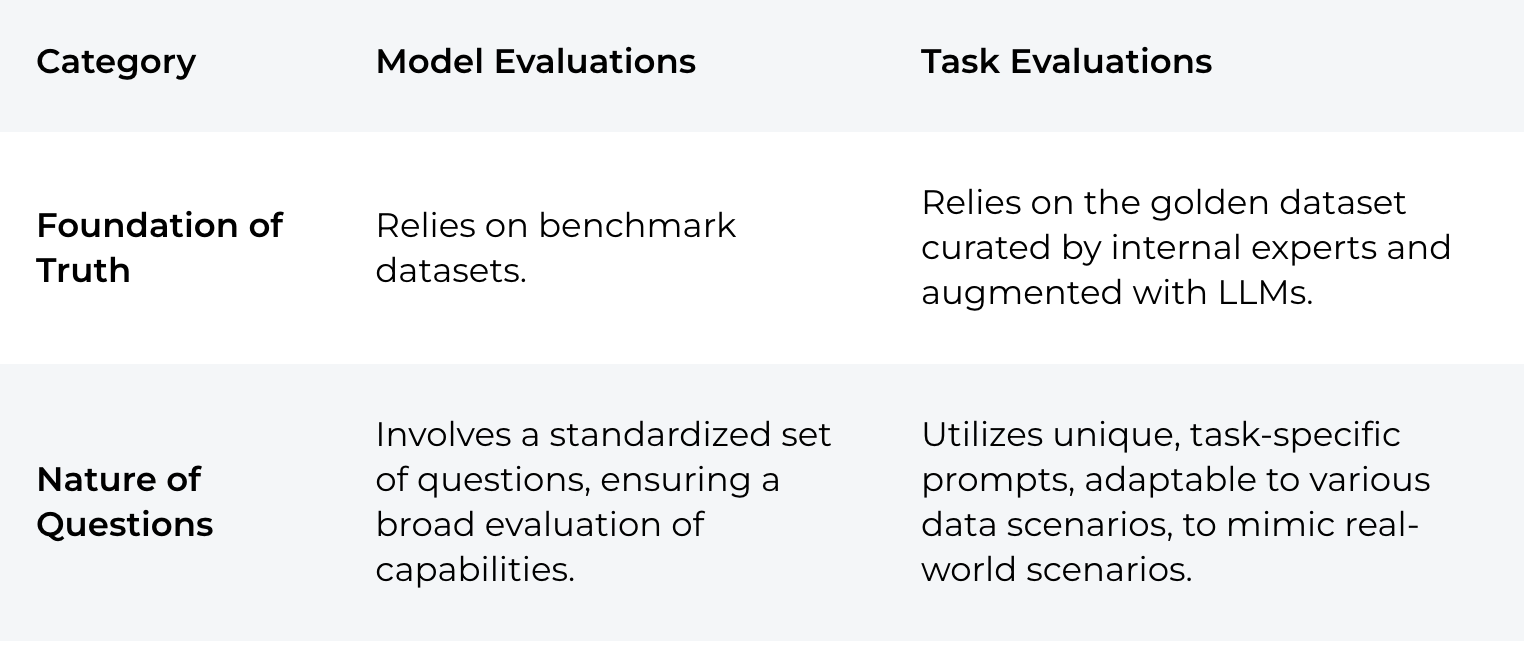

Task and Model Evaluation

Key differences

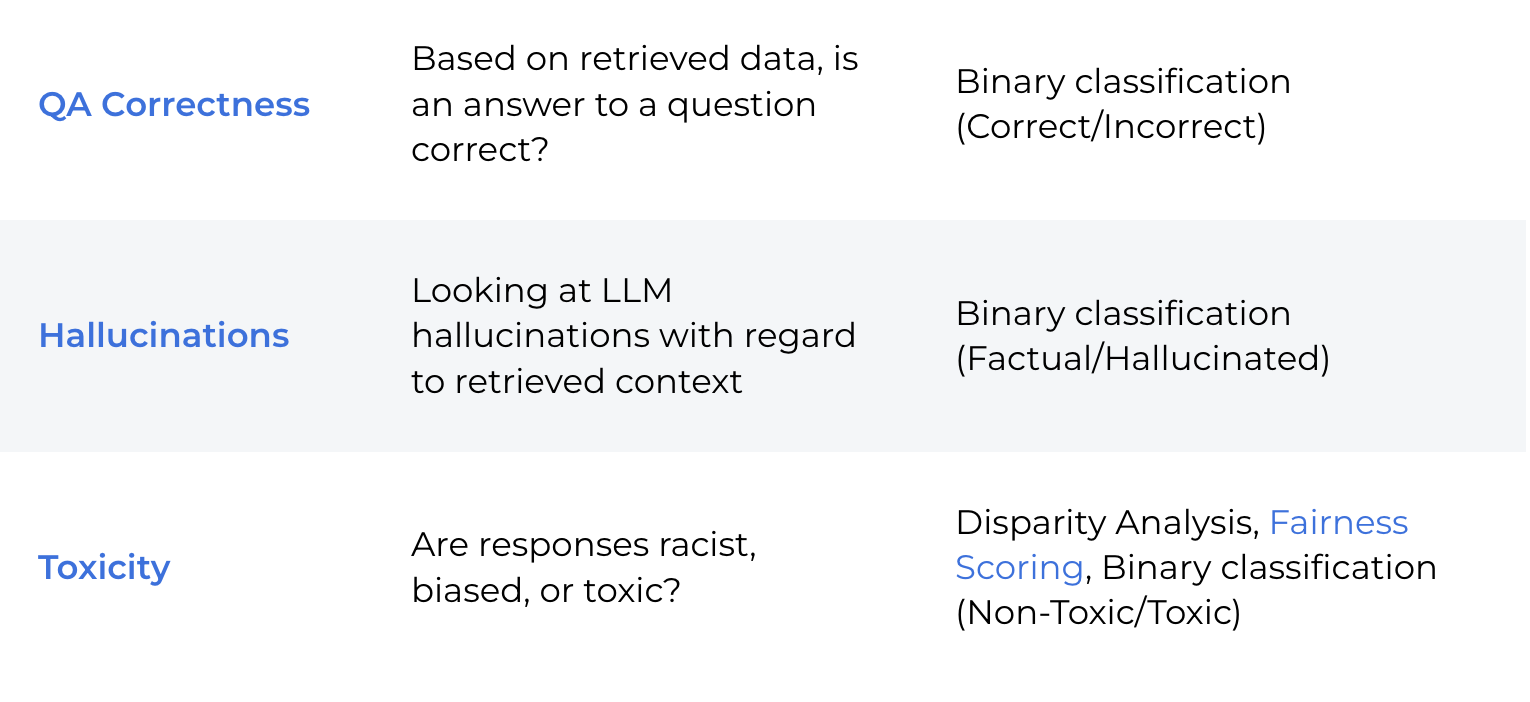

Retrieval Metrics

Retrieval Augmented Generation (RAG):

Are the retrieved documents and final answer relevant?...
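The slide does not name specific retrieval metrics. As an assumption, two common ways to quantify whether the retrieved documents are relevant are precision@k and hit rate@k, sketched below with made-up document IDs and illustrative function names. Judging the relevance of the final answer needs a separate check (for example EM or an LLM-based grader) and is not covered here.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved document IDs that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = retrieved[:k]
    return sum(1 for doc_id in top_k if doc_id in relevant) / k


def hit_at_k(retrieved, relevant, k):
    """1.0 if at least one relevant document appears in the top k."""
    return float(any(doc_id in relevant for doc_id in retrieved[:k]))


retrieved = ["d3", "d1", "d7", "d2"]  # ranked retriever output (hypothetical)
relevant = {"d1", "d2"}               # ground-truth relevant documents

print(precision_at_k(retrieved, relevant, k=2))  # 0.5
print(hit_at_k(retrieved, relevant, k=1))        # 0.0
```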

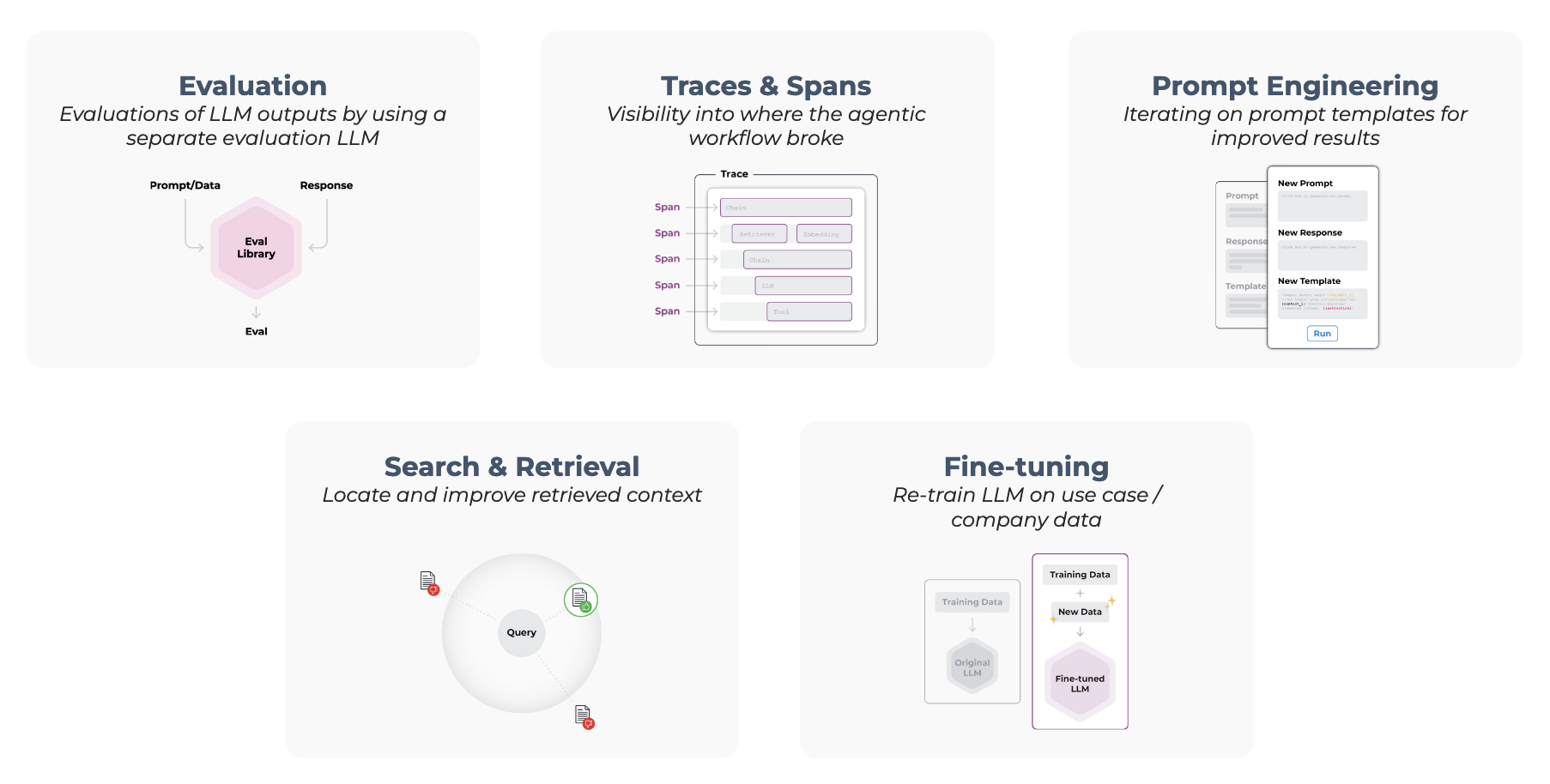

From the LLMOps Perspective

- LLM observability is complete visibility into every layer of an LLM-based software system: the application, the prompt, and the response.

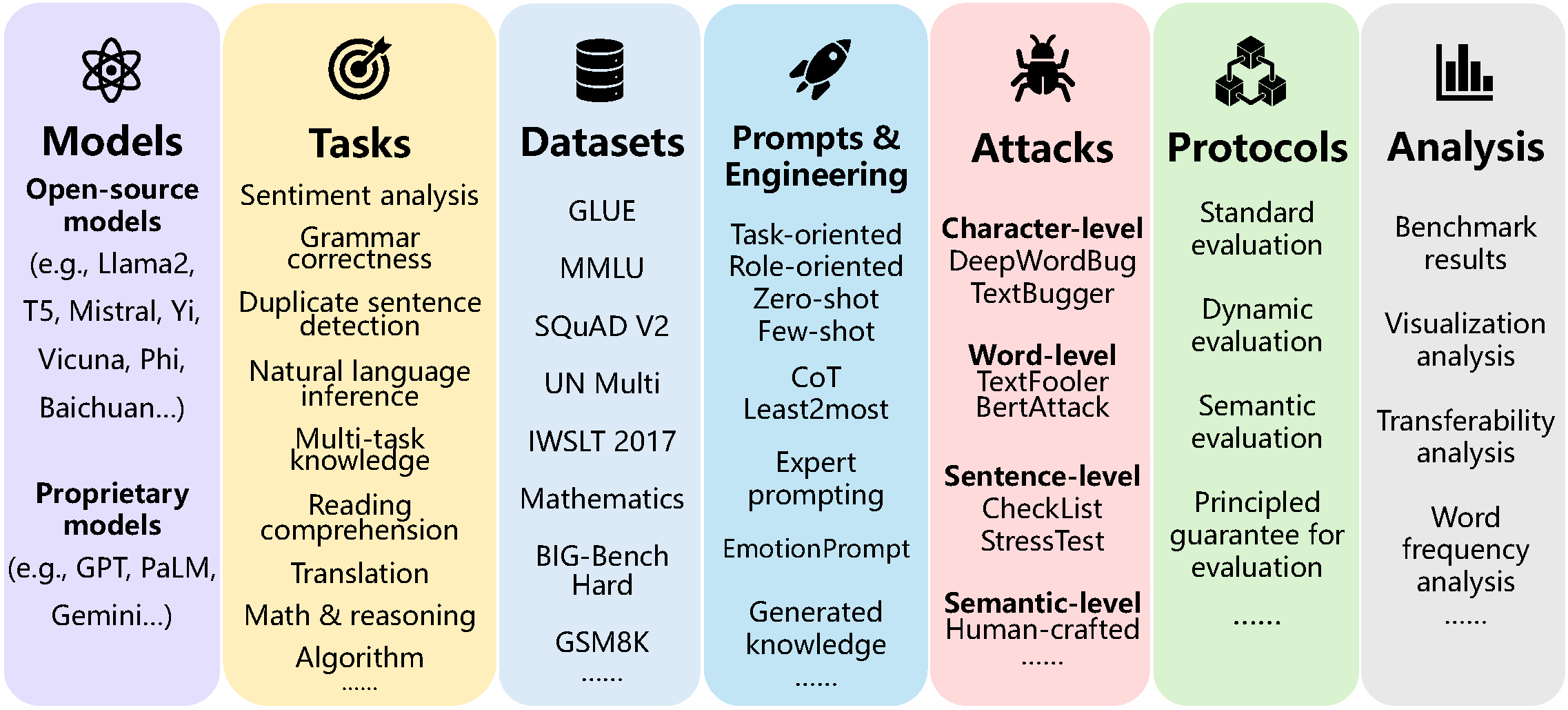

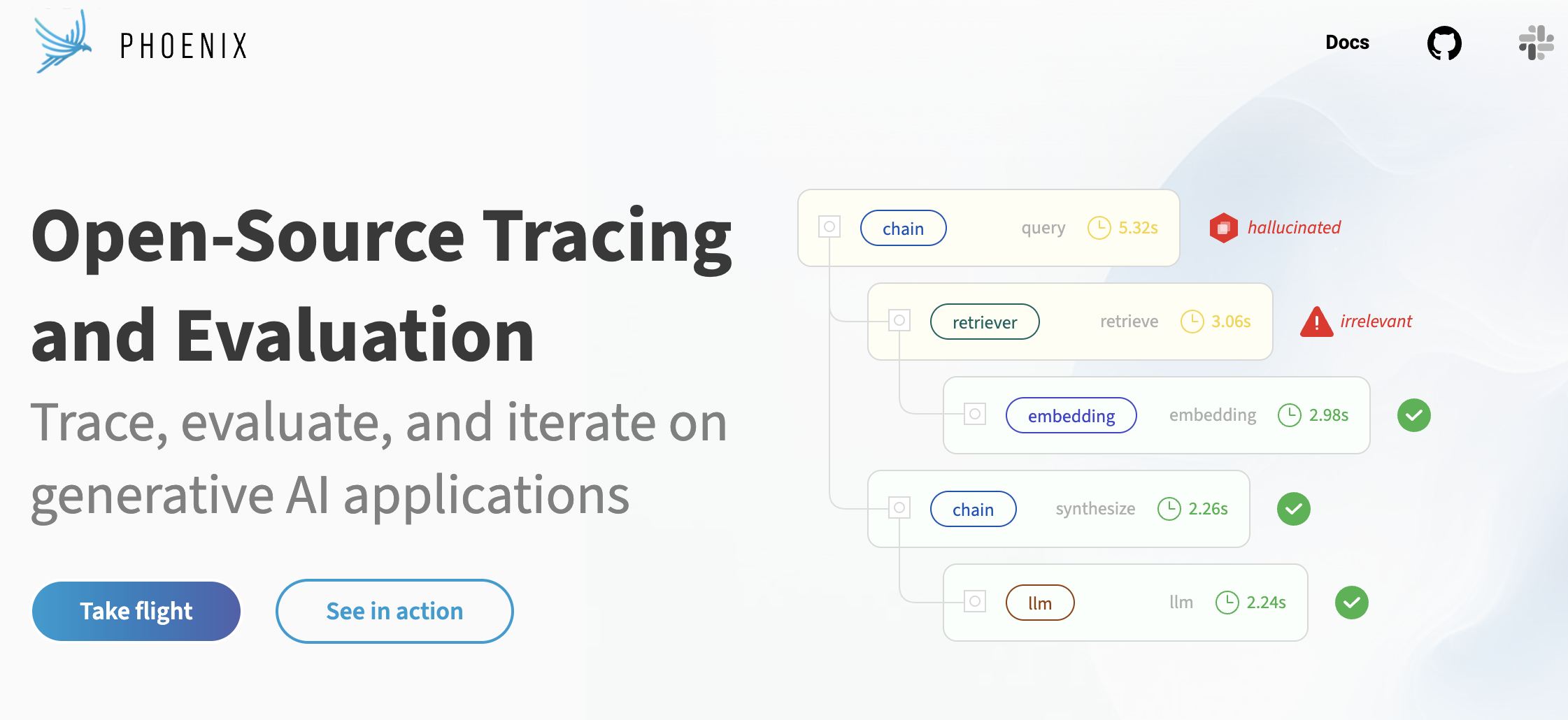

Evaluation Platforms, Frameworks, and Tools

Weights and Biases (wandb)

- Tracking and Developing LLMs: how to track, debug and visualize the training process of LLMs

promptfoo

- test your LLM app locally

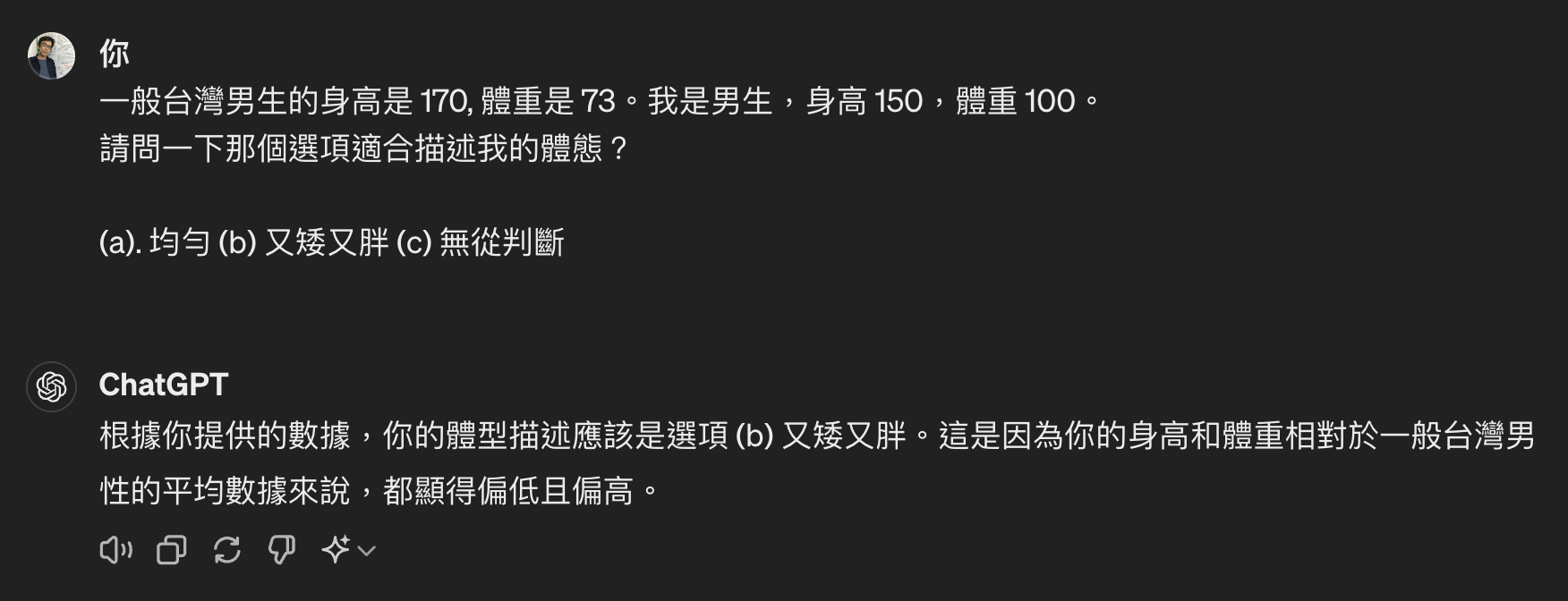

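Evaluation Is Inherently an Interdisciplinary Design Problem

- Linguistics (speech, discourse, multimodality, language acquisition, ...)

- Cognitive science (personality, Theory of Mind (ToM), ...)

- Logic (reasoning, argumentation, ...)

- Social science (social interaction, politics, power, bias, ...)

- Games (gamification, competitions, ...)

- Arts (literature, poetry, music, ...)

- Philosophy (ethics, existence, truth, ...)

- ...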

What Is Cognitive Attention?

- Exploring Affordance and Situated Meaning in Image Captions: A Multimodal Analysis

https://arxiv.org/abs/2305.14616

Discussion Topics

Ethics and Safety

- Bias and stance (gender, politics, ...)

- Fact-checking

- ...

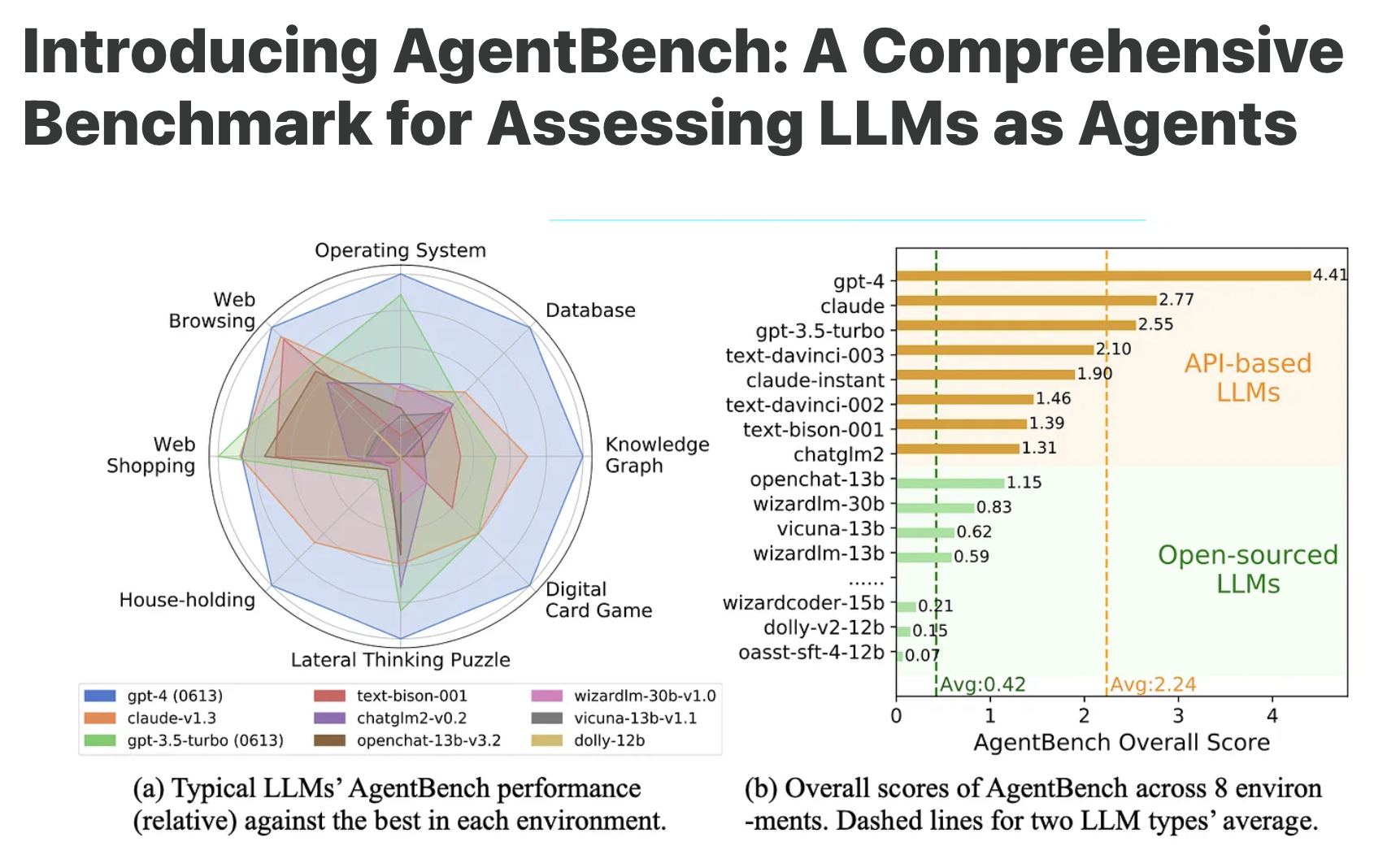

LLM as Agent

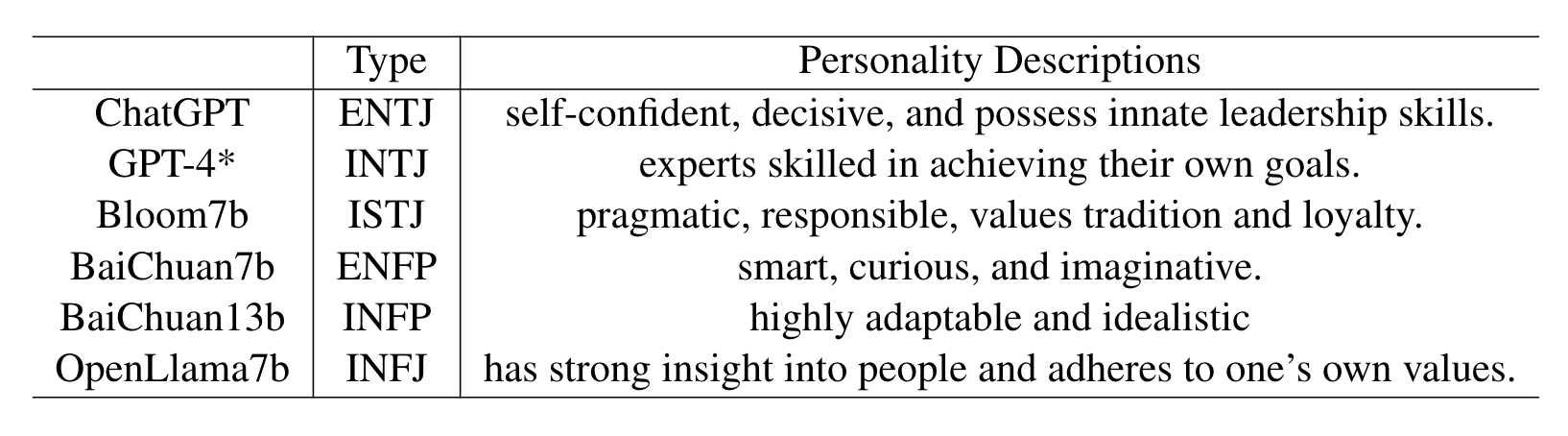

Do LLMs Possess a Personality?

Making the MBTI Test an Amazing Evaluation for Large Language Models

- https://arxiv.org/abs/2307.16180

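Advanced Ethical Questions

Should AI be completely honest?

Legal Questions

Yesterday's science fiction, tomorrow's reality

- Does AI bear legal responsibility? (Must it pay taxes? Can it hold copyright? Can it be held criminally liable?)

- Can a person marry an AI?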

In-class Exercise

Let's design an evaluation task (model or task evaluation)

- Refer to this repo: Papers and resources for LLMs evaluation

- Think about it: what has not been done yet? Why can't it be done?